Alvin Tan

@alvinwmtan

Followers: 75 · Following: 14 · Media: 4 · Statuses: 17

Joined September 2022

When I was finishing undergrad, I put together a short reader in the history and philosophy of linguistics. I've now ported it to Quarto Book and added a new chapter on computational approaches (along with other updates). Feedback is welcome! 😊 https://t.co/nicFJBc4x8

I'm recruiting PhD students + postdocs for my lab, coming to @JohnsHopkins in Fall 2025! Our brand new lab is at the intersection of cognitive science and AI, using computational + behavioral methods to understand how language works in minds and machines. Details below! (1/4)

Friends! I am looking for PhD and Master's students to join my group for Fall 2025. Please share with students you may know who are interested in the intersection of language, CogSci, and AI! The first deadline is Dec 1st; see my page for more info:

The 1st paper of my thesis is out in Child Development, OA! 🎉 «Cognate beginnings to bilingual lexical acquisition» w/ Daniela Avila-Varela, @IgCastillejo & Nuria Sebastian-Galles. @cbcUPF @UPFBarcelona @UAM_Madrid

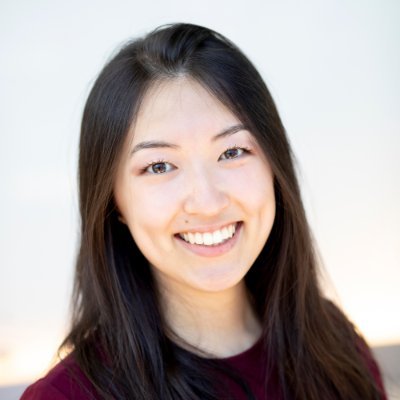

Are you thinking of applying to PhD programs in psychology but unsure about how to start or whether it's a good fit? Apply by Sep 29th to Stanford Psychology's 8th annual Paths to PhD info-session/workshop to have all of your questions answered! https://t.co/tex6YrzDzC

docs.google.com

Join Stanford Psychology graduate students, research assistants, and faculty for a free one-day virtual information session and workshop on applying to research positions and Ph.D. programs in...

Examining the robustness and generalizability of the shape bias: a meta-analysis - new paper by Samah Abdelrahim and me. Lots of heterogeneity in this important phenomenon, perhaps masking true developmental and cross-linguistic trends... https://t.co/GsrMjZ5cFV

Announcing the launch of the Open Encyclopedia of Cognitive Science: https://t.co/5OogaxRymH! OECS is a freely-available, growing collection of peer-reviewed articles introducing key topics in cogsci to a broad audience of students and scholars.

oecs.mit.edu

The Open Encyclopedia of Cognitive Science is a new, multidisciplinary guide to understanding the mind: a freely-available, growing collection of peer-reviewed articles introducing key topics to a...

Come see all these amazing folks presenting their work at #CogSci2024! I’m sorry I won’t be there!

@babyLMchallenge @wkvong Huge thanks to my fantastic undergrad RA Sunny Yu, collaborators @brialong, @AnyaWMa, Tonya Murray, Rebecca Silverman, @jdyeatman, and of course my amazing advisor @mcxfrank for making this work possible. Stay tuned for code/data + future iterations of DevBench! 😉 7/7

@babyLMchallenge @wkvong Overall, DevBench demonstrates that models vary in similarity to human responses over development, and areas of biggest divergence (e.g., trials with lexical ambiguities like “horn” or “net”) suggest entry points for further research towards the improvement of VLMs. 6/

@babyLMchallenge @wkvong As an example of development within a VLM, we analysed the training trajectory of OpenCLIP. Its similarity to humans increases with training on some tasks, and on the Visual Vocab task (VV) it also recovered developmental trends: earlier epochs were more similar to young kids. 5/

@babyLMchallenge @wkvong Across tasks, we find that models which are more accurate on a task are also more similar to humans. Furthermore, for some tasks, worse-performing models have more child-like responses, while better-performing models have more adult-like responses. 4/

@babyLMchallenge @wkvong We draw from the dev psych literature to construct a set of 7 tasks with corresponding human data spanning from infancy to adulthood. Crucially, each task has item-level data, so we can compare not just overall accuracy, but similarity in *response patterns* for each item. 3/

LMs are much less data-efficient than humans. Recent efforts (e.g., @babyLMchallenge, work by @wkvong) have introduced models trained on smaller, more developmentally realistic data, but such models have been (implicitly or explicitly) compared to adult levels of performance. 2/

How do we measure similarities and differences between how kids and models learn language? We introduce DevBench, a suite of multimodal developmental evaluations with corresponding human data from both children and adults. 🧵 1/ 📝: https://t.co/B299kyjl1X

What do preschool learning experiences look like? We examined variability in children’s language environments in a preschool classroom using a new dataset of naturalistic egocentric videos. 🧵 OSF: https://t.co/HBuohY8lfl 🔗: https://t.co/oFFBdGz2cU

@cogsci_soc #CogSci2024
