Ashish Kapoor

@akapoor_av8r

Followers: 6K · Following: 1K · Media: 195 · Statuses: 895

Building general-purpose robotics intelligence @genrobotics_ai | Aviator

Seattle, WA

Joined June 2016

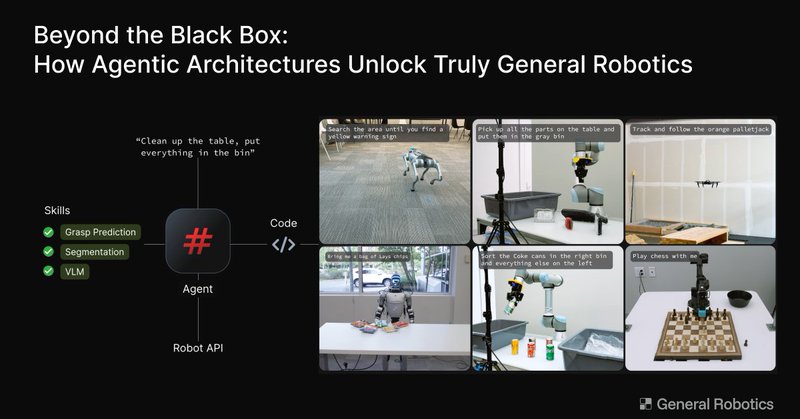

Robotics in the age of foundation models (FMs), cloud, and Starlink. We've been rethinking robot AI with a cloud-first, LLM-first approach. Fast to deploy. Modular. Data-centric. Built for long-horizon reasoning. Paper: https://t.co/XWVNZodcnx Blog: https://t.co/QymfLdyd80 🧵 #Robotics #AI #LLM #VLM

📄 Paper: https://t.co/XWVNZodcnx 🔗 Blog: https://t.co/QymfLdyd80 Thank you to all authors who contributed. Join us in exploring cloud- and AI-native robotics: an ecosystem where embodied agents evolve as rapidly as the AI models that drive them.

generalrobotics.company

This shift is conceptual as much as technical –– from:
- Control systems → learning systems
- Static deployments → continually evolving cognition
- Edge isolation → cloud collaboration.
n/12

D3. Fast deployment –– no local retraining or reinstallation. Simply make API calls to ever-evolving skill services, e.g., a mobile manipulator streams perception + planning from the cloud. n/11
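A minimal sketch of what "simply make API calls" might look like from the robot side, assuming a hypothetical HTTP/JSON skill service. The base URL, the `/skills/<name>/invoke` path, and the payload fields are illustrative assumptions, not GRID's actual API. The robot only builds the request; whichever version of the skill the service currently runs answers it, so nothing is retrained or reinstalled on board.

```python
import json
import urllib.request

# Hypothetical base URL for a cloud skill service (assumption, not a real endpoint).
SKILL_SERVICE = "https://skills.example.com/v1"


def build_skill_request(skill: str, payload: dict, robot_id: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request that invokes a named cloud skill.

    The robot never pins a model version: the service decides which version of
    `skill` answers, so upgrades happen server-side.
    """
    body = json.dumps({"robot_id": robot_id, "input": payload}).encode("utf-8")
    return urllib.request.Request(
        f"{SKILL_SERVICE}/skills/{skill}/invoke",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_skill_request("detect_objects", {"frame_id": 42}, robot_id="mobile-manip-01")
    print(req.get_method(), req.full_url)
    # Actually sending it would be: urllib.request.urlopen(req, timeout=0.5)
```

Keeping version selection on the server is what makes upgrades invisible to the robot; the trade-off is that each call now depends on network latency and availability.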

D2. Long-horizon planning –– Integration with classical and deep-ML constructs enables coordination across temporal scales, e.g., a robot arm perceives with a foundation model, acts through an actuator controller, and plans with the Stockfish chess engine. n/10
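To make the split across temporal scales concrete, here is a small sketch assuming a tabletop chess setup. Perception and planning are hypothetical stubs (a real system would call a foundation model and an engine such as Stockfish), and the board origin, square size, and heights are made-up values. One slow perceive/plan cycle yields six fast pick-and-place waypoints for the arm's controller.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Assumed board geometry in the arm's base frame (illustrative values only).
BOARD_ORIGIN = (0.30, -0.14)  # metres: centre of square a1
SQUARE_SIZE = 0.04            # metres per square


def square_to_xy(square: str) -> Tuple[float, float]:
    """Map a square such as 'e2' to (x, y) in the arm frame."""
    file_idx = ord(square[0]) - ord("a")
    rank_idx = int(square[1]) - 1
    return (BOARD_ORIGIN[0] + rank_idx * SQUARE_SIZE,
            BOARD_ORIGIN[1] + file_idx * SQUARE_SIZE)


@dataclass
class Pipeline:
    perceive: Callable[[bytes], str]  # image -> board state (e.g. FEN); slow, model-backed
    plan: Callable[[str], str]        # board state -> UCI move like 'e2e4'; e.g. a chess engine
    hover_z: float = 0.10             # metres above the board while moving
    grasp_z: float = 0.02             # metres above the board for pick/place

    def step(self, image: bytes) -> List[Tuple[float, float, float]]:
        """Perceive once, plan once, then emit many low-level waypoints."""
        move = self.plan(self.perceive(image))
        (x0, y0), (x1, y1) = square_to_xy(move[:2]), square_to_xy(move[2:4])
        return [
            (x0, y0, self.hover_z), (x0, y0, self.grasp_z), (x0, y0, self.hover_z),  # pick
            (x1, y1, self.hover_z), (x1, y1, self.grasp_z), (x1, y1, self.hover_z),  # place
        ]


if __name__ == "__main__":
    # Hypothetical stubs standing in for the foundation model and the chess engine.
    pipeline = Pipeline(
        perceive=lambda img: "rnbqkbnr/pppppppp/8/8/8/8/PPPPPPPP/RNBQKBNR w KQkq - 0 1",
        plan=lambda fen: "e2e4",
    )
    for waypoint in pipeline.step(b""):
        print(waypoint)
```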

D1. Modularity and scalability –– A single substrate supports heterogeneous robots (manipulators, drones, mobile bases, humanoids) by streaming shared skills as APIs. No bespoke stack per platform, just standardized “Skill Access Protocols”. n/9
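The post does not spell out what a Skill Access Protocol contains, so the following is only a hypothetical sketch of the idea: one shared catalog, each skill declaring which form factors and sensors it needs, and each robot receiving just the skills it can use. All class, skill, and sensor names are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class Robot:
    name: str
    embodiment: str           # e.g. "manipulator", "drone", "mobile_base", "humanoid"
    sensors: FrozenSet[str]


@dataclass(frozen=True)
class Skill:
    name: str
    requires_sensors: FrozenSet[str]
    embodiments: FrozenSet[str]  # form factors this skill can serve


@dataclass
class SkillCatalog:
    """One shared catalog for every robot (hypothetical stand-in for a common substrate)."""
    skills: Dict[str, Skill] = field(default_factory=dict)

    def register(self, skill: Skill) -> None:
        self.skills[skill.name] = skill

    def available_to(self, robot: Robot) -> List[str]:
        # Usable if the robot's form factor is supported and it has every required sensor.
        return sorted(
            s.name for s in self.skills.values()
            if robot.embodiment in s.embodiments and s.requires_sensors <= robot.sensors
        )


if __name__ == "__main__":
    catalog = SkillCatalog()
    catalog.register(Skill("detect_objects", frozenset({"rgb"}),
                           frozenset({"manipulator", "drone", "mobile_base", "humanoid"})))
    catalog.register(Skill("grasp", frozenset({"rgb"}), frozenset({"manipulator", "humanoid"})))
    print(catalog.available_to(Robot("arm-1", "manipulator", frozenset({"rgb"}))))
```

Supporting a new form factor then means describing its embodiment and sensors, not building another stack.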

Benchmarking: Sustaining 30 FPS vision streaming across different resolutions. n/8
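A back-of-the-envelope check on what 30 FPS implies, assuming uncompressed 8-bit RGB frames (the post does not state the codec or the exact resolutions benchmarked). Every pipelined stage (capture, encode, transfer, inference) has to keep up with one frame roughly every 33 ms, and raw bandwidth grows with pixel count, which is why compression matters at higher resolutions.

```python
def stream_budget(width: int, height: int, fps: float = 30.0, bytes_per_pixel: int = 3):
    """Return (per-frame time budget in ms, raw uncompressed bandwidth in MB/s)."""
    frame_ms = 1000.0 / fps
    raw_mb_per_s = width * height * bytes_per_pixel * fps / 1e6
    return frame_ms, raw_mb_per_s


if __name__ == "__main__":
    for w, h in [(640, 480), (1280, 720), (1920, 1080)]:
        ms, mbps = stream_budget(w, h)
        print(f"{w}x{h}: {ms:.1f} ms/frame, {mbps:.1f} MB/s raw")
    # 640x480: 33.3 ms/frame, 27.6 MB/s raw
    # 1280x720: 33.3 ms/frame, 82.9 MB/s raw
    # 1920x1080: 33.3 ms/frame, 186.6 MB/s raw
```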

Benchmarking: Achieving control latencies on the order of tens of milliseconds, even across geographic regions. n/7
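The thread does not describe its measurement method. One generic way to check a "tens of milliseconds" claim is to time many round trips and report tail percentiles rather than the mean, since a control loop is limited by its slowest calls. In this sketch `call` is a placeholder for whatever remote skill invocation is being measured.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency_ms(call: Callable[[], object], n: int = 200, warmup: int = 10) -> Dict[str, float]:
    """Time `call` n times; return p50/p95/p99/max round-trip latency in milliseconds."""
    for _ in range(warmup):  # discard connection setup and cold-cache effects
        call()
    samples = []
    for _ in range(n):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000.0)
    # n=100 yields the 99 cut points p1..p99; "inclusive" keeps them within the sample range.
    q = statistics.quantiles(samples, n=100, method="inclusive")
    return {"p50": q[49], "p95": q[94], "p99": q[98], "max": max(samples)}


if __name__ == "__main__":
    # Placeholder for a remote control call; swap in the real cross-region invocation.
    print(measure_latency_ms(lambda: time.sleep(0.001), n=50))
```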

Benchmarking: A huge amount of careful engineering went into running modular skills with full-scale models in near real time. n/6

This naturally enables agents ––
- Elastic infra with MCP servers exposing each skill in LLM-friendly form
- Each robot is a lightweight node that invokes skills (perception, planning, reasoning) as composable services
- Multiple agents can work together on complex tasks.
n/5
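A toy sketch of "each skill in LLM-friendly form": every skill is registered with a name, a natural-language description, and a JSON-Schema-style `inputSchema`, loosely mirroring the shape MCP uses for tool listings. This is not the MCP SDK or its wire protocol. `SkillServer`, `detect_objects`, and `move_to` are hypothetical, and the handlers are stubs.

```python
import json
from typing import Any, Callable, Dict


class SkillServer:
    """Toy registry exposing robot skills as LLM-readable tool descriptors (not the MCP SDK)."""

    def __init__(self) -> None:
        self._tools: Dict[str, Dict[str, Any]] = {}
        self._handlers: Dict[str, Callable[..., Any]] = {}

    def skill(self, name: str, description: str, params: Dict[str, str]):
        """Decorator: register a handler plus a JSON-Schema-style description of its inputs."""
        def wrap(fn: Callable[..., Any]) -> Callable[..., Any]:
            self._tools[name] = {
                "name": name,
                "description": description,
                "inputSchema": {
                    "type": "object",
                    "properties": {p: {"type": t} for p, t in params.items()},
                    "required": list(params),
                },
            }
            self._handlers[name] = fn
            return fn
        return wrap

    def list_tools(self) -> str:
        # What an LLM agent would read to decide which skill to call.
        return json.dumps(list(self._tools.values()), indent=2)

    def call_tool(self, name: str, arguments: Dict[str, Any]) -> Any:
        required = self._tools[name]["inputSchema"]["required"]
        missing = [p for p in required if p not in arguments]
        if missing:
            raise ValueError(f"{name}: missing arguments {missing}")
        return self._handlers[name](**arguments)


server = SkillServer()


@server.skill("detect_objects", "Return labels of objects visible in a camera frame.", {"frame_id": "integer"})
def detect_objects(frame_id: int):
    return ["mug", "drawer_handle"]  # stub in place of a perception model


@server.skill("move_to", "Drive the robot base to a named location.", {"location": "string"})
def move_to(location: str):
    return f"arrived at {location}"  # stub in place of a navigation stack


if __name__ == "__main__":
    print(server.list_tools())
    print(server.call_tool("move_to", {"location": "kitchen"}))
```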

We propose a multi-layered architecture that consists of:
- Fixed on-robot layer: low-latency control
- Fixed Skill Access Protocol: standardized API for skill invocation
- Evolving cloud layer: training, sim, orchestration & skill composition
The ever-evolving cloud hosts
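A minimal sketch of the three layers, assuming the protocol can be modeled as a single `invoke(skill, payload)` call. `SkillAccess`, `CloudLayer`, `OnRobotLayer`, and the `plan_path` skill are hypothetical names; the point is that the robot-side code and the contract stay fixed while the cloud redeploys skills underneath them.

```python
from typing import Any, Callable, Dict, Protocol, Tuple

Payload = Dict[str, Any]
SkillFn = Callable[[Payload], Payload]


class SkillAccess(Protocol):
    """Fixed contract between robot and cloud (hypothetical stand-in for the Skill Access Protocol)."""

    def invoke(self, skill: str, payload: Payload) -> Payload: ...


class CloudLayer:
    """Evolving layer: skills can be redeployed without touching any robot."""

    def __init__(self) -> None:
        self._skills: Dict[str, Tuple[str, SkillFn]] = {}

    def deploy(self, skill: str, version: str, fn: SkillFn) -> None:
        self._skills[skill] = (version, fn)  # latest deployment replaces the old one

    def invoke(self, skill: str, payload: Payload) -> Payload:
        version, fn = self._skills[skill]
        return {"version": version, **fn(payload)}


class OnRobotLayer:
    """Fixed layer: local control that reaches the cloud only through SkillAccess."""

    def __init__(self, skills: SkillAccess) -> None:
        self.skills = skills

    def control_step(self, goal: Tuple[float, float]) -> Payload:
        plan = self.skills.invoke("plan_path", {"goal": goal})
        # A real robot would track plan["waypoints"] with its low-latency controller here.
        return plan


if __name__ == "__main__":
    cloud = CloudLayer()
    robot = OnRobotLayer(cloud)
    cloud.deploy("plan_path", "v1", lambda p: {"waypoints": [p["goal"]]})
    print(robot.control_step((1.0, 2.0)))
    # Upgrade the skill in the cloud; the robot code above is unchanged.
    cloud.deploy("plan_path", "v2", lambda p: {"waypoints": [(0.5, 1.0), p["goal"]]})
    print(robot.control_step((1.0, 2.0)))
```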

Modern robotics must evolve beyond the 1990s –– from siloed, closed, edge-centric systems → AI-first, cloud-first architectures. We improve across 3 dimensions:
- Ability to rapidly create embodied AI across form factors, scenarios, and use cases.
- Enable long-horizon

The current robot AI ecosystem is fractured: ROS stacks, sims, datasets, and controllers each re-implement core functionality. Robots are built in silos, with intelligence welded to hardware. Result? Abysmal engineering efficiency: spending millions and years just to create a POC

Our NVIDIA #SIGGRAPH workshop on embodied AI is now available on demand. Get hands-on with GRID to create, deploy, and adapt robot behaviors in minutes, translating visual ideas into real-world action. 🔗

nvidia.com

In the era of embodied AI, the boundary between digital creation and physical action is vanishing

Time to move towards more modern robotics architectures that are AI-first and cloud-first -- enabling agentic workflows.

Agents have transformed software; now it's time for robotics. Today, we’re revealing our blueprint for building agentic robots –– machines that can reason, converse, compose, and remember. https://t.co/1bmR9vz3lJ

One GRID to control them all!

Unveiling agentic robotics on GRID –– our blueprint for machines that can reason, converse, compose, and remember. Agents have transformed software; now it's time for robotics.
- Modular tools for perception, planning, and robot control.
- Scalable, elastic infrastructure to

Why can robots do backflips but still struggle to open a drawer??? [📍 Link to project] Precise grasping and whole-body coordination make it harder than acrobatics. DreamControl takes a step toward solving this. It combines diffusion models and reinforcement learning to teach

You very rarely see policies like this that show actual autonomous manipulation.

Last week, we shared DreamControl: a scalable framework for whole-body humanoid control that fuses diffusion priors with reinforcement learning to enable real-world scene interaction.
- Diffusion + RL → natural whole-body skills
- Policies run in real time → bridges sim-to-real

Thank you to the researchers and engineers who contributed to this effort, led by @jonathanhuang11: @DvijKalaria, @sudarshan_s_h, @pushkalkatara, Sangkyung Kwak, @sarthak__bhagat, Shankar Sastry, Srinath Sridhar, @saihv
