Amey Agrawal

@agrawalamey12

Followers

577

Following

3K

Media

49

Statuses

222

Systems for AI | Forging @ProjectVajra | CS PhD candidate @gtcomputing, visiting scholar @MSFTResearch

Atlanta, GA

Joined January 2016

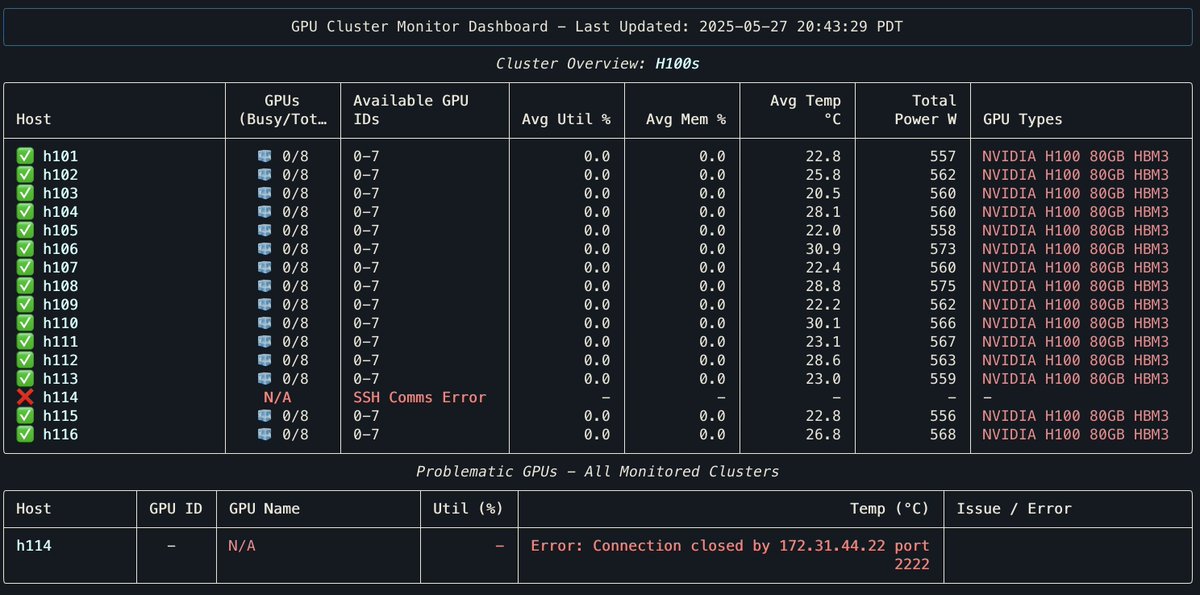

A few of us at Georgia Tech are building Vajra, an open‑source inference engine built for the next wave of serving problems:

* Multimodal streaming first: low‑latency realtime video, audio pipelines.
* Highly distributed computing: managing 1000s of GPUs.
* Hierarchical Graph.

RT @ramaramjee: Evaluation of LLM serving systems is tricky because several factors influence performance (prefill length, decode length, p…

Interesting work on long-context inference from @nvidia, where they scale KV parallelism on GB200 NVL72 systems! To learn more about accelerating long-context inference and the trade-offs between different parallelism dimensions, check out our paper, Medha:

arxiv.org

As large language models (LLMs) handle increasingly longer contexts, serving long inference requests of millions of tokens presents unique challenges. We show that existing work for long context...

What if you could ask a chatbot a question the size of an entire encyclopedia and get an answer in real time? Multi-million token queries with 32x more users are now possible with Helix Parallelism, an innovation by #NVIDIAResearch that drives inference at huge scale. 🔗

Super cool paper, DeepSeek-style communication-computation overlap on steroids! DeepSeek creates separate microbatches to overlap computation and communication, thus hiding the EP communication overhead. TokenWeave splits an existing batch into smaller microbatches to overlap.

TokenWeave is the first system that almost fully hides the ~20% communication cost during inference of LLMs that are sharded in a tensor-parallel manner on H100 DGXs. Check out the thread/paper below!

RT @agrawalamey12: Super excited to share another incredible system that we have built over the past two years! Training giant foundation…

Maya offers a transparent, accurate, and efficient way to model and optimize large-scale DL training without needing expensive hardware clusters for exploration. A crucial step towards sustainable AI! Read the paper: Work done with @Y_Srihas, @1ntEgr8, …

arxiv.org

Training large foundation models costs hundreds of millions of dollars, making deployment optimization critical. Current approaches require machine learning engineers to manually craft training...
