Aditi Mavalankar

@aditimavalankar

Followers: 2K

Following: 1K

Media: 8

Statuses: 133

Research Scientist @DeepMind working on Gemini Thinking

London, UK

Joined March 2017

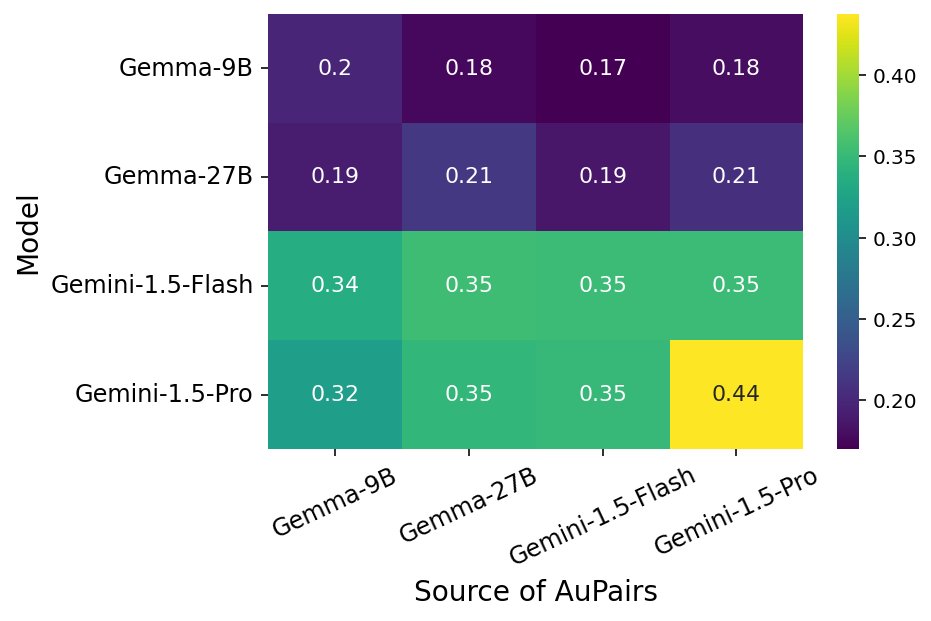

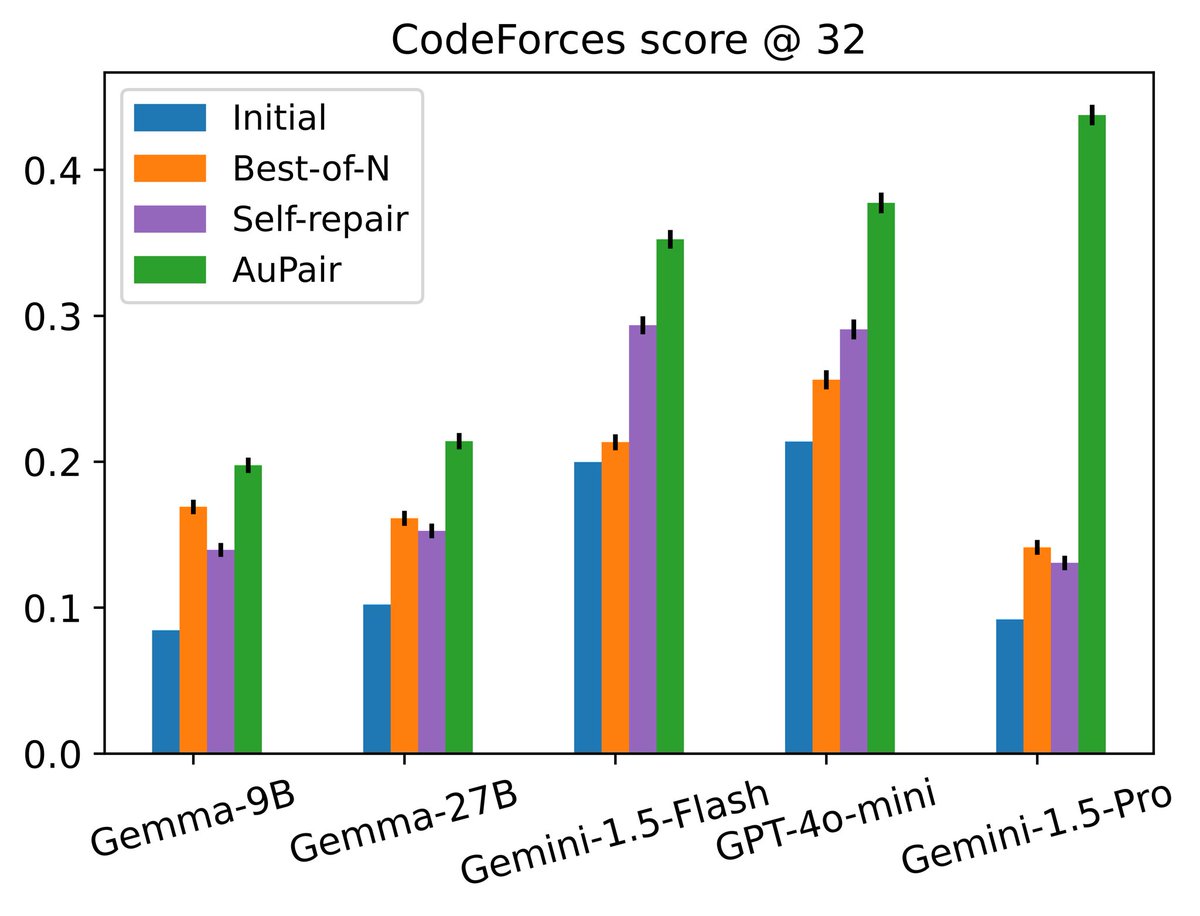

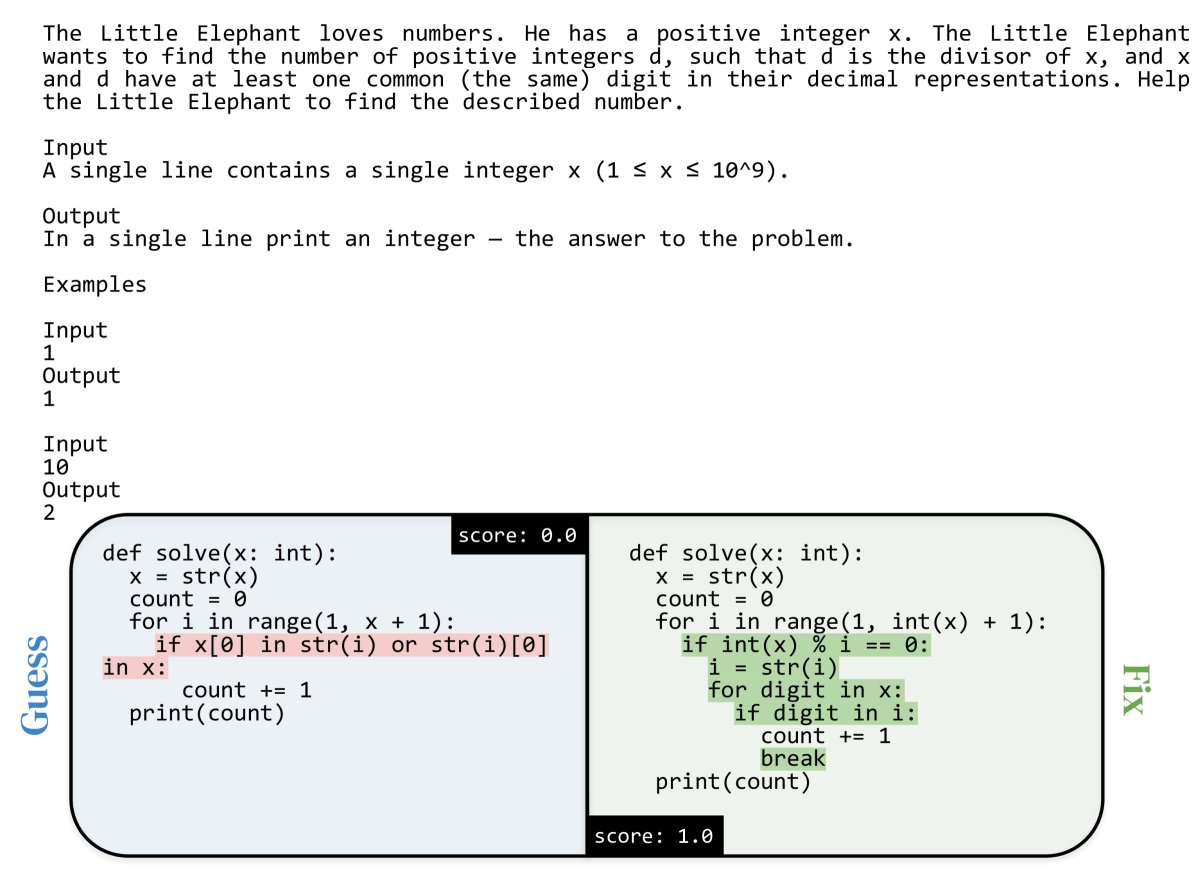

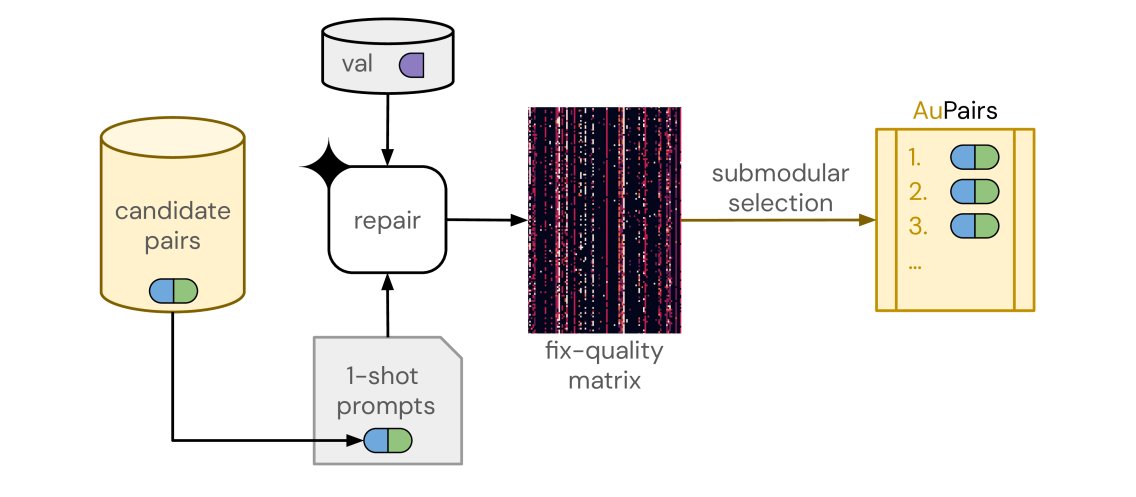

Excited to share our recent work, AuPair, an inference-time technique that builds on the premise of in-context learning to improve LLM coding performance!

arxiv.org

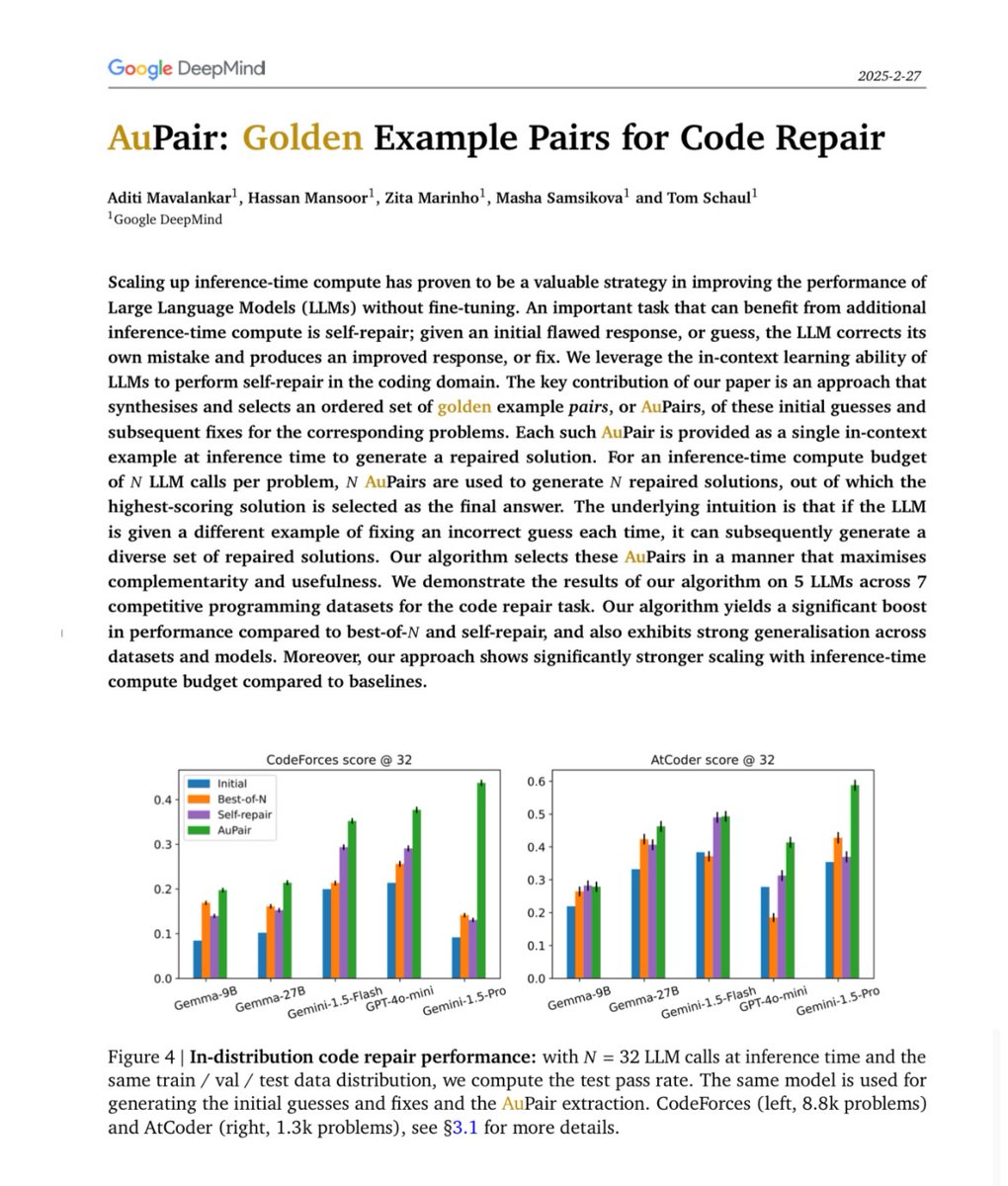

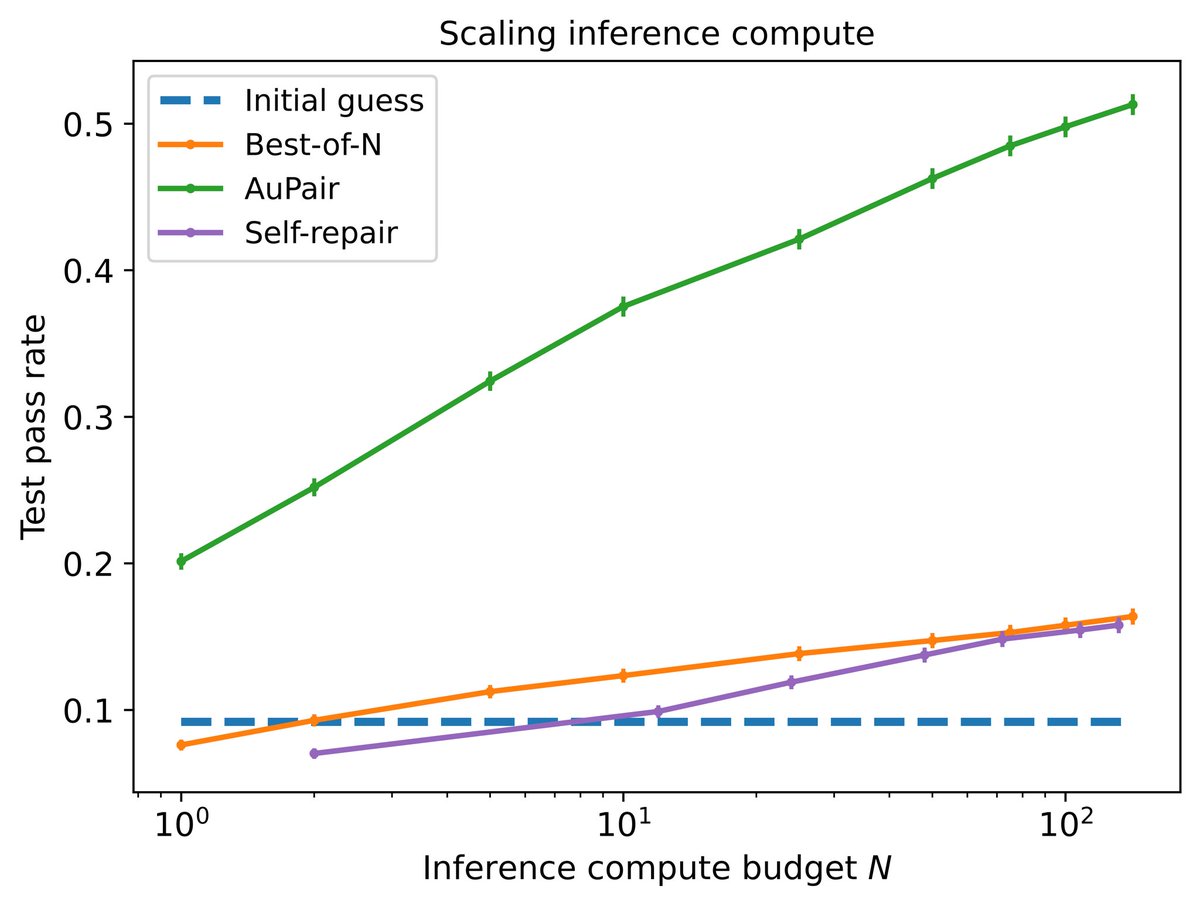

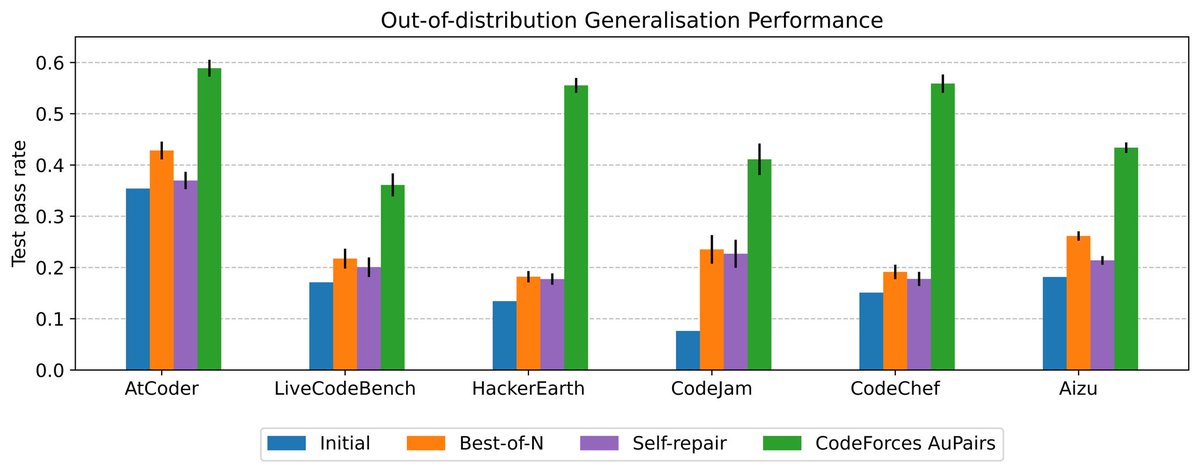

Scaling up inference-time compute has proven to be a valuable strategy in improving the performance of Large Language Models (LLMs) without fine-tuning. An important task that can benefit from...


An advanced version of Gemini with Deep Think achieved gold medal-level performance at IMO 2025! 🥇 Very happy to have been a small part of this collaboration on the inference side, and congrats to everyone involved!

An advanced version of Gemini with Deep Think has officially achieved gold medal-level performance at the International Mathematical Olympiad 🥇. It solved 5️⃣ out of 6️⃣ exceptionally difficult problems, involving algebra, combinatorics, geometry and number theory. Here’s how 🧵


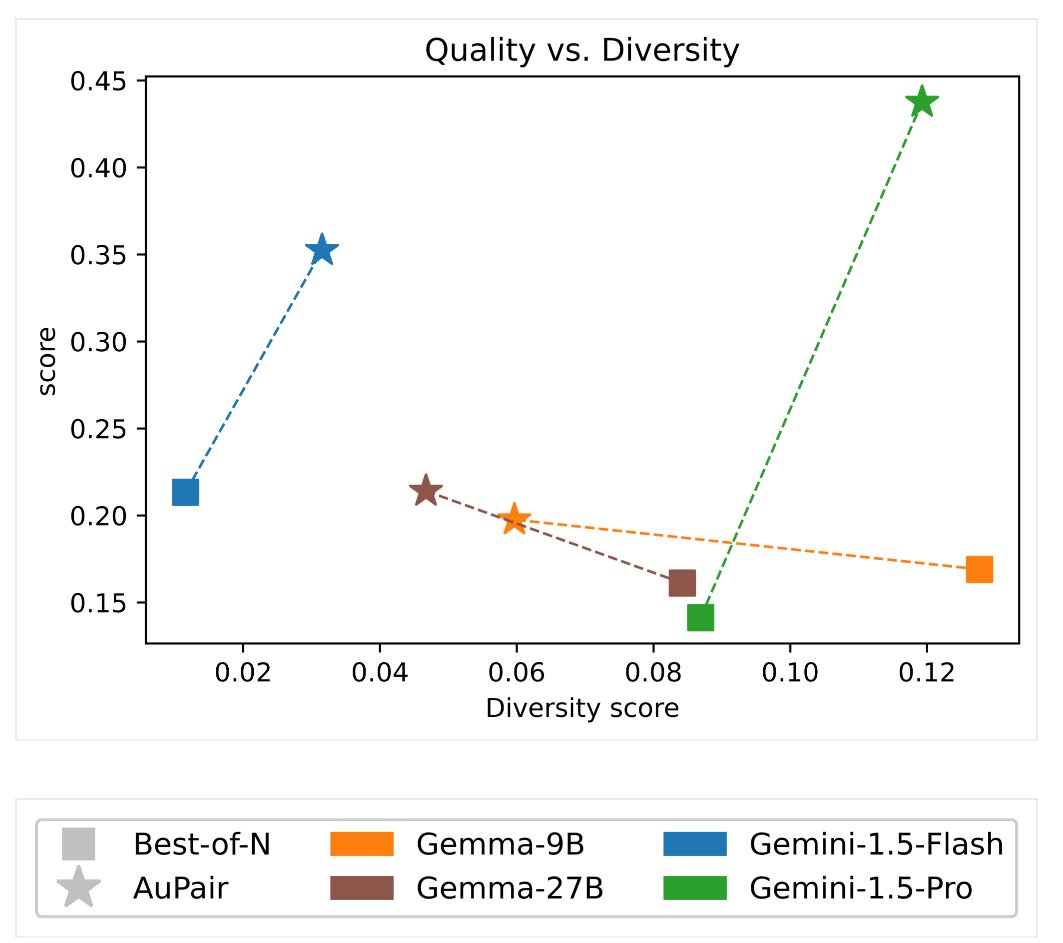

On my way to #ICML2025 to present our algorithm, which scales strongly with inference compute in both performance and sample diversity! 🚀 Reach out if you’d like to chat more!

Excited to share our recent work, AuPair, an inference-time technique that builds on the premise of in-context learning to improve LLM coding performance!


Accepted to #ICML2025. See you in Vancouver!

Excited to share our recent work, AuPair, an inference-time technique that builds on the premise of in-context learning to improve LLM coding performance!


Outstanding achievement! Congratulations, @demishassabis and John Jumper!! 🎉

Huge congratulations to @DemisHassabis and John Jumper on being awarded the 2024 Nobel Prize in Chemistry for protein structure prediction with #AlphaFold, along with David Baker for computational protein design. This is a monumental achievement for AI, for computational…


RT @demishassabis: Advanced mathematical reasoning is a critical capability for modern AI. Today we announce a major milestone in a longsta…


RT @MichaelD1729: Presenting new work clarifying our perspective on open-endedness (co-lead @edwardfhughes). A clear consequence of our def…


RT @demishassabis: Delighted and honoured to receive a Knighthood for services to AI. It’s been an incredible journey so far building @Goog…


RT @_rockt: I am really excited to reveal what @GoogleDeepMind's Open Endedness Team has been up to 🚀. We introduce Genie 🧞, a foundation…


If this is the way @British_Airways treats business class customers, I wonder what kind of treatment I would have received had I been flying economy class, as I usually do. Threatening foreigners with security when the fault is 100% on the airline is clearly wrong. Do better!
