Yi Xu

@_yixu

Followers: 494
Following: 53
Media: 13
Statuses: 41

AI researcher, interested in LLMs and reinforcement learning | Previously @UCL_DARK, @imperialcollege, @UniMelb

London

Joined June 2024

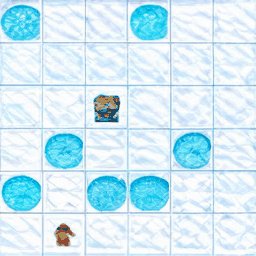

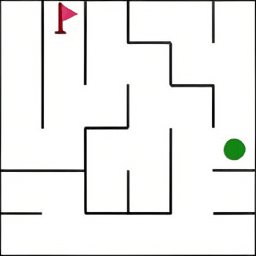

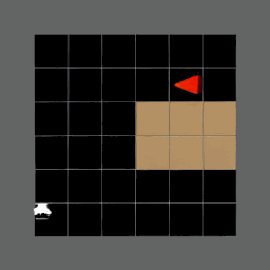

🚀Let’s Think Only with Images. No language and no verbal thought.🤔 Let’s think through a sequence of images💭, like how humans picture steps in their minds🎨. We propose Visual Planning, a novel reasoning paradigm that enables models to reason purely through images.

RT @LauraRuis: LLMs can be programmed by backprop 🔎. In our new preprint, we show they can act as fuzzy program interpreters and databases.…

RT @hanzhou032: Automating Multi-Agent Design: 🧩Multi-agent systems aren’t just about throwing more LLM agents together. 🛠️They require m…

Huge appreciation to @li_chengzu, @hanzhou032, @wanxingchen_, @caiqizh, @annalkorhonen, and @licwu for their incredible support and collaboration. It’s been truly rewarding to work together!

📘 Want to know more? 📄 Read the paper: ⭐️Star the repo:

github.com

Visual Planning: Let's Think Only with Images. Contribute to yix8/VisualPlanning development by creating an account on GitHub.

RT @li_chengzu: Happy to share that MVoT got accepted to ICML 2025 @icmlconf 🎉🎉#ICML. If you are interested, do check out our paper and he…

RT @LauraRuis: This got accepted to #ICML2025 as a *spotlight paper* (top 2.6%!) 🚀 --- work that @_yixu did as an Msc student! Surely this…

RT @hahahawu2: 💡Unlocking Efficient Long-to-Short LLM Reasoning with Model Merging. We comprehensively study existing model merging methods…

Huge thanks to @LauraRuis, @_rockt and @_robertkirk for their invaluable collaboration and support. It has been a pleasure working together.

Want to know more? 📖 Read our paper to understand why rankings are unstable and how to fix them! 🚀

arxiv.org

Automatic evaluation methods based on large language models (LLMs) are emerging as the standard tool for assessing the instruction-following abilities of LLM-based agents. The most common method...
