Anthony Chen 🤖

@_anthonychen

Followers

462

Following

5K

Media

17

Statuses

186

ai research @googledeepmind ♊️ phd from @ucirvine

little worm in big apple

Joined May 2017

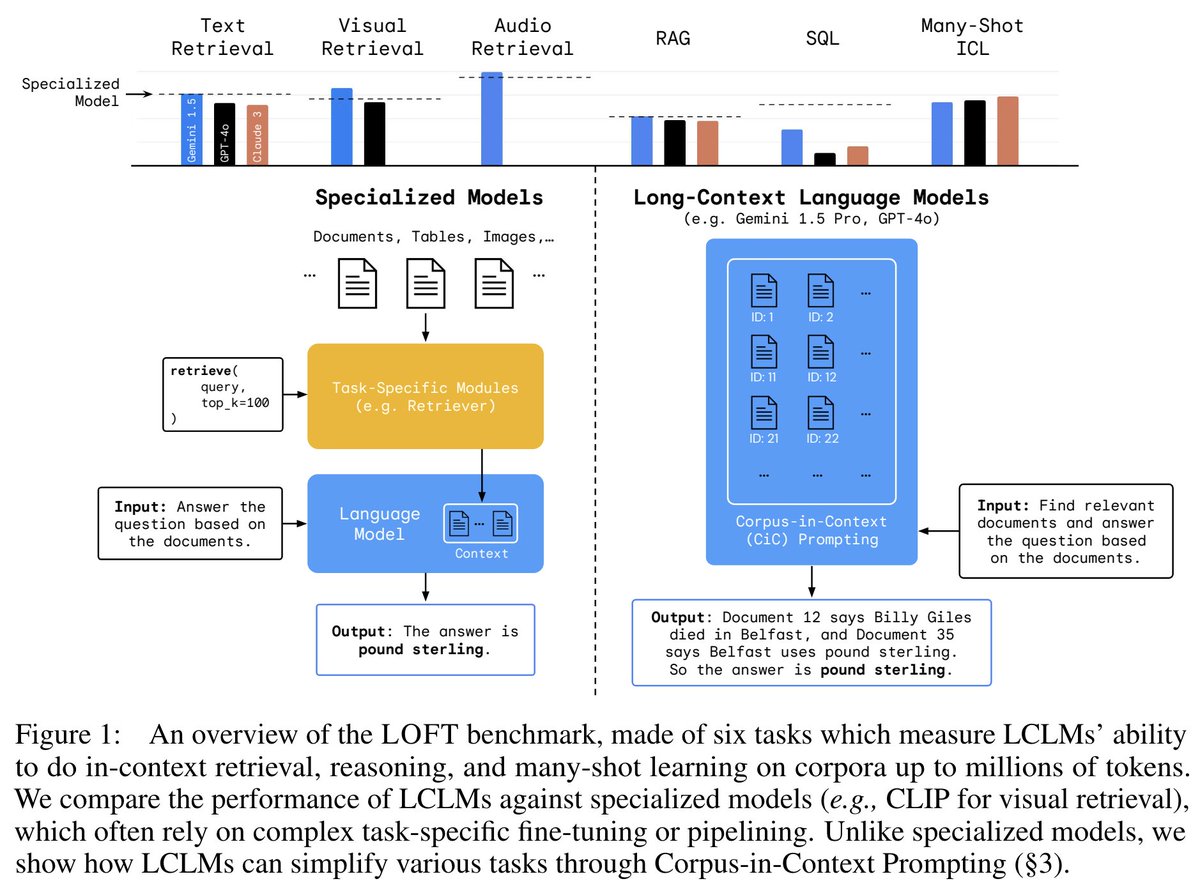

Lots of discourse around long-context language models (LCLMs) subsuming RAG and retrieval, but how close are we to this paradigm shift? Introducing LOFT, a 1-million-token benchmark spanning 6 tasks & 35 datasets to test LCLMs’ ability to do in-context retrieval & reasoning [1/10]

Can long-context language models (LCLMs) subsume retrieval, RAG, SQL, and more? Introducing LOFT: a benchmark stress-testing LCLMs on million-token tasks like retrieval, RAG, and SQL. Surprisingly, LCLMs rival specialized models trained for these tasks!

1

4

22

RT @lmarena_ai: BREAKING: Gemini 2.5 Pro is now #1 on the Arena leaderboard - the largest score jump ever (+40 pts vs Grok-3/GPT-4.5)! 🏆 T…

0

417

0

RT @GoogleDeepMind: Think you know Gemini? 🤔 Think again. Meet Gemini 2.5: our most intelligent model 💡 The first release is Pro Experimen…

0

518

0

GDM's work converting Gemini into a SOTA dual encoder is now out! SOTA results across all benchmarks including exceptionally strong coding performance. Check out the tech report for more details and some interesting ablations!

🎉 Gemini Embedding is LIVE! 🎉 Try our state-of-the-art text embedding model for FREE on Vertex AI (text-embedding-large-exp-03-07; 120 QPM) & AI Studio (gemini-embedding-exp-03-07)! ➡️ APIs: ➡️ Report:

0

0

6

RT @DrJimFan: This is the most gut-wrenching blog I've read, because it's so real and so close to heart. The author is no longer with us. I…

0

401

0

Thanks for sharing our paper!

Can Long-Context Language Models Subsume Retrieval, RAG, SQL, and More? Google DeepMind conducts a deep performance analysis of long-context LLMs on in-context retrieval and reasoning. They first present a benchmark with real-world tasks requiring 1M token context. Report

0

0

4

RT @ZhuyunDai: Thrilled to unveil LOFT, our latest research showing how long-context language models like Gemini can subsume retrieval, RAG…

0

9

0

RT @YiLuan9: Very happy to contribute to the Multimodal benchmarking on the LOFT project! Very excited to see with fewshot prompting only,…

0

4

0

RT @riedelcastro: "just put the corpus into the context"! Long context models can already match or beat various bespoke pipelines and inf…

0

17

0

RT @kelvin_guu: Do long-context LMs obsolete retrieval, RAG, SQL and more? Excited to share our answer! from the te…

0

12

0

RT @leejnhk: Can long-context language models (LCLMs) subsume retrieval, RAG, SQL, and more? Introducing LOFT: a benchmark stress-testing…

0

53

0

Work done with a great group of collaborators: @leejnhk @zhuyundai @ddua17 @devendr06654102 @michaelboratko @yiluan9 @seba1511 @vincentperot @siddalmia05 @hexiang_hu @xudong_lin_ai @icepasupat @amini_aida @jeremy_r_cole @riedelcastro @iftekharnaim @mchang21 @kelvin_guu [10/10]

0

0

2