Wesley Chang

@_WesChang

Followers

181

Following

73

Media

2

Statuses

17

PhD student @ucsd_cse. Computer graphics, rendering, and differentiable rendering.

San Diego, CA

Joined July 2022

In our #SIGGRAPH2025 work, we enable artist-level editing of hair reconstructed from video for the first time! We convert 3D strands into a procedural model controllable via guide strands and intuitive operators like curl and bend. 1/4 https://t.co/V26aBn3XEi (Code available!)

1

2

23

I’m excited to announce our #SIGGRAPHAsia2025 paper on making the rendering of Gaussian process implicit surfaces (GPIS) practical: https://t.co/N9Cxsx3Llw. We achieve this with a novel procedural noise formulation and by enabling next-event estimation for specular BRDFs. [1/7]

6

6

22

🌲Introducing our paper A generalizable light transport 3D embedding for Global Illumination https://t.co/qW04w1NdLU. Just as Transformers learn long-range relationships between words or pixels, our new paper shows they can also learn how light interacts and bounces around a 3D

4

33

125

If you’re at SIGGRAPH 2025 in Vancouver, join us Thu 2 PM for our talk “Generative Neural Materials”! We introduce a universal neural material model for bidirectional texture functions and a complementary generative pipeline. 1/2

1

5

19

I'll be presenting this at the conference on Wed Aug 13 in the Avatars session at 10:45am in West Building, Rooms 220-222. See you there! 4/4

0

0

1

Thanks to my amazing collaborators at UCSD and Meta Reality Labs: Andrew Russell, Stephane Grabli, Matt Chiang, Christophe Hery, Doug Roble, Ravi Ramamoorthi, @tzumaoli, and Olivier Maury. 3/4

1

0

1

This is done using clever optimization methods that exploit the random variations in hair. Our method does not require training data and is fully interpretable! We also show that converting to our grooms leads to better hair structure, enabling applications like simulation. 2/4

1

0

1

https://t.co/F1d85YqWGz Check out Wesley, Xuanda, and Yash's latest work on combining cross bilateral filtering and Adam to make optimization in graphics faster and more robust by better preconditioning the gradient.

0

15

89

What a big, pleasant surprise. Free to share: the $1M secret to getting a best paper award is to have Iliyan present the paper. @EGSympRendering @iliyang. Congrats to @tzumaoli, Trevor and Ravi.

7

14

94

Better late than never! I finally recorded and uploaded my short presentation of our SIGGRAPH "2023" paper, A Practical Walk-on-Boundary Method for Boundary Value Problems, on YouTube.

1

5

34

I hate when my images look blurry when I zoom them in. If you're like me, check out our #SIGGRAPHAsia2023 paper on a method that allows infinite zooming with razor-sharp discontinuities. https://t.co/1Dh4Iwj8ex

iliyan.com

Neural image representations offer the possibility of high fidelity, compact storage, and resolution-independent accuracy, providing an attractive alternative to traditional pixel- and grid-based...

0

22

133

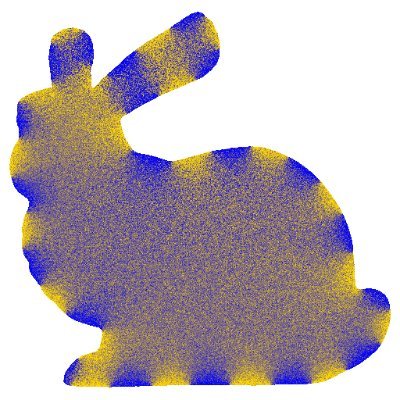

Check out our #SIGGRAPH2023 paper “Fluid Cohomology” 🌊🐇🍩 We show that, despite its applications, the current formulation of vorticity-streamfunction is insufficient for simulating fluids on non-simply-connected domains. [1/6] https://t.co/OVRWsEXYqk

1

13

57

The code for our EGSR 2023 paper, Personalized Video Prior for Editable Dynamic Portraits using StyleGAN, has been released! It allows you to create a personalized dynamic portrait from a single video! Project page: https://t.co/EFOUO1BeB2 Github: https://t.co/aY4QjaHEU5

2

7

21

Thanks to my amazing advisors and collaborators: @tzumaoli, Ravi Ramamoorthi, Toshiya Hachisuka, @DerekRenderling, and Venkataram Sivaram! 3/3

0

0

9

This allows us to more efficiently recover texture parameters of the complex Disney BRDF. We also propose an extension to resampled importance sampling, which allows it to sample arbitrary real-valued functions, broadening its applicability outside of rendering. 2/3

1

0

8

Excited to share our #SIGGRAPH2023 work on accelerating inverse rendering with ReSTIR. Since we render a sequence of frames during optimization, we can reuse samples from previous frames, just like in real-time rendering. 1/3 https://t.co/rSKNwHy4Mp

2

37

173