Xingwei Tan

@Xingwei__Tan

Joined October 2024

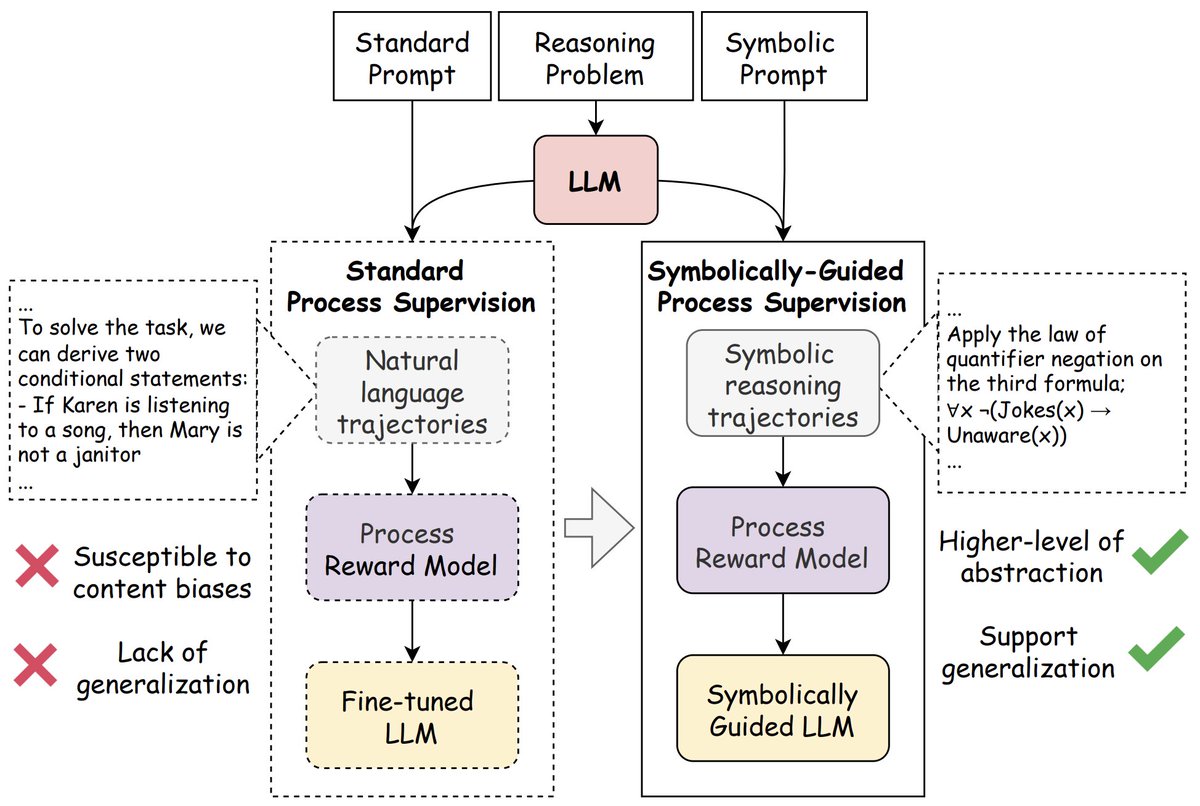

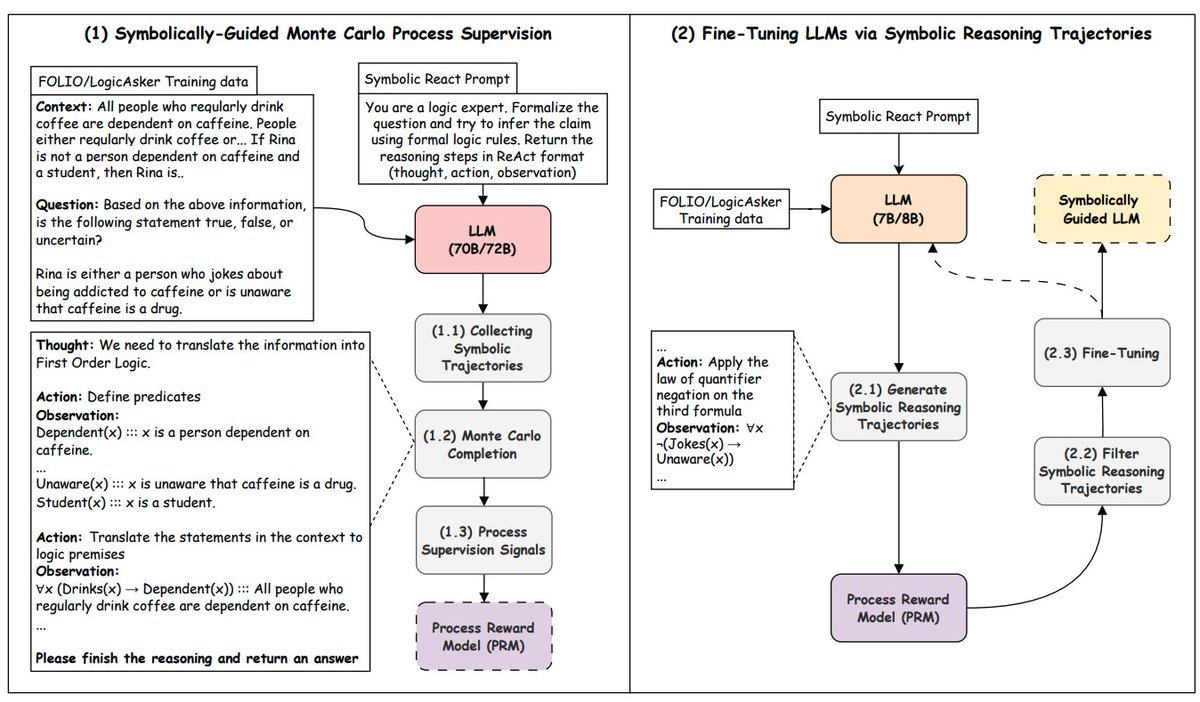

📃 Enhancing Logical Reasoning in Language Models via Symbolically-Guided Monte Carlo Process Supervision. 🌐 @akhter_elahi .@xrysoflhs .@nikaletras.

Multi-step reasoning #LLMs are often fine-tuned on trajectories filtered by process reward models (PRMs), which are themselves trained automatically on pseudo labels generated by Monte Carlo estimation.
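
To make the Monte Carlo labelling idea concrete, here is a minimal sketch, not the method from the paper: each reasoning step gets a soft pseudo label equal to the fraction of sampled continuations from that step that end in a correct answer. The names `mc_step_labels` and `toy_rollout` are hypothetical, and `toy_rollout` is only a stand-in for sampling a completion from an LLM and checking its final answer.

```python
import random
from typing import Callable, List


def mc_step_labels(
    steps: List[str],
    rollout: Callable[[List[str], random.Random], bool],
    n_rollouts: int = 8,
    seed: int = 0,
) -> List[float]:
    """Estimate a soft pseudo label for every step of one trajectory.

    The label for step i is the share of rollouts, started from the
    prefix steps[:i+1], that reach a correct final answer.
    """
    rng = random.Random(seed)
    labels = []
    for i in range(len(steps)):
        prefix = steps[: i + 1]
        wins = sum(rollout(prefix, rng) for _ in range(n_rollouts))
        labels.append(wins / n_rollouts)
    return labels


def toy_rollout(prefix: List[str], rng: random.Random) -> bool:
    """Hypothetical stand-in for an LLM rollout plus answer check."""
    # Assumption for illustration: a prefix containing a flawed step
    # can never be completed into a correct answer.
    if any("wrong" in step for step in prefix):
        return False
    # Otherwise the simulated completion succeeds most of the time.
    return rng.random() < 0.9


labels = mc_step_labels(["set up equation", "wrong algebra", "report answer"], toy_rollout)
print(labels)
```

In a pipeline of the kind described above, labels like these would serve as training targets for a PRM, and the trained PRM would then score and filter trajectories before fine-tuning.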

If you are attending #NAACL2025 in person, you are welcome to check out our Safespeech DEMO in Hall 3 (9:00-10:30).

arxiv.org

Detecting toxic language, including sexism, harassment and abusive behaviour, remains a critical challenge, particularly in its subtle and context-dependent forms. Existing approaches largely focus...

You are welcome to join our oral talk on "Cascading Large Language Models for Salient Event Graph Generation", starting at 14:00 on Thursday in the Ruidoso room at the Albuquerque Convention Center. #NAACL2025

arxiv.org

Generating event graphs from long documents is challenging due to the inherent complexity of multiple tasks involved such as detecting events, identifying their relationships, and reconciling...

In partnership with the UK Forensic Network, we have developed a system that analyzes conversational data and identifies instances of harmful speech, with a specific focus on Violence Against Women and Girls (VAWG) content. Check out our #naacl2025 demo.
