Wang Magic

@WangMagic_

Followers: 605
Following: 123
Media: 3
Statuses: 74

Google said Gemini 2.5 Deep Think uses parallel thinking, and we have just publicly released this core technology.

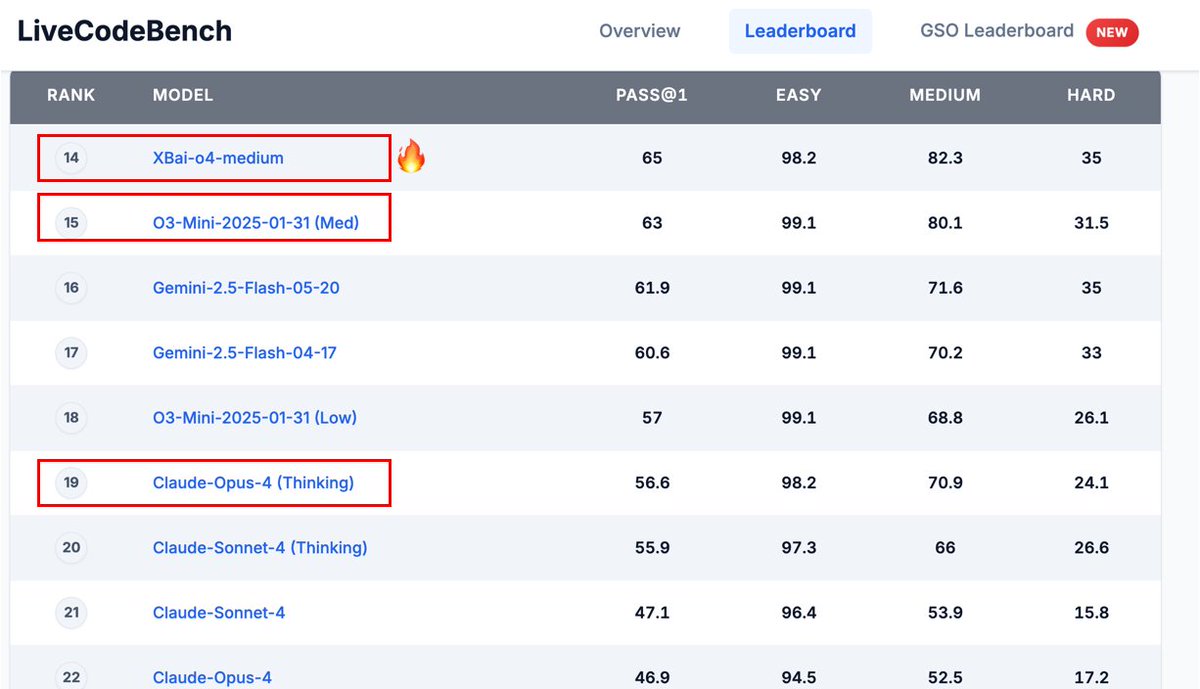

🚀 Introducing XBai o4: a milestone in our 4th-generation open-source technology based on parallel test-time scaling! In its medium mode, XBai o4 now fully outperforms OpenAI o3-mini. 📈
🔗 Open-source weights:
GitHub link:

RT @AashiDutt: 🚀 Just published: “XBai o4: A Guide With Demo Project”. Walks you through locally deploying @theMetaStoneAI's new reasoning…

Big move.

@WankyuChoi Try out XBai o4 ("high" setting). Although it is smaller, it outperforms GLM-4.5, a model more than 10x its size, on the FOMO riddle. Test-time scaling (TTS) works.

Make the community move!

gpt-oss is a big deal; it is a state-of-the-art open-weights reasoning model, with strong real-world performance comparable to o4-mini, that you can run locally on your own computer (or on your phone, with the smaller size). We believe this is the best and most usable open model in the…

Thanks, Ivan! Anyone can now run XBai o4 this way.

XBai o4 is ready to go on MLX in 4-, 5-, 6-, and 8-bit quantization formats. DWQ is cooking. I love the thinking trace of this model. M3 Ultra (80 cores): 30.9 tokens/sec. M4 Max (40 cores): 23.5 tokens/sec. Some context-size tests and MMLU-Pro benchmarks are in the queue.

We recently collected some strong examples of XBai o4 in action. The three most-liked comments on this post will each receive 100 million tokens of XBai o4 API credit.

XBai o4 has open-sourced the core technology behind Gemini 2.5 Deep Think: “parallel thinking” 🔥 @GoogleDeepMind

RT @TheTuringPost: 7 notable AI models to pay attention to: ▪️ Kimi K2 ▪️ Gemini 2.5 ▪️ Grok 4 ▪️ Voxtral ▪️ MirageLSD ▪️ LTX-Video ▪️ Met…

RT @_akhaliq: MetaStone-S1 just dropped on Hugging Face. Test-Time Scaling with Reflective Generative Model. Experiments demonstrate that M…
