Vlad Chituc is on bsky

@VladChituc

Followers

3K

Following

139K

Media

338

Statuses

3K

Yale Psych postdoc studying morality, happiness, and subjective magnitude.

New Haven, CT

Joined September 2009

Hi friends! I'm finally feeling well enough to share some news that I've been very excited about: earlier this week, my kidney was removed through a small cut in my stomach, tucked into a box, flown down to Johns Hopkins, and implanted into a stranger, where it's working great.

.@realDonaldTrump: “If Hillary Clinton can’t satisfy her husband what makes her think she can satisfy America?” http://t.co/i0mrNDvlTy

UPenn's MindCORE Postdoctoral Fellowship is accepting applications (due Dec 1)! https://t.co/hX34aZHAQy Funding is available for 3 years. Fellows receive ~$70k in salary, $20k in a research/travel budget, assistance in relocation (e.g., $5k), and benefits.

New paper now out in Physics Reports! If you're interested in information theory, complex systems, and emergent properties, this is the paper for you: a comprehensive review designed to bring anyone in complex systems science up to speed on information theory.

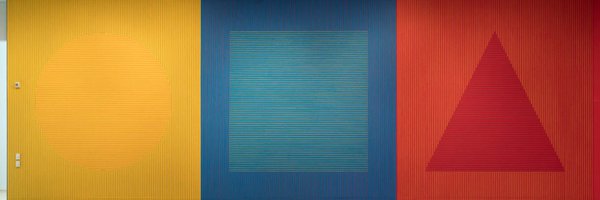

Thrilled to announce a new paper out this weekend in @CognitionJourn. Moral psychologists almost always use self-report scales to study moral judgment. But there's a problem: the meaning of these scales is inherently relative. A 2 min demo (and a short thread): 1/7

"How to show that a cruel prank is worse than a war crime: Shifting scales and missing benchmarks in the study of moral judgment" 📢New paper from: @VladChituc, @mollycrockett, & Brian Scholl https://t.co/ty1I2pjlPn

Bonus: these scenarios are obviously exaggerated, and it's not clear how widespread these problems are in the actual moral psych literature. But if you're interested in using these methods (or would like to be involved in a large-scale replication project) please reach out!

I love this project, and I'm thrilled that it's finally out (it's been YEARS in the making). You can read the paper at the following link for the next month or so, and for free on my site anytime after that. Journal: https://t.co/3nyUipmImM Preprint: https://t.co/SJXrHfYuFv 7/7

We should hope that the measures used by our discipline can reveal something as obvious as the fact that a college prank (however cruel) is not nearly as bad as murder. But only one method consistently does this: magnitude estimation. 6/7

So while self-report ratings only show that a war crime is worse than a prank when scenarios are presented together (or within-subjects), you get the obvious difference with magnitude estimation whether you're rating the scenarios together or apart (between-subjects). 5/7

So how can we avoid this? Use a psychophysical method called "magnitude estimation" (where you make ratio judgments in reference to a benchmark stimulus): e.g. if stealing a wallet is a 10, then something twice as bad would be a 20, half as bad would be a 5, and so on. 4/7
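
A minimal sketch of the ratio arithmetic behind magnitude estimation, assuming the benchmark act (stealing a wallet) is pinned at 10 as in the tweet. The function names are hypothetical, and pooling raters with a geometric mean is an illustrative assumption (a common choice for ratio judgments), not necessarily the analysis used in the paper.

```python
import math

# Assumption: the benchmark act (stealing a wallet) is assigned the value 10.
BENCHMARK_VALUE = 10.0


def ratio_to_benchmark(rating: float, benchmark: float = BENCHMARK_VALUE) -> float:
    """Hypothetical helper: how many times as bad as the benchmark an act is judged."""
    if rating <= 0 or benchmark <= 0:
        raise ValueError("magnitude estimates must be positive")
    return rating / benchmark


def geometric_mean(values: list[float]) -> float:
    """Hypothetical helper: pool ratio judgments across raters on a log scale."""
    if not values:
        raise ValueError("need at least one value")
    return math.exp(sum(math.log(v) for v in values) / len(values))


print(ratio_to_benchmark(20))  # 2.0 -> twice as bad as stealing a wallet
print(ratio_to_benchmark(5))   # 0.5 -> half as bad
```

Averaging on a log scale keeps a single rater's very large number from dominating the pooled estimate, which suits judgments that are meant to be read as ratios.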

This is obvious for everyday adjectives—if I say I have a small house and a big dog, you know I'm not saying that the latter is larger than the former. And the same holds true for immorality. (Also: this project has my favorite joke that I've ever snuck into a paper). 3/7

To state the obvious: college students don't really think a cruel prank is worse than an internationally recognized war crime. Instead, the words "very immoral" just mean very different things in these very different contexts. 2/7

If you want to know whether AI models are smart, you shouldn't be looking at the kinds of things that only smart people can do well—chess or Go or logical proofs. You should look at the things that even the dumbest people can *still* do well. https://t.co/UZHEhsJm6I

I regret to inform you that I started reading Yudkowsky's book

A really funny and ironic thing about AI researchers is that they fundamentally do not even kind of understand scaling laws. If they did, they wouldn't be shocked that LLMs hit a wall, nor would they see "superintelligence" as anything but wildly implausible sci-fi bullshit.

Vegeta didn't turn super saiyan until 33. It's not over for you unc keep grinding

So can our minds get that much faster? Maybe, but I see no reason to think it's likely or inevitable. But more importantly: if I were trying to warn of an AI apocalypse, I would spend more time explaining how it could work, and less time on paperclips and bombing data centers...

Concretely, the human brain is about 1200 cubic cm. To 10x our speed, you'd have to fit all of that computational power (with 10x the energy) into *roughly 1 cubic cm* (1200 / 1000 = 1.2). So you have to pump more and more energy into a smaller and smaller space to get diminishing returns on speed.
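
A back-of-the-envelope sketch of the concrete numbers, assuming, as the thread does, that a 10x speedup means shrinking every length 10x while supplying 10x the power. The heat-flux line is an added idealization (heat leaves only through the outer surface, which scales with length squared); the constant names are hypothetical.

```python
BRAIN_VOLUME_CM3 = 1200.0  # approximate brain volume, as stated in the thread
SPEEDUP = 10.0             # desired speedup in firing rate / signal timing

# Shrinking every length by SPEEDUP shrinks volume by SPEEDUP**3.
volume_cm3 = BRAIN_VOLUME_CM3 / SPEEDUP ** 3

# Assumption from the thread: power rises by the same factor as speed.
power_factor = SPEEDUP

# More power packed into a volume that is SPEEDUP**3 times smaller.
power_density_factor = power_factor * SPEEDUP ** 3

# Idealization: heat is shed through a surface that is SPEEDUP**2 times smaller.
heat_flux_factor = power_factor * SPEEDUP ** 2

print(round(volume_cm3, 2))  # 1.2
print(power_density_factor)  # 10000.0
print(heat_flux_factor)      # 1000.0
```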

And unless you can get our dendrites to send signals faster, that means the distance between neurons has to get 10x smaller to fire 10x faster. And because volume scales with the cube of length, your brain would have to get 1000x smaller. And because heat dissipation is a function of surface area...
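
A minimal sketch of the timing and geometry argument in this thread, under idealized assumptions: signal delay is distance divided by a fixed conduction speed, and the brain is treated as a uniformly shrunk solid. The function names and the 0.1 m / 10 m/s figures are hypothetical values chosen only for illustration.

```python
def signal_delay(distance_m: float, speed_m_per_s: float) -> float:
    """Time for a signal to cover a distance at a fixed conduction speed."""
    return distance_m / speed_m_per_s


def shrink_factors(linear_factor: float) -> dict[str, float]:
    """How much smaller each quantity gets when every length shrinks by linear_factor."""
    return {
        "length": linear_factor,
        "area": linear_factor ** 2,    # surface area scales with length squared
        "volume": linear_factor ** 3,  # volume scales with length cubed
    }


# With conduction speed held fixed, a 10x shorter path gives a 10x shorter delay.
# Hypothetical numbers: 0.1 m versus 0.01 m, both at 10 m/s.
base = signal_delay(0.1, 10.0)
shrunk = signal_delay(0.01, 10.0)
print(base / shrunk)

print(shrink_factors(10))  # {'length': 10, 'area': 100, 'volume': 1000}
```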

The thing is, you can't speed up our brains that much, and not even because of biology per se (neurons rather than transistors or whatever). To 10x our firing rate, you also need to 10x the speed at which our neurons communicate with one another; otherwise the timing gets fucked.
