Neural network potentials can make these predictions thousands of times faster than is possible by directly solving the Schrödinger equation.

OK, so what is a neural network potential, concretely? It's just a very flexible function with many adjustable parameters that you fit to the 'potential energy surface'. This is just the energy as a function of the positions of the atoms in your system. 1/n

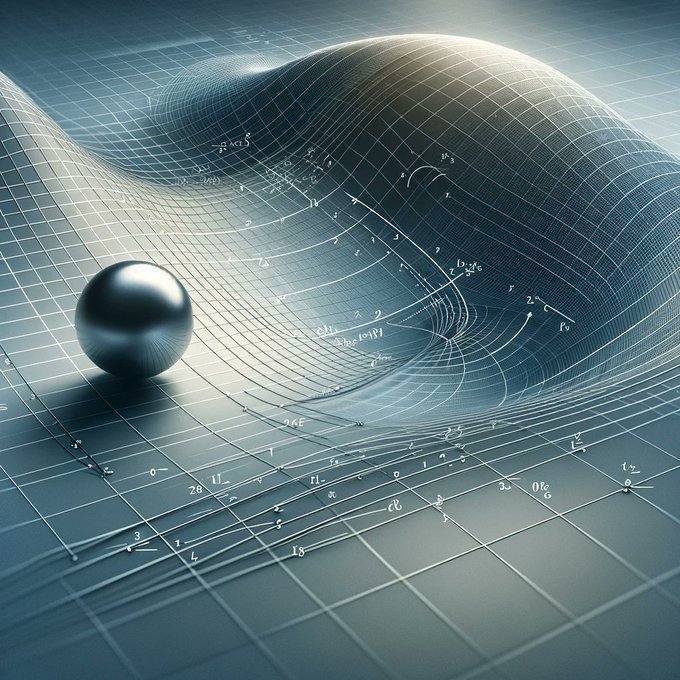

For a single particle in 2D you can visualise this concretely as a real surface where the height corresponds to the potential energy. Mostly, though, we care about very high-dimensional versions of this, where you have many particles, so you have:

Typically we don't care about gravity; instead we care about the quantum mechanical interactions of the atoms. This means we solve Schrödinger's equation to calculate this energy (and its gradient) for a given arrangement of atoms.

This can be done to an accuracy well below thermal noise for many small systems today, but the problem is that it's slow. Here NNPs come to the rescue: they predict E from the positions of all the atoms much faster. We train them on examples where we have already solved Schrödinger's equation.
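
To make the "train on solved examples" idea concrete, here is a minimal sketch, not how production NNPs are built. Everything in it is an assumption for illustration: a Morse potential stands in for the expensive quantum calculation, the system is a single interatomic distance `r`, and only the output weights of a tiny one-hidden-layer tanh network are trained (real NNPs train all layers on many-atom configurations).

```python
import math
import random

def reference_energy(r):
    """Stand-in for an expensive quantum calculation: a Morse potential (assumed)."""
    return (1.0 - math.exp(-(r - 1.0))) ** 2

random.seed(0)
HIDDEN = 8
# Fixed random hidden layer; only the output weights are trained (a simplification).
hidden = [(random.uniform(-2.0, 2.0), random.uniform(-2.0, 2.0)) for _ in range(HIDDEN)]

def features(r):
    """Hidden-layer activations for distance r, plus a constant bias feature."""
    return [math.tanh(a * r + b) for a, b in hidden] + [1.0]

def predict(weights, r):
    """Predicted energy: weighted sum of the hidden activations."""
    return sum(w * f for w, f in zip(weights, features(r)))

def mean_squared_error(weights, data):
    return sum((predict(weights, r) - e) ** 2 for r, e in data) / len(data)

def train(data, steps=2000, learning_rate=0.05):
    """Full-batch gradient descent on the mean squared energy error."""
    weights = [0.0] * (HIDDEN + 1)
    for _ in range(steps):
        grad = [0.0] * len(weights)
        for r, e in data:
            phi = features(r)
            err = sum(w * f for w, f in zip(weights, phi)) - e
            for k, f in enumerate(phi):
                grad[k] += 2.0 * err * f / len(data)
        weights = [w - learning_rate * g for w, g in zip(weights, grad)]
    return weights

# "Previously solved" examples: distances paired with reference energies.
training_data = [(0.6 + 0.1 * i, reference_energy(0.6 + 0.1 * i)) for i in range(20)]
weights = train(training_data)
```

Once trained, `predict(weights, r)` costs a handful of multiplications, which is the whole point: the slow reference calculation is only needed to build the training set.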

This is really important because the energy is so fundamental. It determines the probability of observing particles in a given arrangement, and its gradient is the negative of the force, so it can tell you how the particles move too.
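
Both uses of the energy can be sketched in a few lines. This assumes a toy 1D double-well energy (hypothetical, in kcal/mol) rather than a real system: the relative probability of two arrangements follows the Boltzmann factor exp(-ΔE/kT), and the force is the negative derivative of the energy, estimated here by finite differences.

```python
import math

K_B = 0.0019872041  # Boltzmann constant in kcal/(mol K)

def energy(x):
    """Toy double-well potential energy (hypothetical), minima at x = -1 and x = +1."""
    return (x ** 2 - 1.0) ** 2

def force(x, h=1e-5):
    """Force = -dE/dx, estimated with a central finite difference."""
    return -(energy(x + h) - energy(x - h)) / (2.0 * h)

def boltzmann_ratio(x_a, x_b, temperature=300.0):
    """Relative probability p(x_a) / p(x_b) = exp(-(E_a - E_b) / kT)."""
    return math.exp(-(energy(x_a) - energy(x_b)) / (K_B * temperature))
```

At a minimum such as `x = 1.0` the force vanishes, and `boltzmann_ratio(1.0, 0.0)` is greater than one because the bottom of the well is more probable than the top of the barrier.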

NNPs are a particular class of machine learning potential. There are many types, but a particularly important recent breakthrough has been equivariance, which greatly reduces the amount of training data needed. (AlphaFold2 used this idea.)

Equivariance is very cool and draws on some deep mathematics that has already revolutionised theoretical physics. But the intuition is just to use neural networks that can keep track of, and compare, directions, not just raw numbers.
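
The symmetry itself is easy to check numerically. The sketch below is not an equivariant neural network; it is a toy 2D pair potential (assumed for illustration) that shows the property such networks are built to respect: rotating all the atoms leaves the energy unchanged (invariance), and the forces rotate along with the atoms (equivariance).

```python
import math

def pair_energy(positions):
    """Toy energy: sum over pairs of (r - 1)^2, so it depends only on distances."""
    total = 0.0
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            r = math.hypot(positions[i][0] - positions[j][0],
                           positions[i][1] - positions[j][1])
            total += (r - 1.0) ** 2
    return total

def pair_forces(positions):
    """Analytic forces F_i = -dE/dr_i for the toy energy above."""
    out = [[0.0, 0.0] for _ in positions]
    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            dx = positions[i][0] - positions[j][0]
            dy = positions[i][1] - positions[j][1]
            r = math.hypot(dx, dy)
            scale = -2.0 * (r - 1.0) / r  # -dE/dr divided by r
            out[i][0] += scale * dx
            out[i][1] += scale * dy
            out[j][0] -= scale * dx
            out[j][1] -= scale * dy
    return out

def rotate(vectors, angle):
    """Rotate a list of 2D vectors counter-clockwise by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c * x - s * y, s * x + c * y] for x, y in vectors]

atoms = [[0.0, 0.0], [1.5, 0.0], [0.3, 2.0]]
rotated_atoms = rotate(atoms, 0.7)
```

Here `pair_energy(rotated_atoms)` matches `pair_energy(atoms)`, and `pair_forces(rotated_atoms)` matches `rotate(pair_forces(atoms), 0.7)` up to rounding. A model with this built in does not have to learn it from rotated copies of the training data.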

Predicting energies from coordinates is much more general than just simulating atoms directly, though. We often want to ignore parts of the system (marginalise), and this means we want to calculate free energies, which again determine the probabilities of particular arrangements.

This is an essentially identical problem, but it can work for much bigger particles. You just train the NNP to learn the average forces on a subset of your particles. (Free energies are determined by the average forces, which is a very nice stat mech trick.)
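
The stat mech trick can be sketched along a single coordinate: the free energy profile (potential of mean force) is the negative integral of the average force. The example assumes the average forces are already known on a grid; here they come from a made-up harmonic form, where in practice they would be averaged from simulation or predicted by a trained model.

```python
def potential_of_mean_force(xs, mean_forces):
    """Integrate -<f>(x) along the coordinate with the trapezoid rule.

    Returns the free energy at each grid point relative to the first one.
    """
    pmf = [0.0]
    for k in range(1, len(xs)):
        dx = xs[k] - xs[k - 1]
        pmf.append(pmf[-1] - 0.5 * (mean_forces[k] + mean_forces[k - 1]) * dx)
    return pmf

# Hypothetical average forces on a grid: <f>(x) = -2x, a harmonic restoring force.
grid = [0.1 * i for i in range(11)]
average_forces = [-2.0 * x for x in grid]
free_energy = potential_of_mean_force(grid, average_forces)
```

For this toy input the recovered profile is the parabola x², so `free_energy[-1]` is 1.0 at x = 1.0 up to rounding.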

@TimothyDuignan

Any insight on why this is the case? Especially re the NN part: is the particular inductive bias of NNs unreasonably effective, or does one observe analogous speedups by other means?
