Tao Meng

@TaoMeng10

Followers: 179 · Following: 40 · Media: 2 · Statuses: 22

PhD @UCLANLP. NLP & ML research focus: injecting constraints into NLP/ML models https://t.co/mjHcc1ouiV

Los Angeles

Joined May 2019

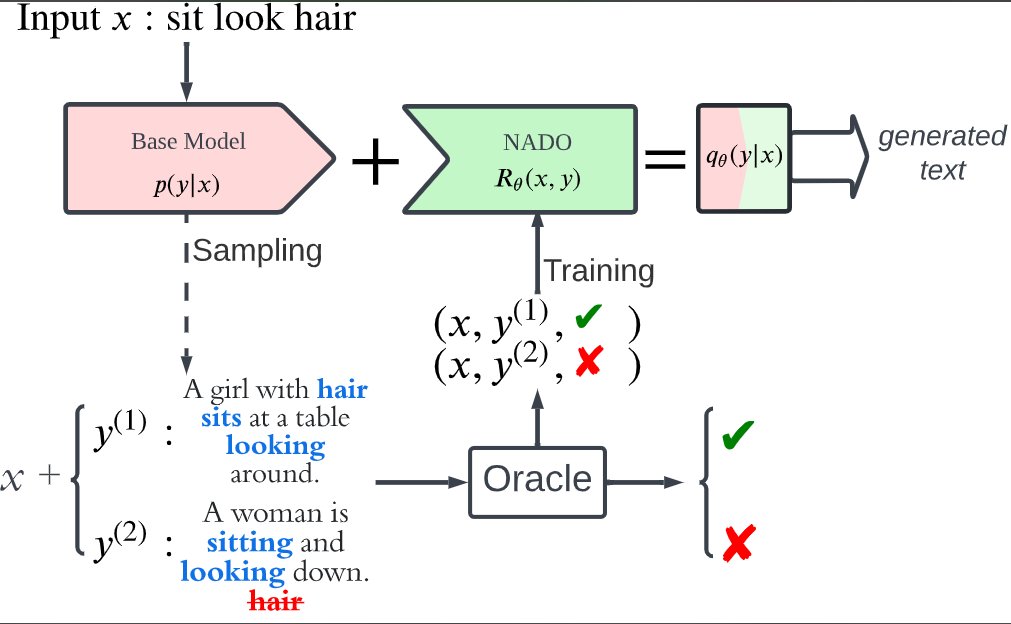

Happy to share our work on Controllable Text Generation with NeurAlly-Decomposed Oracle (NADO), accepted at #Neurips2022! Looks like our model is as excited about NeurIPS as we are. See more in

RT @HonghuaZhang2: Reliable control of large language models is a crucial problem. We propose GeLaTo (Generating Language with Tractable Co….

arxiv.org

Despite the success of autoregressive large language models in text generation, it remains a major challenge to generate text that satisfies complex constraints: sampling from the conditional...

RT @kaiwei_chang: ACL/ICML highlights threats of LMs like ChatGPT generating paper content. However, I'm more concerned about reviews. Exam….

Welcome to our controllable text generation poster this afternoon!

Dear friends at #NeurIPS2022, #PlusLab_UCLANLP will be presenting three papers at the main conference and one at the ENLSP workshop. This is a thread of some details about our presentations. My students and I are looking forward to seeing you at NOLA! (1/5).

RT @sidilu_pluslab: It's an honor of mine to share our work InsNet! We propose a unified, efficient, and powerful framework for both sequen….

RT @VioletNPeng: I’m super excited to share several fresh off the press works on controllable (creative) generation this Thursday at Stanfo….

The code to reproduce the results has been released. Special thanks to my collaborators Sidi Lu, Nanyun Peng and Kai-Wei Chang! @sidilu_pluslab @VioletNPeng @kaiwei_chang

github.com

Constrained Decoding Project (MtSomeThree/constrDecoding).

RT @LiLiunian: Happy to share our work on Object Detection in the Wild through Grounded Language Image Pre-training (GLIP) (Oral at #CVPR20….

RT @HonghuaZhang2: Can language models learn to reason by end-to-end training? We show that near-perfect test accuracy is deceiving: instea….

arxiv.org

Logical reasoning is needed in a wide range of NLP tasks. Can a BERT model be trained end-to-end to solve logical reasoning problems presented in natural language? We attempt to answer this...

RT @uclanlp: UCLA Chang's (@kaiwei_chang ) and Plus lab (@VioletNPeng) will present papers and a tutorial on topics including Fairness & Ro….

RT @natarajan_prem: Thrilled to participate in the launch event of the Science Hub at UCLA. Looking forward to the many advances that this….

In addition, we provide a theoretical analysis of the tightness of the polytopes and the reliability of the mined constraints. Paper and code: see the link below. 4/4

web.cs.ucla.edu

An Integer Linear Programming Framework for Mining Constraints from Data. Tao Meng and Kai-Wei Chang, in ICML,...

Can a model learn problem structure/constraints from data? E.g., given pairs of adjacency matrices and their corresponding minimum spanning trees, can a model learn to solve MST? Check out our #ICML2021 paper on constraint mining with ILP w/ @kaiwei_chang 1/4

RT @kaiwei_chang: UCLA-NLP will present at #acl2020nlp. 👇Check out our ACL/TACL papers and pre-recorded talks at.Lo….

RT @kaiwei_chang: The problem is much more beyond data bias. ML systems can be poorly calibrated ( and bias in the….
