Stuart Reid

@StuartReid1929

Followers

3K

Following

8K

Media

825

Statuses

7K

CEO @NosibleAI - Search, rebuilt for AI

South Africa

Joined June 2013

Web search is holding back AI 👇🏻 Web search costs two orders of magnitude too much and is holding back large-scale AI adoption in enterprises. Here's a story that illustrates this. An agentic risk analyst agent does 100 searches a day to find lawsuits, cyber-attacks, recalls,

2

2

10

@jeremyphoward You can do something like fd = os.memfd_create(name) os.ftruncate(fd, size) and then either share fd with your child process e.g. via subprocess.Popen(pass_fds=) or you mmap it which multiprocessing can deserialize to the same region. The kernel refcounts the fd like a file.

12

11

164

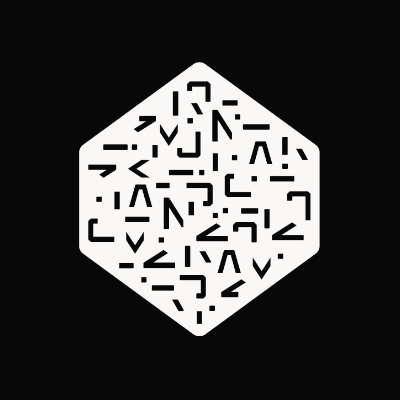

Why would they choose such a non-random pattern? Could this be a bias in the generation method?

Some crazy people on Reddit managed to extract the "SynthID" watermark that Nano Banana applies to every image. It's possible to make the watermark visible by oversaturating the generated images. This is the Google SynthID watermark:

0

0

1

I tuned into this and it was great. The case was made that one should focus on representations that understand the content in your index in the same way that you understand the user query. If you can get that part right everything downstream is an alignment problem. This doesn't

Ranking is rank. Query Understanding is Quool. Come Hang with Tunkelang! Friday, 2PM ET https://t.co/JkwhBBy450

0

1

2

Using 40x L40S to rerank 1.25 billion query document pairs with qwen 3 then distilling 250,000 shard level xgboost rerankers 🔥

1

0

0

This code got messy. So I turned it into a Pydantic class with input validation and attribute setters. Now I'm thinking about how to "trigger" setters. Put differently. Each API -- Google Knowledge Graph, WikiData, NOSIBLE Search, Gleif Search, Open Corporates -- might add a

🙋♂️ I volunteer. I am building a dataset of every company that ever made it to Wikipedia including symbology (where applicable) and corporate actions mined using search. I already have: - Company name - Public / private - Active / Inactive - GICS classification - Geographic

2

0

0

Found another great SciFi series - Planetside. It follows a military investigator who is sent to a planet to investigate a disappearance. MIlitary SciFi meets Myster. Epic!

audible.co.uk

Check out this great listen on Audible.com. A seasoned military officer uncovers a deadly conspiracy on a distant, war-torn planet… War heroes aren't usually called out of semi-retirement and sent to...

0

0

1

Startups are hard, I need your help! 🙏🏻 This year we found our niche: large firms who want to monitor the world at unprecedented scale. E.g. One customer does 2Mn searches a day looking for cyber-attacks, lawsuits, product recalls, and other material events over thousands of

6

5

15

Reinforcement Learning (RL) has long been the dominant method for fine-tuning, powering many state-of-the-art LLMs. Methods like PPO and GRPO explore in action space. But can we instead explore directly in parameter space? YES we can. We propose a scalable framework for

88

386

3K

🙋♂️ I volunteer. I am building a dataset of every company that ever made it to Wikipedia including symbology (where applicable) and corporate actions mined using search. I already have: - Company name - Public / private - Active / Inactive - GICS classification - Geographic

How, after all these years, is it still so hard to find survivorship bias free securities master files with all security identifiers and previous company names? LLMs are smart enough to mine these datasets!

0

0

9

🤡 "We are entering the post-retrieval age. The winners will not be the ones who maintain the biggest vector databases, but the ones who design the smartest agents to traverse abundant context and connect meaning across documents. In hindsight, RAG will look like training

I wrote 6 months ago that RAG might be dead. That was after an aha moment with Gemini’s 1M context window - running 200+ page docs through it and being impressed with the accuracy. But I didn’t have skin in the game. @nicbstme does. He just published an excellent piece: "The

0

0

4

How, after all these years, is it still so hard to find survivorship bias free securities master files with all security identifiers and previous company names? LLMs are smart enough to mine these datasets!

0

0

1

One of the young engineers in my team admitted to me today that he has stopped using AI because he wasn't learning anything. I can respect that. You can't learn if you delegate your thinking.

2

0

7

Given the cost vs. performance of grok-4-fast I will be doing a LOT more RADEs (Retrieval Augmented Data Extractions) on NOSIBLE.

0

0

1

Cool paper by MongoDB. They show that training a small (22m) encoder model with a larger model as static teacher (so just embeddings) works really well. Because the small model is aligned to the teacher, it can be run asymmetrically (i.e., teacher -> index, small -> query)

1

1

7

New post! I discuss static late interaction models, and show that these enable a very efficient reformulation that leads to a sparse, BM25-like model. This makes me doubt that static late interaction is a good idea. I also formulate some ideas for future research. Link below!

3

4

24