Sierra Team

@Sierra_ML_Lab

444 Followers · 45 Following · 2 Media · 64 Statuses

Twitter account of the Sierra project-team @Inria_Paris, @CNRS, @ENS_ULM #MachineLearning & #Optimization

Paris

Joined March 2021

As the SIERRA team of @inria_paris, we will be presenting 11 papers at @NeurIPSConf 2021, along with a Test of Time Award! We are also very happy to mention that one of our papers received an Outstanding Paper Award! See the thread below for details, and join us at the posters if you're interested! 1/13

What are good optimizers for diffusion models? 🍂 TLDR: Muon and SOAP are very good. Paper: https://t.co/TYqRpfcu5t

Bravo Etienne!

I am honored to be a 2025 Google PhD Fellow in Machine Learning & ML Foundations 🥳 Grateful to @Googleorg for their incredible support, my advisors @BachFrancis & Michael I. Jordan, and my lab at @Inria & @ENS_ULM for fostering a space to think deeply.

🎉 We're excited to announce the 2025 Google PhD Fellows! @GoogleOrg is providing over $10 million to support 255 PhD students across 35 countries, fostering the next generation of research talent to strengthen the global scientific landscape. Read more: https://t.co/0Pvuv6hsgP

Not all scaling laws are nice power laws. This month’s blog post: Zipf’s law in next-token prediction and why Adam (ok, sign descent) scales better to large vocab sizes than gradient descent: https://t.co/uoy5GPrZek

Tired of lengthy computations to derive scaling laws? This post is made for you: discover the sharpness of the z-transform! https://t.co/k1CJu0CVoR

Happy to have our recent papers on conformal prediction with e-values presented at COLT by my advisor @BachFrancis! Full details here: 📚 https://t.co/exKCl7g5Pf 📚 https://t.co/YFtmxeVTtN

#COLT2025

0

7

66

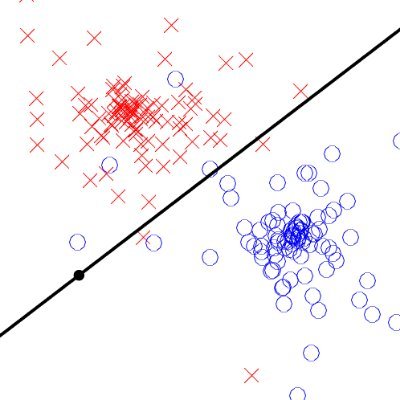

For good probability predictions, you should use post-hoc calibration. With @Eugene_Berta, Michael Jordan, and @BachFrancis we argue that early stopping and tuning should account for this! Using the loss after post-hoc calibration often avoids premature stopping. 🧵1/

I’ll be presenting our paper at COLT in Lyon this Monday at the Predictions and Uncertainty workshop — come say hi if you're around! 👋 Check out @DHolzmueller's thread below 👇 #COLT2025

Finely characterizing the decay of the eigenvalues of kernel matrices: many people need it, but explicit references are hard to find. This blog post reviews amazing asymptotic results from Harold Widom (1963!) and proposes new non-asymptotic bounds. https://t.co/HrrAacOvCA

A free book: Learning Theory from First Principles by @BachFrancis. It covers a bunch of key topics from machine learning (ML) theory and practice, such as:
- Math basics
- Supervised learning
- Generalization, overfitting & adaptivity
- Tools to design learning algorithms
- …

Michael Jordan is indeed one of the greatest thinkers in the history of AI 🐐 Economics, incentives (mechanism design), information flow, creativity, cooperation, greed and power struggles are important topics that we crucially need to understand better for the benefit of

An inspirational talk by Michael Jordan: a refreshing, deep, and forward-looking vision for AI beyond LLMs. https://t.co/QhHPlrRUIs

[🔴#IAScienceSociety] @BachFrancis (@inria_paris-@ENS_ParisSaclay) is taking part in the symposium "The Mathematics of #MachineLearning" alongside @gabrielpeyre, Gilles Louppe (@UniversiteLiege), Gersende Fort (@CNRS) and Vianney Perchet (@INSAE @IP_Paris_).

🔴 @Inria is attending the "AI, Science and Society" conference at @IP_Paris_, a prelude to the #SommetActionIA (AI Action Summit), led by Prof. Michael Jordan (@UCBerkeley-@Inria)! The event was opened this morning by Michael Jordan and this afternoon by @ClaraChappaz 🗣

My book is (at last) out, just in time for Christmas! A blog post to celebrate and present it: https://t.co/ttRo04efDF

Bravo Bertille and Marc!

@MissBrutus @InriaParisNLP @CNRSinformatics @ENS_ULM 🎓#PhDs | Congratulations to @BertilleFollain and Marc Lambert of the joint project-team @Sierra_ML_Lab (@CNRSinformatics, @ENS_ULM) and to Roman Gambelin of the joint project-team DYOGENE (@CNRSinformatics, @ENS_ULM), who defended their #thèse (PhD thesis) in November 🎉👏!

🎓#PhDs | Congratulations to Juliette Murris and Alice Rogier of the @TeamHeka team (@univ_paris_cite, @CRCordeliers, @Inserm), as well as to @oumaymabounou and Céline Moucer of the WILLOW and @Sierra_ML_Lab teams (@CNRSinformatics, @ENS_ULM), who defended their #thèse (PhD thesis) in October 🎉👏!

#ERCStG 🏆 | Congratulations to @TaylorAdrien, research scientist (chargé de recherche) at @inria in the @inria_paris centre and member of the joint project-team @Sierra_ML_Lab (@CNRS @CNRSinformatics @ENS_ULM), on being awarded an ERC Starting Grant 👏 Read his profile and learn about his project 👉 https://t.co/l0gGdsrcSd

How fast is gradient descent, *for real*? Some (partial) answers in this new blog post on scaling laws for optimization. https://t.co/qFpIX2EIZv

Bravo Adrien!

📰 #Article | Discover the CASPER project, led by @TaylorAdrien, a researcher in the joint project-team @Sierra_ML_Lab (@inria_paris/@ENS_ULM/@CNRSinformatics), who has just been awarded an #ERCStG grant. Congratulations Adrien 🙌! 👉 https://t.co/8fp7iDXreu [🧵...]
