Transformer

@ReadTransformer

Followers: 442 · Following: 0 · Media: 7 · Statuses: 122

A publication about the power and politics of transformative AI. Subscribe for free: https://t.co/zlzcMxHVf4

Joined September 2025

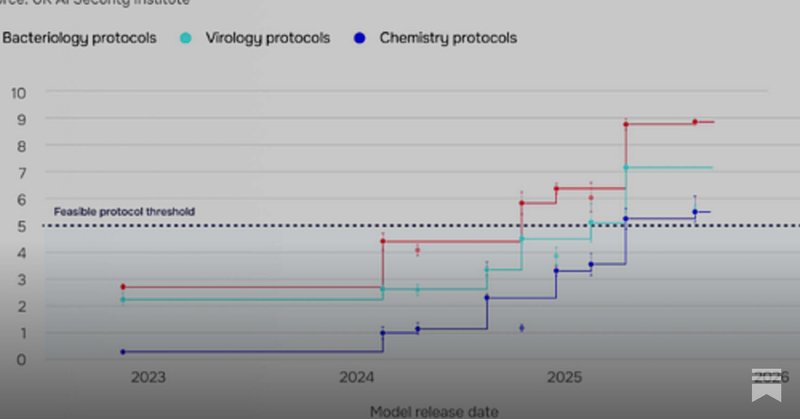

AI models are rapidly improving at potentially dangerous biological and chemical tasks, and also showing fast increases in self-replication capabilities, according to a new report from the UK’s AI Security Institute.

transformernews.ai

UK AISI’s first Frontier AI Trends Report finds that AI models are getting better at self-replication, too

"As crunch time for AI approaches, those at the wheel seem perfectly happy driving a clown car." @ShakeelHashim on why we're in the dumbest timeline for AI, plus GPT 5.2, OpenAI's Disney deal and much more in this week's Transformer Weekly. https://t.co/cUomAZsPOy

transformernews.ai

Transformer Weekly: Trump signs EO, Hochul guts the RAISE Act, and GPT-5.2 launches

So an AI can pick up its identity, on which it then models its behaviour, from the text in its dataset. The huge corpus of classic evil-AI sci-fi novels and research papers on misaligned AI in the datasets of GPTs could be a serious issue for AI labs:

transformernews.ai

The theory that we’re accidentally teaching AI to turn against us

There are already hints this could be an issue. In some evaluations, Sonnet 4.5 explicitly mentioned being tested over 80% of the time, compared to 10% for Sonnet 4. What caused this jump? It was likely due to exposure to misalignment evaluations during training.

Here's a startling sci-fi conceit: what if AIs misbehave because they're trained on all our stories about misbehaving AI? What if articles on the threat of misaligned AI are teaching AI to become misaligned? And what if this becomes a severe problem for all of us pretty soon?

EXCLUSIVE: New York’s governor is trying to turn the RAISE Act into an SB 53 copycat. Gov. Kathy Hochul is proposing to strike the entire text of the RAISE Act, replacing it with verbatim language from SB 53, sources tell Transformer.

From I, Robot to 2001: A Space Odyssey, tales of intelligent robots attacking humanity have become part of the corpus of human knowledge that today’s LLMs are trained on. Are we accidentally teaching models to misbehave by showing them examples?

transformernews.ai

The theory that we’re accidentally teaching AI to turn against us

Sam Altman has declared a "code red" over at OpenAI. And The Future of Life Institute has refused to give any lab a mark higher than "C+" on their latest AI Safety Index. There's all this, and much more, in our latest weekly briefing.

Here's our new piece on the advantages — and the substantial risks — of the way that AI safety works right now:

transformernews.ai

By building their own intellectual ecosystem, researchers worried about existential AI risk shed academia's baggage — and, perhaps, some of its strengths

The AI safety community prioritizes speed and insider familiarity so that they can match AI's astonishing rate of progress. It's a trade-off that bypasses the limits of academia. But it also means ideas might not be getting the scrutiny they deserve.

Why the apparent lack of safety testing on DeepSeek's latest model speaks to bigger problems, plus preemption’s out of the NDAA, OpenAI’s ‘code red,’ Anthropic’s IPO prep and more in today's Transformer Weekly:

transformernews.ai

Transformer Weekly: Preemption’s out the NDAA, OpenAI’s ‘code red,’ and Anthropic’s IPO prep

By building their own intellectual ecosystem, researchers worried about existential AI risk shed academia's baggage — and, perhaps, some of its strengths https://t.co/SSSaqdC8px

The preemption fight is certainly not over — but the position of the groups wanting to regulate AI has never been stronger, thanks in part to their opponents’ repeated miscalculations. You can read more about the ongoing battle for preemption here:

transformernews.ai

The second failed attempt to pass federal preemption of state AI laws could have lasting repercussions for the industry

President Trump endorsed the preemption efforts shortly after a White House visit from the head of an industry-backed group. Since that endorsement has possibly made Trump look weak and not in control of his party, he might be wary of taking advice from such people in future.

The AI industry pushing against regulation finds itself on the back foot in other ways too: AI is becoming increasingly unpopular in the US, and as time passes, politicians will become more worried about giving AI companies a free pass.

Well, the mission to get federal preemption of state AI laws has failed yet again. And the debate around it has shown that a policy of little-to-no AI regulation is a political nonstarter: anyone who's pushed for it has triggered uproar from across the political spectrum.

"Rapid AI diffusion could unleash social disruptions faster than even an adaptive authoritarian state can manage." @Scott_R_Singer on how China's ambitious AI plan could backfire:

transformernews.ai

Opinion: Scott Singer argues that the country’s plan to embed AI across all facets of society could create huge growth — and accelerate social unrest

Anthropic is set to publish its whistleblowing policy for employees imminently — likely as soon as this week — making it the second major AI company to publicly reveal how it will handle internal whistleblowing.

transformernews.ai

As Anthropic prepares to publish its whistleblowing policy, can the industry make the most of protecting those who speak out?

SB 53 forces California to address this problem, and the AG’s office has already announced that it is hiring an AI expert. When regulators take whistleblower protections seriously, companies change internal policies for the better. Read the full op-ed:

transformernews.ai

Opinion: Abra Ganz and Karl Koch argue that whistleblower protections in SB-53 aren’t good enough on the face of it — but how the state chooses to interpret the law could turn that around
