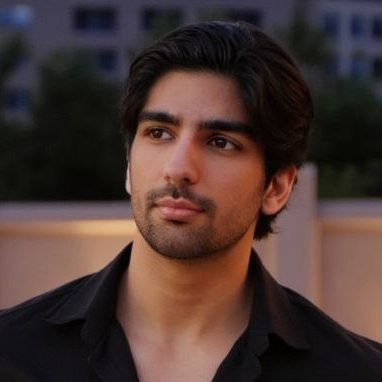

Ravi | ML Engineer

@RaviRaiML

Followers: 1K · Following: 4K · Media: 271 · Statuses: 7K

Freelance ML Engineer | Background in Quantum Computing

Toronto, ON 🇨🇦

Joined December 2023

Cursor is so buggy. And now Claude Code is down. How do we survive this apocalypse?

There are basically two ways to build now:
- Building to learn (slow, ask, understand)
- Building to ship (fast, validate, trust)
I find mixing them just means you'll move slow and gain shallow understanding. Best to pick one and stick with it.

Multitasking is now absolutely feasible, especially when building several POCs. My laziness is now my bottleneck, I guess.

Me: gives AI the full codebase
AI: "I improved 47 files for you!"
Me: "I asked you to add a button"

Being an engineer now is just babysitting mini primitive ultrons. Can't wait to see them grow up.

Working on an audio AI project. It's pretty cool, since I'm usually lost in a bunch of text. Kinda crazy how fast the money can go, though: just to do some vibe checks, we tested on 30 samples for the sake of diversity. It's honestly a stupid-simple workflow, just a few LLM prompt passes.

Gonna be honest for a sec, I did once have to use LLMs to solve a problem meant for XGBoost. And no, it didn't even work.

If you think LLMs don’t drift, you’re just looking in the wrong place. Classical drift hit the model. LLM drift hits the system. Same problem. New surface.

Accuracy is a lagging signal. Early AI R&D is about clarity, not evals. Optimize too soon, and you just get the wrong answers faster.

AI strategy looks obvious in theory. Production is where data gets creative, budgets get opinions, and tradeoffs come due. Prepare well, so when things break, you don't.

Modern AI looks different. The feedback loop is faster. The hype is louder. But the hard parts remain the same.

“We’ll fix it later with AI” is a red flag.
You rarely hear anyone say this explicitly. But it shows up all the time between the lines. Usually in the form of:
- Vague problem statements
- “We’ll figure out the data later”
- Treating AI as a safety net for unclear decisions

A mental model I use for debugging ML systems:
When things go wrong, I assume the system lied, not the model.
I trace it in order: Inputs → Transformations → Model → Outputs
Most bugs live before or after inference, not inside the model.
Treat ML like software, not magic.
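
The trace order in the post above (Inputs → Transformations → Model → Outputs) can be sketched as a small stage-by-stage checker. This is only an illustration, not the author's tooling: the pipeline, the stage functions, the checks, and the planted scaling bug are all hypothetical.

```python
from typing import Any, Callable, List, Optional, Tuple

# Each stage is (name, function to run, check applied to that function's result).
Stage = Tuple[str, Callable[[Any], Any], Callable[[Any], bool]]


def first_failing_stage(stages: List[Stage], raw: Any) -> Optional[str]:
    """Run stages in order; return the name of the first stage whose check fails."""
    value = raw
    for name, run, check in stages:
        value = run(value)
        if not check(value):
            return name
    return None  # every stage passed its check


# Hypothetical toy pipeline (all names below are assumptions for illustration).
def load_inputs(raw):
    return [float(x) for x in raw]


def scale(xs):
    # Planted bug: divides by 10 instead of 100, so values leave [0, 1].
    return [x / 10 for x in xs]


def scale_fixed(xs):
    return [x / 100 for x in xs]


def model(xs):
    return [1 if x > 0.5 else 0 for x in xs]


def format_outputs(preds):
    return ["positive" if p else "negative" for p in preds]


def build_stages(transform) -> List[Stage]:
    return [
        ("inputs", load_inputs, lambda xs: len(xs) > 0),
        ("transformations", transform, lambda xs: all(0.0 <= x <= 1.0 for x in xs)),
        ("model", model, lambda ps: set(ps) <= {0, 1}),
        ("outputs", format_outputs,
         lambda labels: all(l in {"positive", "negative"} for l in labels)),
    ]


raw = ["12", "55", "90"]
culprit = first_failing_stage(build_stages(scale), raw)
healthy = first_failing_stage(build_stages(scale_fixed), raw)
print(culprit, healthy)
```

Here the model itself is fine; the check on the transformation stage is what catches the problem, which is the point of walking the stages in order before suspecting inference.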

Why I don’t start AI apps with chat UIs anymore
Chat UIs make AI feel powerful fast. That’s exactly the problem.
In early AI products, chat features can be a crutch in a way. They hide bad product decisions behind vague inputs, unclear state, and poorly thought out

How I avoid building gimmicky AI features. Beyond obvious UI decorations. Building quality AI features is hard. The last thing you want is one that exists just to exist. A quick gut check I use: If removing the AI doesn’t break a core workflow, it’s probably a gimmick.

Results matter. But they’re not the whole story.
I used to think it was simple: If someone had the result I wanted, I could just do what they did and get there too.
Turns out it doesn’t work like that. The details matter:
- Your starting point
- Your environment
- Your

LLMs are powerful. SLMs are practical. So much so that I think SLMs will steadily rise in usage over the next few years. Not because LLMs aren’t powerful. But because most products don’t need general intelligence. They need fast, cheap, reliable behavior on a narrow

You don’t need to “outgrow” Jupyter notebooks.
That take usually comes from two extremes:
- Everything lives in one messy notebook
- Or notebooks are banned entirely
Both miss the point. Notebooks don’t need to be production code. They don’t need to be the pipeline. They

Before I build an AI feature, I try to kill it.
Not by tearing it down, but by stress-testing the idea before writing any code.
I don’t start with model choice. I don’t start with prompt cleverness. I start with this:
- How will we measure whether it’s actually helping?
-
