Roger Waleffe

@RWaleffe

Followers: 77

Following: 23

Media: 2

Statuses: 26

Computer Sciences PhD student at the University of Wisconsin-Madison

Joined June 2020

RT @DisseminatePod: 🚨 "MariusGNN: Resource-Efficient Out-of-Core Training of Graph Neural Networks" with @RWaleffe is available now! 🎧…

buymeacoffee.com

Hey, I'm Jack from Disseminate: The Computer Science Research Podcast! If you enjoy the show, consider buying me a coffee so I can keep making it. Thanks!

Joint work with Patrik Okanovic @vmageirakos Kostis Nikolakakis @aminkarbasi @DKalogerias @nmervegurel @thodrek.

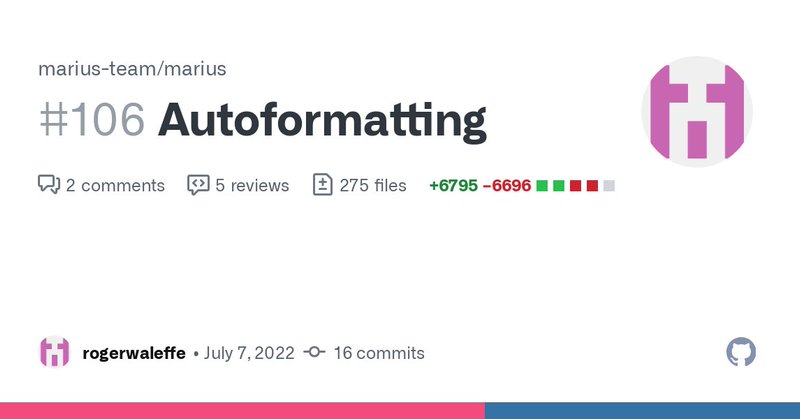

RT @keenuniverse: Marius, another amazing KGE (and more) library is now auto-formatting its code with black as of 🚀….

github.com

This PR adds auto-formatters and linters for the Python and CPP sources. They have been added to GitHub actions to enforce the same style for the code base. The pip install dependencies have also b...

RT @ImmanuelTrummer: Roger Waleffe (@RWaleffe) from @wiscdb introduces the Marius++ system! Check out the talk: @W…

RT @ImmanuelTrummer: Roger Waleffe (@RWaleffe) shows how to train over billion-scale graphs on a single machine! Join us at 1 PM ET via Zo…

RT @thodrek: Scalability is a key factor limiting the use of Graph Neural Networks (GNNs) over large graphs; w/ @RWaleffe, @JasonMohoney,…

RT @thodrek: Accepted to #OSDI21: @JasonMohoney & @RWaleffe show how to train massive graph embeddings in a 𝘀𝗶𝗻𝗴𝗹𝗲 𝗺𝗮𝗰𝗵𝗶𝗻𝗲; don't burn $$$$….

RT @StatMLPapers: Principal Component Networks: Parameter Reduction Early in Training. (arXiv:2006.13347v1 [cs.LG])
