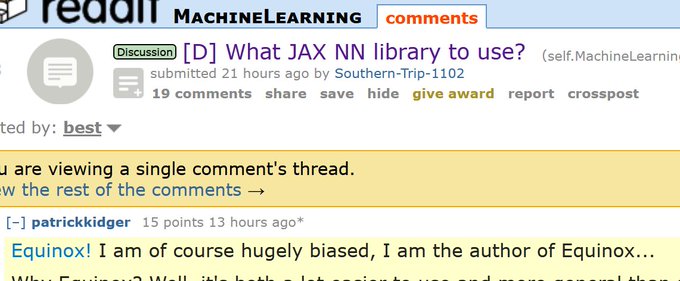

A recent Reddit post on the difference between neural network libraries for #JAX. In which I advocate for Equinox🌓!

Reddit:

GitHub:

Replies

@PatrickKidger

Thanks for the explanations! You mention the lack of footguns and the ease of use. Where does it stand in terms of performance? Have you had the time to benchmark it? Thanks =)

@NoisyFrequency

Because it always boils down to `jax.jit` producing an XLA computation graph (regardless of library), the performance is the usual excellent performance you can expect from JAX.

When using this in the context of diffeq solves I've seen ~100x speedup over PyTorch.
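To illustrate the point about `jax.jit`, here is a minimal sketch in plain JAX, with no neural network library involved. The names `init_params` and `forward` are hypothetical and chosen only for this example. The model is an ordinary function of a parameter pytree, and `jax.jit` traces and compiles it through XLA the same way it would if the parameters came from a library.

```python
import jax
import jax.numpy as jnp


def init_params(key, in_size, hidden_size, out_size):
    # Hypothetical helper: a two-layer MLP stored as a plain dict (a pytree).
    k1, k2 = jax.random.split(key)
    return {
        "w1": jax.random.normal(k1, (in_size, hidden_size)) / jnp.sqrt(in_size),
        "b1": jnp.zeros(hidden_size),
        "w2": jax.random.normal(k2, (hidden_size, out_size)) / jnp.sqrt(hidden_size),
        "b2": jnp.zeros(out_size),
    }


def forward(params, x):
    # Pure function of (params, x): this is all that jax.jit needs to trace.
    h = jnp.tanh(x @ params["w1"] + params["b1"])
    return h @ params["w2"] + params["b2"]


# jax.jit compiles the traced computation with XLA on first call.
forward_jit = jax.jit(forward)

params = init_params(jax.random.PRNGKey(0), 3, 8, 2)
x = jnp.ones((4, 3))

y_eager = forward(params, x)    # op-by-op execution
y_jit = forward_jit(params, x)  # single compiled XLA computation
```

Both calls compute the same values; the jitted version simply runs them as one compiled program. This sketch does not reproduce the ~100x figure quoted above, which depends on the workload.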

@_joaogui1

Uhh. I have no idea. Let me know if you find out?

(I tried googling it, or looking it up myself, but I don't see anything.)

@PatrickKidger

I recently looked into Flax to put together a blog post. Parts of the Flax effect system were quite nice:

* `vmap` with different parameters

* caching for RNNs

* avoiding recomputation of parameters
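For readers unfamiliar with the first item, "`vmap` with different parameters" means mapping a layer over a batch of independent parameter sets, as in an ensemble. The following is a sketch in plain JAX, not Flax's lifted-transform API, and the names `init_linear` and `linear` are hypothetical.

```python
import jax
import jax.numpy as jnp


def init_linear(key, in_size, out_size):
    # Hypothetical helper: parameters of one linear layer as a dict.
    return {
        "w": jax.random.normal(key, (in_size, out_size)),
        "b": jnp.zeros(out_size),
    }


def linear(params, x):
    return x @ params["w"] + params["b"]


# Build 5 independent parameter sets by vmapping the initializer over keys.
# Every leaf gains a leading axis of size 5.
keys = jax.random.split(jax.random.PRNGKey(0), 5)
stacked_params = jax.vmap(lambda k: init_linear(k, 3, 2))(keys)

# Map over the parameter axis (0) while sharing the same input (None).
ensemble = jax.vmap(linear, in_axes=(0, None))

x = jnp.ones((4, 3))
y = ensemble(stacked_params, x)  # shape (5, 4, 2): one output per member
```

Each slice `y[i]` matches what `linear` would return for the `i`-th parameter set on its own.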
