Perry Zhang

@PY_Z001

Followers

945

Following

478

Media

14

Statuses

185

PhD student at UCSD CSE. Working on video generation architecture.

San Diego, US

Joined November 2018

[1/5] [Lmgame Bench] 🎮 Question: Can RL-based LLM post-training on games generalize to other tasks? We shared a preliminary study to explore this question: - Same-family (in-domain): Training on 6×6 Sokoban → 8×8 and Tetris (1 block type) → Tetris (2 block types) transfers,

2

13

95

Excited to share my 1st project as a Research Scientist Intern at Meta FAIR! Grateful to my mentor @jiawzhao for guidance, and to @tydsh & Xuewei for their valuable advice and collaboration. Our work DeepConf explores local confidence for more accurate & efficient LLM reasoning!

Introducing DeepConf: Deep Think with Confidence 🚀 First method to achieve 99.9% on AIME 2025 with open-source models! Using GPT-OSS-120B even without tools, we reached this almost-perfect accuracy while saving up to 85% generated tokens. It also delivers many strong

11

14

86

[Lmgame Bench] 🤔 Ever wondered how to evaluate different games in Lmgame-Bench or even add your own, but don’t know where to start? We’ve made it super easy to run evaluations and integrate new games. Our latest blog walks you through a few key features from Lmgame Bench

1

3

20

Simple design ofen wins in the long run. GPT-OSS uses sliding window atteniton. Our Sliding Tile Attention brings efficieint window attention to video generation: https://t.co/KnkCqpP3lt

Just released gpt-oss: state-of-the-art open-weight language models that deliver strong real-world performance. Runs locally on a laptop!

0

2

23

Try FastWan at https://t.co/PV8g6YE6dA!

(1/n) 🚀 With FastVideo, you can now generate a 5-second video in 5 seconds on a single H200 GPU! Introducing FastWan series, a family of fast video generation models trained via a new recipe we term as “sparse distillation”, to speed up video denoising time by 70X! 🖥️ Live

0

2

14

Crazy fast!! Great work from @haoailab

(1/n) 🚀 With FastVideo, you can now generate a 5-second video in 5 seconds on a single H200 GPU! Introducing FastWan series, a family of fast video generation models trained via a new recipe we term as “sparse distillation”, to speed up video denoising time by 70X! 🖥️ Live

0

1

4

(1/n) 🚀 With FastVideo, you can now generate a 5-second video in 5 seconds on a single H200 GPU! Introducing FastWan series, a family of fast video generation models trained via a new recipe we term as “sparse distillation”, to speed up video denoising time by 70X! 🖥️ Live

10

109

436

I learned a lot from NATTEN!

Watch my talk about NATTEN on @GPU_MODE this Saturday at 3PM ET / noon PT. I'll go over all the exciting new features we shipped very recently, especially our Hopper and Blackwell FNA kernels, now speeding up video / world models by up to 2.6X e2e! https://t.co/Wn2wwekOVZ

0

0

2

Watch my talk about NATTEN on @GPU_MODE this Saturday at 3PM ET / noon PT. I'll go over all the exciting new features we shipped very recently, especially our Hopper and Blackwell FNA kernels, now speeding up video / world models by up to 2.6X e2e! https://t.co/Wn2wwekOVZ

1

6

26

📣 We’ve had three papers accepted at #ICML2025, Hao-AI-Lab is sending @haozhangml to attend ICML in person😂! If you're around, please find Hao at the venue and chat with him about video diffusion, LLM agents, and efficient attention 👋🧠 🎬 Fast Video Generation with Sliding

1

5

17

Heading to ICML next week (Monday - Thursday). Down to chat research, ideas, anything cool, or just hang 😄📍🎯

0

2

10

🚀 Attention is the bottleneck in video DiTs—5 s of 720p = 100K+ tokens, quadratic cost blows up fast. Sparse/linear attention is 🔑 for long-context world models. 🧠 Track relavent papers in our awsome-video-attention repo → https://t.co/nJJyfadLgo

#WorldModel #VideoAI

github.com

A curated list of recent papers on efficient video attention for video diffusion models, including sparsification, quantization, and caching, etc. - hao-ai-lab/Awesome-Video-Attention

0

9

40

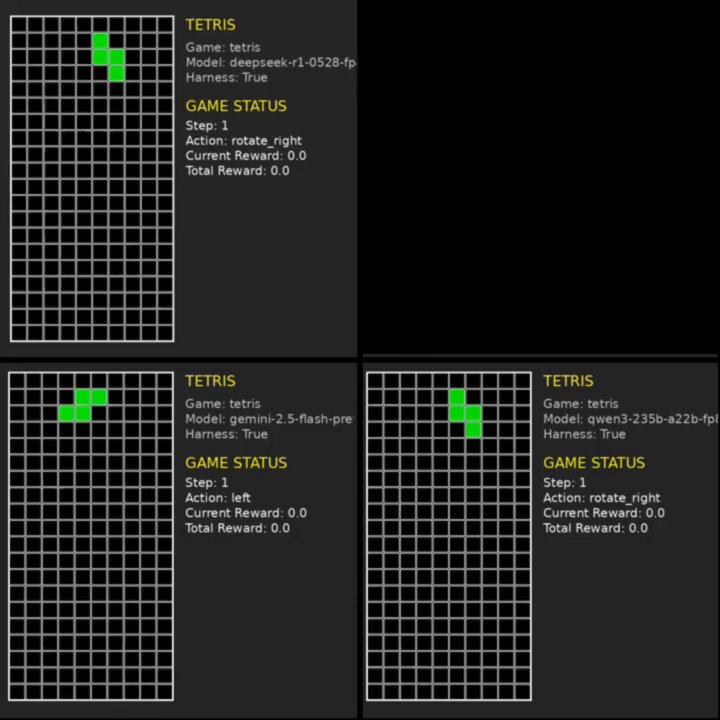

🔧🤖 New wave of open-source LLMs like Deekseek-R1-0528 and Qwen3-235B-A22B are leveling up with stronger agentic performance. We test them in head-to-head gameplay — the upgraded Deekseek-R1-0528 outsmarts strong reasoning models like o4-mini across several games and it nearly

7

64

285

I will be giving a talk in @GPU_MODE tomorrow (May 31 12pm PST) about FastVideo/STA/VSA. Come if you're interested! https://t.co/eIlyPDde0y

2

22

111

Announcing FastVideo V1, a unified framework for accelerating video generation. FastVideo V1 offers: - A simple, consistent Python API - State of the art model performance optimizations - Optimized implementations of popular models Blog: https://t.co/0lFBmrrwYN

2

43

164

STA is accepted by ICML 2025!!

🎥 Videos DiTs are painfully slow, HunyuanVideo takes 16 min to generate a 5s 720P video on H100. 🤯 Announcing Sliding Tile Attention (STA): * Accelerate 3D full attention (FA3) by up to 10x * Slash the end-to-end time from 16 --> 5 mins * NO extra training. NO quality loss!

0

6

28

Thrilled to share recent research from our fascinating lab members and collaborators at #ICLR2025! 🚀✨ Come say hi in our poster sessions and dive into discussions on LLM agents, reasoning, long-context training, efficient inference, and more. We’re excited to share, learn and

0

3

21

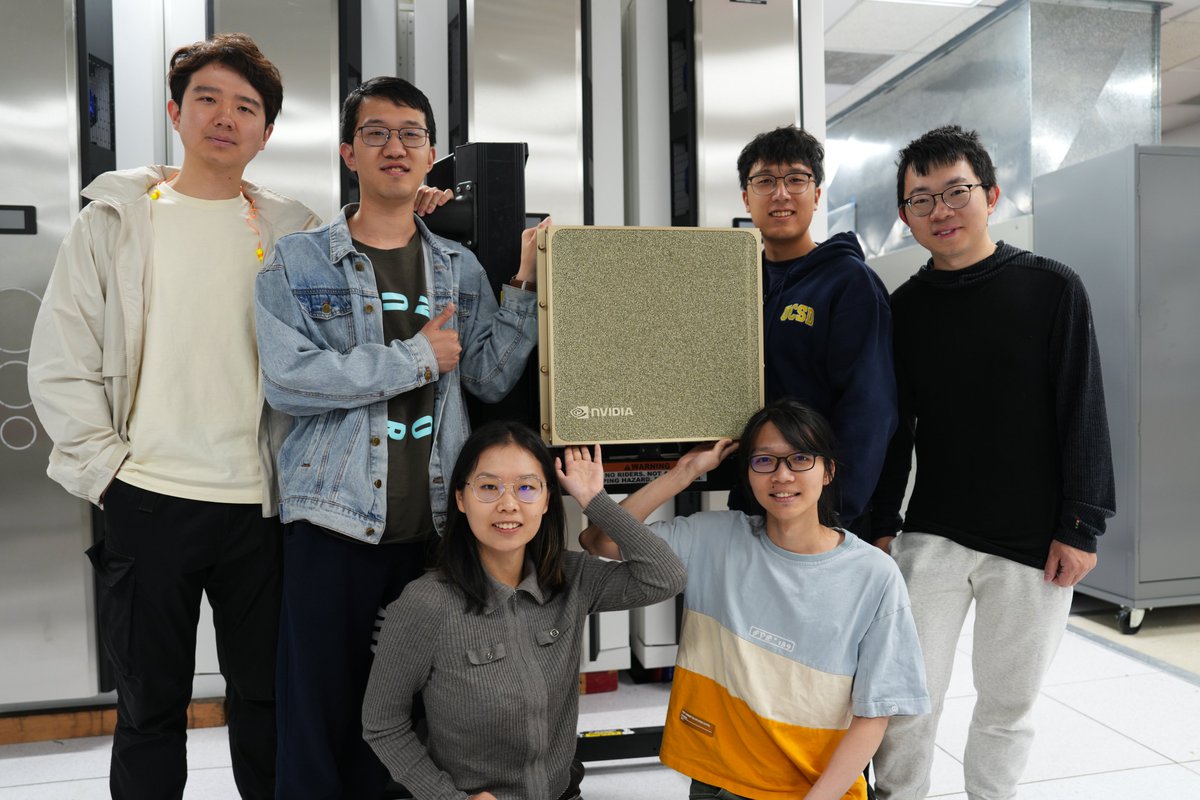

Let me tell a real story of my own with @nvidia. Back in 2014, I was a wide-eyed first-year PhD student at CMU in @ericxing's lab, trying to train AlexNet on CPU (don’t ask why). I had zero access to GPUs. NVIDIA wasn’t yet "THE NVIDIA" we know today—no DGXs, no trillion-dollar

We are beyond honored and thrilled to welcome the amazing new @nvidia DGX B200 💚 at @HDSIUCSD @haoailab. This generous gift from @nvidia is an incredible recognition and an opportunity for the UCSD MLSys community and @haoailab to push the boundaries of AI + System research. 💪

21

58

633