Peymàn M. Kiasari

@PKiasari

Followers 23 | Following 450 | Media 27 | Statuses 108

ML researcher and engineer | currently focusing on computer vision, especially DS-CNNs

Vienna, Austria

Joined August 2021

If you are interested in generalization in deep learning, you can find our poster in the poster session right now at #AAAI2025 :)

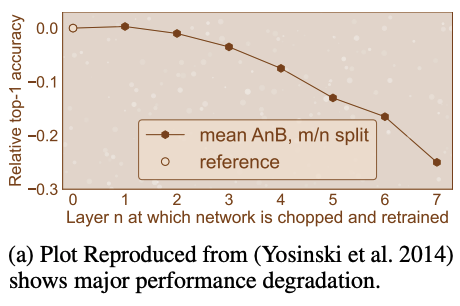

Camera-ready version is out! TL;DR: Deep CNN filters may be generalized, not specialized as previously believed. Major update (Fig. 4): we froze layers from end to start instead of from start to end. Ironically, the result suggests that early layers are specialized!
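To make the difference between the two freezing orders concrete, here is a minimal sketch. It assumes a network represented as an ordered list of layer names (the names below are hypothetical) and only shows which layers end up frozen for a given count `k`. The helper `frozen_layers` is illustrative and is not the paper's experimental code.

```python
def frozen_layers(layers, k, direction):
    """Return the names of the k layers to freeze (hypothetical helper).

    direction="start_to_end": freeze the first k layers (input side).
    direction="end_to_start": freeze the last k layers (output side).
    """
    if not 0 <= k <= len(layers):
        raise ValueError("k must be between 0 and len(layers)")
    if direction == "start_to_end":
        return layers[:k]
    if direction == "end_to_start":
        # Slice from len(layers) - k rather than -k so that k == 0 yields [].
        return layers[len(layers) - k:]
    raise ValueError(f"unknown direction: {direction!r}")


# Hypothetical five-layer network, listed from input to output.
layers = ["conv1", "conv2", "conv3", "conv4", "fc"]
print(frozen_layers(layers, 2, "start_to_end"))  # first two layers
print(frozen_layers(layers, 2, "end_to_start"))  # last two layers
```

In a framework such as PyTorch, "freezing" a layer would typically mean setting `requires_grad = False` on its parameters so that only the remaining layers are trained.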


🧵1/13 Happy to announce our AAAI paper! We challenge the notion, largely established in @jasonyo's influential work, that CNN filters should specialize in deeper layers. Our findings suggest something quite different! This is a continuation of our CNN-understanding paper series:


A company created a huge open-source model along with a full paper and publicly shared everything they had, unlike closed companies. Now people are debating whether they are a "threat" or not. I wonder whether the reaction would be the same if they weren't Chinese.


RT @PaulGavrikov: Our discoveries? VLMs naturally lean more towards shape, mimicking human perception more closely than we thought! And we….


13/13 If you prefer video content, you can check out the video I made for AAAI. Thanks for reading! Have a great day! #DeepLearning #ComputerVision #AAAI2025
