Ohad Asor

@ohadasor

Followers: 888 | Following: 20 | Media: 1 | Statuses: 131

@TauLogicAI Founder & CTO. The logical way to AI! I rarely check my DMs, so it's better to DM @TauLogicAI.

Joined March 2009

Machine learning models / LLMs excel at patterns but will never offer logical correctness for non-trivial/complex problems. I'm excited about formal software synthesis from logical requirements, where correctness is guaranteed by construction rather than hoped for.

Imagine software that adapts to you, individualized to your needs. Ohad Asor and the Tau Team are building the first and ONLY blockchain that its users fully control:

Light years away: not even close, and it doesn't even say anything. An LLM can't even begin to help code the Tau language. https://t.co/ET1VnLf2JV

Quantum mechanics works very well (except when it doesn't), but whenever a quantum theory is illogical, they say "find a better logic". LLMs work very well (except when they don't), but whenever they are illogical, they say "what is logic? there isn't such a thing". Coincidence? No.

Show me one blockchain project that is not just more of the same thing. Show me one AI project that is not just more of the same thing. I'll show you one that ticks both boxes: @TauLogicAI $AGRS. Read my two recent articles here to see why.

Asked ChatGPT "why can't machine learning perform logical reasoning?" and got a good answer! It's too long to paste here, so try it yourself.

I bet that no field today is as full of misconceptions, fake news, and outright lies as AI and blockchain each are. And I live in both of those worlds. But I will never succumb to falsity.

"The results show that a four-way interaction is difficult even for experienced adults to process without external aids." Yes, we humans suck. But LLMs suck even more: you can do better than an LLM with only a few minutes of work. The only way to surpass human capabilities is

At least they don't go backward, but they still go to the left instead of forward: 1. If first-order logic theorem provers could practically verify nontrivial programs, they'd have revolutionized the software development world long ago. In fact, the only reasonable software specification language, not only for

"How did we get to the doorstep of the next leap in prosperity? In three words: deep learning worked. In 15 words: deep learning worked, got predictably better with scale, and we dedicated increasing resources to it." Mark my words: it may take some time, but machine learning (in

Heh. This is religious talk. Junkie talk. People try to reaffirm their own and society's beliefs at all costs. First, machine learning can't reason; more specifically, it can't infer, and more generally, it can't perform a single class of tasks accurately, and will never be able to, by its

Quoted post: I really hate the argument about whether LLMs can reason or not. Can anyone mathematically differentiate between inference and reasoning? :) People treat reasoning like it’s something magical, but I bet many who argue about this issue can’t define it, relying more on gut feelings

The easy argument against the machine learning bubble is information-theoretic rather than complexity-theoretic. If a "model" is trained on a set of examples, why should we believe it'll perform well on instances it never saw? Well, it is easy to see that it's simply impossible: the

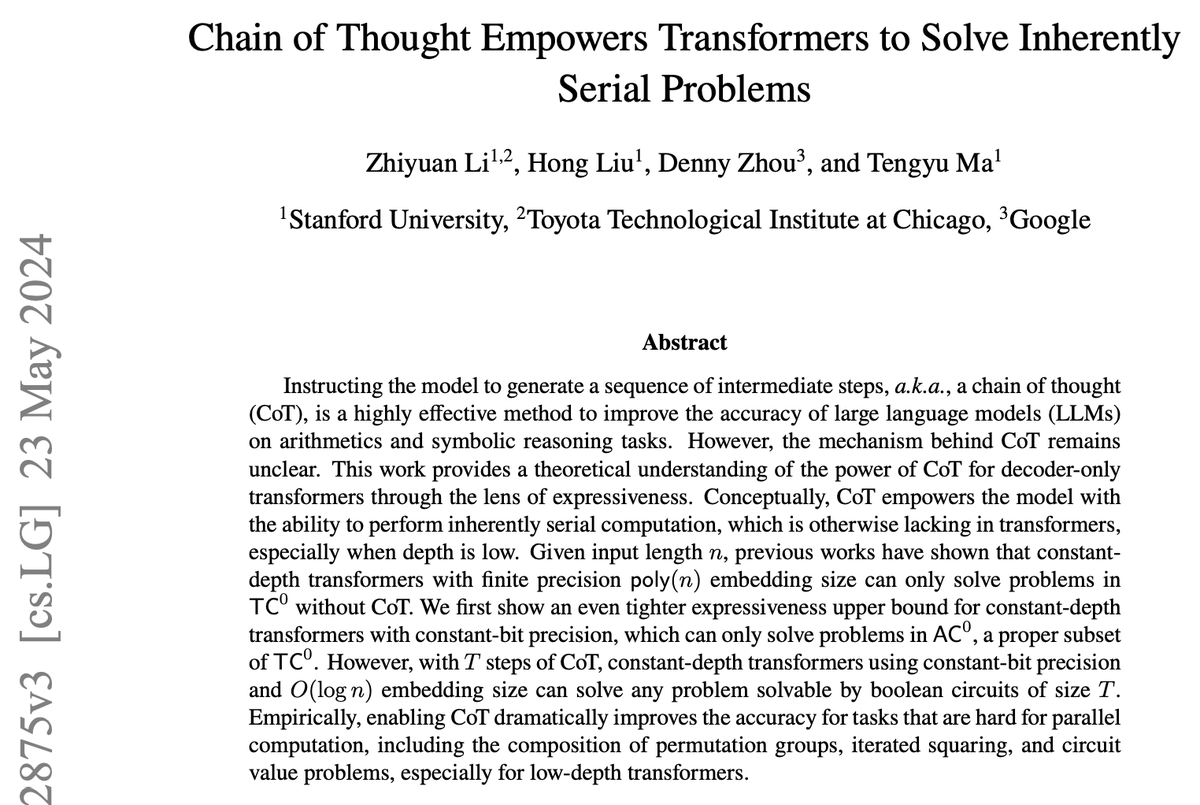

"can solve any problem"? Really?? Let's read the abstract in the image attached to the post and see whether the quote is correct. Ah wow! Somehow he forgot to quote the rest of the sentence! How is that possible? The full quote is "can solve any problem solvable by boolean circuits

Quoted post: What is the performance limit when scaling LLM inference? Sky's the limit. We have mathematically proven that transformers can solve any problem, provided they are allowed to generate as many intermediate reasoning tokens as needed. Remarkably, constant depth is sufficient.

This is funny on so many levels. Yes, ML in general (including NNs) does interpolate, but it also extrapolates. The whole field of theoretical ML is about the dialectics between interpolation and extrapolation, the latter, of course, only up to a certain accuracy, never full accuracy.

Quoted post: No, @ylecun, high dimensionality doesn’t erase the critical distinction between interpolation & extrapolation. Thread on this below, because only way forward for ML is to confront it directly. To deny it would invite yet another decade of AI we can't trust. (1/9)