Gherman Novakovsky (слава Україні! 🇺🇦)

@NovakovskyG

Followers

276

Following

3K

Media

23

Statuses

752

PhD, Illumina AI lab; interested in Deep Learning and genome regulation; also drawing, martial arts, guitar, and death metal! (he/him)

Joined January 2018

Excited to share my first contribution here at Illumina! We developed PromoterAI, a deep neural network that accurately identifies non-coding promoter variants that disrupt gene expression.🧵 (1/)

2

31

111

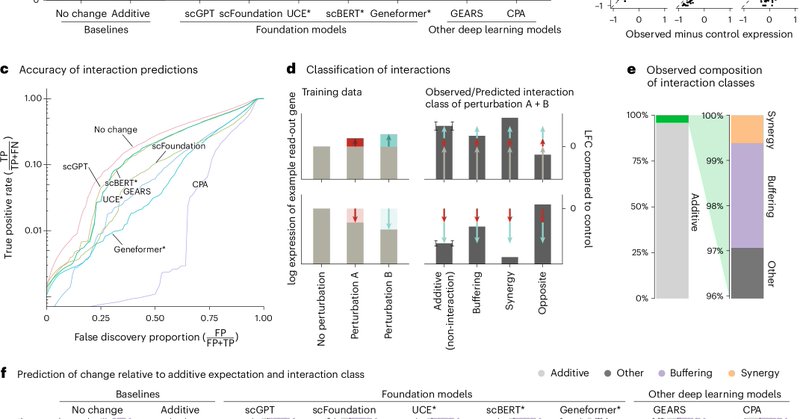

Systema: a framework for evaluating genetic perturbation response prediction beyond systematic variation https://t.co/hF56Sxbj82

2

26

130

Our latest book on the mathematical principles of deep learning and intelligence has been released publicly at: https://t.co/ihPBCkI3x5 It also comes with a customized Chatbot that helps readers study and a Chinese version translated mainly by AI. This is an open-source project.

15

278

1K

A reminder that not every problem benefits from large language models. Low-rank matrix completion or collaborative filtering will likely give you even stronger baselines. https://t.co/7P5yhzLQKE

nature.com

Nature Methods - The analysis presented in this Brief Communication shows that, despite their complexity, current deep learning models do not outperform linear baselines in predicting gene...

0

2

28

We observed the same across six promoter variant effect benchmarks. Evo2 did worse than basic CNNs and had ~0.5 auROC on some tasks.

*Easter egg alert* NOT in the published paper. We also benchmarked Evo 2 and while it did better than other gLMs (consistent that scale can improve gLMs), it still falls short of a basic CNN trained using one-hot sequences and far short of supervised SOTA https://t.co/vDKDzwJqq6

4

7

44

Excited to introduce LiftOn – an open-source tool for accurate liftover of genome annotations (GFF) across assemblies. 🚀 👉 Code &community: https://t.co/Ri8KjyLPaN It’s been incredibly rewarding building this for the genomics community. Thank you to all collaborators/friends!

github.com

🚀 LiftOn: Accurate annotation mapping for GFF/GTF across assemblies - Kuanhao-Chao/LiftOn

Genome annotation is falling behind how fast genomes can be assembled—but Johns Hopkins researchers @KuanHaoChao, @StevenSalzberg1, @elapertea, @alaina_shumate, @celinehohzm, & @alan_mayonnaise (+ @JakobHeinz9) have created a tool that can change that:

0

18

58

Congratulations Gherman! 🖥️🧬🥳 A tour-de-force of AI/ML on predicting promoter variant effects in humans. 🔗: https://t.co/BJkX0jNlYt

#MachineLearning #Bioinformatics #Genomics

science.org

Only a minority of patients with rare genetic diseases are presently diagnosed by exome sequencing, suggesting that additional unrecognized pathogenic variants may reside in noncoding sequence. In...

Excited to share my first contribution here at Illumina! We developed PromoterAI, a deep neural network that accurately identifies non-coding promoter variants that disrupt gene expression.🧵 (1/)

0

2

9

Huge thanks to the amazing Illumina team—this was an incredible learning experience! I'm excited to keep pushing forward as we develop models to tackle gene expression and non-coding variant interpretation. (16/)

0

0

2

A complementary thread from my colleague Kishore Jaganathan @kjaganatha

https://t.co/RzEy22UjtZ (15/)

We're thrilled to introduce PromoterAI — a tool for accurately identifying promoter variants that impact gene expression. 🧵 (1/)

1

1

3

Want to learn more about PromoterAI? 📄 Read the paper: https://t.co/92wvzo3R1o 💻 Explore the code & precomputed scores: https://t.co/UeHuSUCaND. (14/)

1

1

4

We followed up by testing promoter variants in Mendelian genes using MPRA. Surprisingly, PromoterAI was more effective than MPRA at prioritizing variants linked to patient phenotypes, highlighting limitations of MPRA for rare disease interpretation. (13/)

1

0

1

While we noticed that the use of additional species such as mouse does not lead to substantial improvement of variant effect prediction, it does help with ensembling. Thus, the final model is an ensemble of two: trained on human only and trained on mouse+human together. (12/)

1

0

1

In the Genomics England rare disease cohort, functional promoter variants predicted by PromoterAI were enriched in phenotype-matched Mendelian genes. These variants accounted for an estimated 6% of the rare disease genetic burden. (11/)

1

0

0

In the @uk_biobank cohort, PromoterAI's predicted promoter variant effects correlated strongly with measured protein levels and quantitative traits, suggesting that promoter variants contribute meaningfully to phenotypic variation in the general population. (10/)

1

0

1

PromoterAI's embeddings split promoters into three distinct classes: P1 (~9K genes, ubiquitously active), P2 (~3K genes, bivalent chromatin), E (~6K genes, enhancer-like). The E class, enriched for TATA boxes, may reflect enhancers co-opted as promoters. (9/)

1

0

2

Fine-tuning improved PromoterAI’s ability to predict the direction of motif effects — a known issue of multitask models. The model often recognized motifs before fine-tuning, but got the direction wrong. After fine-tuning, its predictions aligned better with the data. (8/)

1

0

1

We used our list of gene expression outliers to explore their effect on transcription factor binding sites. Our results show that it is easier for new variants to cause outlier gene expression by disrupting existing regulatory components rather than creating new ones. (7/)

1

0

2

We also attempted to fine-tune Enformer and Borzoi on our promoter variant set. While performance improved, both models lagged behind PromoterAI. Notably, PromoterAI outperformed Enformer and was similar to Borzoi before fine-tuning. (6/)

1

0

2

When it comes to predicting expression effects of promoter variants, PromoterAI achieved best performance across benchmarks spanning RNA, proteins, QTLs, and MPRA. (5/)

1

0

2

The second step was to fine-tune the model using a carefully curated list of rare promoter variants linked to aberrant gene expression. The fine-tuning was done using a twin-network setup to ensure the generalization across unseen genes and datasets. (4/)

1

0

3

First, we pre-trained PromoterAI to predict histone marks, TF binding, DNA accessibility, and CAGE signal from a genomic sequence. The key difference with models like Enformer and Borzoi is that we predict at a single base-pair resolution and use only TSS-centered regions. (3/)

1

0

3