Navid Azizan

@NavidAzizan

Followers: 2K · Following: 277 · Media: 21 · Statuses: 101

MIT Prof | AI & machine learning, systems & control, optimization | Fmr postdoc @Stanford, PhD @Caltech

Cambridge, MA

Joined June 2018

Introducing Instance-Adaptive Inference-Time Scaling! Paper: https://t.co/0mGdkUjMXK Code: https://t.co/uENXKuoL0T

🧠 Inference-time scaling lets LLMs spend more compute to solve harder problems, but not every question needs that! After all, we don’t use a whiteboard to solve 1 + 1. So why should an LLM? Introducing Instance-Adaptive Inference-Time Scaling, a smarter way to allocate

In collaboration with the @MITIBMLab, thanks to the one and only @HW_HaoWang!

Paper: https://t.co/EHpD7XUMWg Code: github.com/azizanlab/repreli

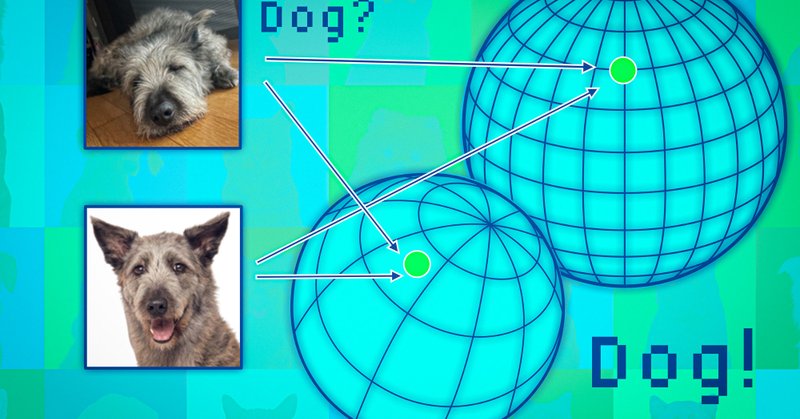

How to assess a general-purpose AI model’s reliability before it’s deployed. A new technique from MIT LIDS researchers @NavidAzizan and Young-Jin Park enables users to compare several large models and choose the one that works best for their task. https://t.co/AHEqwkFYkS

Wondering when to trust pre-trained AI models and how to assess their reliability before deployment? Check out our work at #UAI2024! If you’re in Barcelona, visit my poster (#368) tomorrow!! 🔗 Read More: https://t.co/qSW0IH51zj (Paper), https://t.co/SD8ioXjaWM (MIT News).

news.mit.edu

A new technique estimates the reliability of a self-supervised foundation model, like those that power ChatGPT, without the need to know what task that model will be deployed on later.

📢 Still a few days left to apply for our postdoc position: https://t.co/8GYqB9Nz9N Candidates who wish to be considered for the "MIT Postdoctoral Fellowship for Engineering Excellence" may also apply here and list my name: https://t.co/uquuPYdNlv Deadline: Jan 31 @MIT @MIT_SCC

Fri, Dec 15, 17:20-17:40: 𝐎𝐧 𝐭𝐡𝐞 𝐂𝐨𝐧𝐯𝐞𝐫𝐠𝐞𝐧𝐜𝐞 𝐑𝐚𝐭𝐞 𝐨𝐟 𝐃𝐢𝐬𝐭𝐫𝐢𝐛𝐮𝐭𝐞𝐝 𝐋𝐢𝐧𝐞𝐚𝐫 𝐒𝐲𝐬𝐭𝐞𝐦 𝐒𝐨𝐥𝐯𝐞𝐫𝐬 Session: Distributed Control III (Roselle Junior 4711) 𝐁𝐨𝐫𝐢𝐬 𝐕𝐞𝐥𝐚𝐬𝐞𝐯𝐢𝐜 (MIT) https://t.co/6aVJftw3Mw

Fri, Dec 15, 10:20-10:40: 𝐃𝐚𝐭𝐚-𝐃𝐫𝐢𝐯𝐞𝐧 𝐂𝐨𝐧𝐭𝐫𝐨𝐥 𝐰. 𝐈𝐧𝐡𝐞𝐫𝐞𝐧𝐭 𝐋𝐲𝐚𝐩𝐮𝐧𝐨𝐯 𝐒𝐭𝐚𝐛𝐢𝐥𝐢𝐭𝐲 Session: Data-Driven Verification & Control of Cyber-Physical Systems (Orchid Main 4202-4303) 𝐘𝐨𝐮𝐧𝐠𝐣𝐚𝐞 𝐌𝐢𝐧 (MIT) @youngjaem0 https://t.co/WQDMXYpvli

Today, Dec 14, 16:20-16:40: 𝐎𝐧𝐥𝐢𝐧𝐞 𝐋𝐞𝐚𝐫𝐧𝐢𝐧𝐠 𝐟𝐨𝐫 𝐄𝐪𝐮𝐢𝐥𝐢𝐛𝐫𝐢𝐮𝐦 𝐏𝐫𝐢𝐜𝐢𝐧𝐠 𝐮𝐧𝐝𝐞𝐫 𝐈𝐧𝐜𝐨𝐦𝐩𝐥𝐞𝐭𝐞 𝐈𝐧𝐟𝐨𝐫𝐦𝐚𝐭𝐢𝐨𝐧 Session: Learning, Optimization, & Game Theory (Orchid Main 4202-4306) 𝐇𝐚𝐨𝐲𝐮𝐚𝐧 𝐒𝐮𝐧 (MIT) https://t.co/Ze7Q4T47Xg

🚀 Excited to be @IEEECDC2023 in Singapore with three of my brilliant students presenting their papers today and tomorrow! (See details below.) P.S. Yes, we ditched @NeurIPSConf this year, sorry! #IEEECDC2023 #CDC2023

A new machine-learning technique can efficiently learn to control a robot, leading to better performance. Using this method, “we’re able to naturally create controllers that function much more effectively in the real world,” Navid Azizan says. https://t.co/bkSQV8ylLH

If you are at #ICML2023, check out our oral by @spenMrich! Schedule:

Excited to present "Learning Control-Oriented Dynamical Structure from Data" next week at #ICML2023! We enforce factorized structure in learned dynamics models to enable performant nonlinear control. Paper: https://t.co/f79wPtohz9 Code (w/ #JAX): https://t.co/jqorikwxt5

When can we trust the output representations of "foundation models"? Turns out one may be able to tell: https://t.co/EHpD7XUMWg Skillfully done by my wonderful student @Young_J_Park @MIT & the amazing @HW_HaoWang of @MITIBMLab. See the 🧵 below

So many pre-trained models fueling diverse downstream tasks! When can we confidently trust and leverage these models? 🤔 Check it out! “Representation Reliability and Its Impact on Downstream Tasks” (https://t.co/kloJzG6JUG)

@HW_HaoWang, @ShervinArdeshir, and @NavidAzizan

SketchOGD: Memory-Efficient Continual Learning. (arXiv:2305.16424v1 [cs.LG])

Professor Navid Azizan has been selected as the 2023 Outstanding UROP Faculty Mentor. Each spring, UROP (Undergraduate Research Opportunities Program) students nominate research mentors who have demonstrated exceptional guidance and teaching in a research setting.

Youngjae will be presenting his work on one-pass learning at 2:50-3:10pm https://t.co/C6lVAY7Srq

Can we learn sequentially available data without retraining on previous datapoints? We propose 𝗢𝗥𝗙𝗶𝘁 (Orthogonal Recursive Fitting), an algorithm for "one-pass" learning which seeks to fit every new datapoint while minimally changing the predictions on previous data. 1/3
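
The "one-pass" idea in the post above can be illustrated on a toy case. The sketch below is not the ORFit algorithm from the paper; it assumes a plain linear model f(x) = w·x, and the function name `one_pass_fit` and the tolerance `tol` are hypothetical. Each new input is projected onto the directions not spanned by earlier inputs, and the weights move only along that projected direction, so the new point is fit exactly while predictions on earlier points are left unchanged.

```python
import numpy as np


def one_pass_fit(X, y, tol=1e-10):
    """Toy one-pass fit for a linear model f(x) = w @ x (illustrative only)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.zeros(X.shape[1])
    basis = []  # orthonormal directions spanned by the inputs seen so far

    for x_t, y_t in zip(X, y):
        # Strip from x_t every component that lies along earlier inputs.
        g = x_t.copy()
        for u in basis:
            g -= (u @ g) * u

        norm_sq = g @ g
        if norm_sq < tol:
            # x_t adds no new direction, so no update can fit it without
            # disturbing earlier predictions; this toy version skips it.
            continue

        # g @ x_t equals norm_sq, so this step makes w @ x_t equal y_t.
        # g is orthogonal to earlier inputs, so their predictions stay put.
        w += (y_t - w @ x_t) / norm_sq * g
        basis.append(g / np.sqrt(norm_sq))

    return w
```

Under these assumptions, each datapoint is visited once and never revisited; the only state carried forward is the weight vector and the stored orthonormal directions. For nonlinear models the inputs would presumably be replaced by prediction gradients, but that extension is not shown here.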

If you are at #CDC22 @CSSIEEE, come to the invited session on 𝐑𝐞𝐜𝐞𝐧𝐭 𝐀𝐝𝐯𝐚𝐧𝐜𝐞𝐬 𝐢𝐧 𝐋𝐞𝐚𝐫𝐧𝐢𝐧𝐠 𝐚𝐧𝐝 𝐂𝐨𝐧𝐭𝐫𝐨𝐥 at 𝟏:𝟑𝟎-𝟑:𝟑𝟎𝐩𝐦 in Tulum Ballroom 𝐄 w. @KaiqingZhang @guannanqu & @AdamWierman

#CDC2022
