Chenhao Zheng

@Michael3014018

Followers

75

Following

22

Media

10

Statuses

20

Computer Vision PhD student @uwcse | Student Researcher @allen_ai | ex Undergrad @UMichCSE and @sjtu1896

Seattle, WA

Joined December 2022

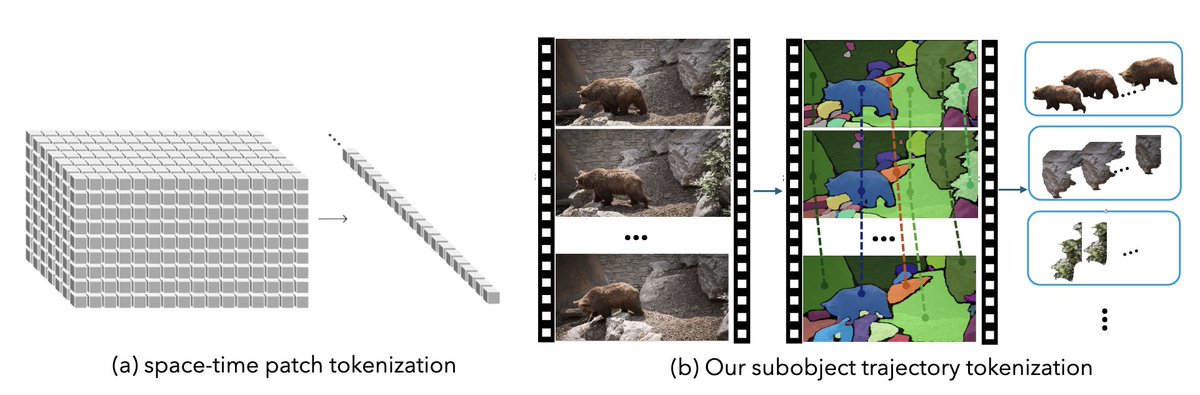

Struggling with excessive token counts when processing video? Check out our paper, accepted to ICCV 2025 with an average score of 5.5! We tokenize video with tokens grounded in the trajectories of all objects rather than fixed-size patches. Trained with a

1

25

112

Extremely grateful to work with the amazing team!! @JieyuZhang20, @mrezasal1, Ziqi Gao, Vishnu Iyengar, Norimasa Kobori, Quan Kong, @RanjayKrishna.

1

0

2

RT @jae_sung_park96: 🔥We are excited to present our work Synthetic Visual Genome (SVG) at #CVPR25 tomorrow! 🕸️ Dense scene graph with d…

0

8

0

RT @JieyuZhang20: Calling all #CVPR2025 attendees! Join us at the SynData4CV Workshop at @CVPR (Jun 11 full day at Grand C2, starting at 9…

syndata4cv.github.io

CVPR 2025 Workshop | June 11th, 2025, Grand C2 | Nashville, TN, United States

0

8

0

RT @ainaz_eftekhar: 🎉 Excited to introduce "The One RING: a Robotic Indoor Navigation Generalist" – our latest work on achieving cross-embo…

0

36

0

RT @jbhuang0604: The slide is bad, her response to an audience is even worse… “Maybe there is one, maybe they are common, who knows what.…

0

20

0

This project is led by @zitong_lan, in collaboration with @zhiwei_zzz, and advised by @mingmin_zhao. Thanks for everyone’s great efforts!

0

0

0

Excited to share our #NeurIPS2024 spotlight: Acoustic Volume Rendering (AVR) for Neural Impulse Response Fields. AVR greatly improves the state of the art in novel-view spatial audio synthesis by introducing acoustic volume rendering. Listen with headphones to the example below

1

6

17

RT @DJiafei: Humans learn and improve from failures. Similarly, foundation models adapt based on human feedback. Can we leverage this failu…

0

43

0