Maria Lomeli

@MariaLomeli_

Followers

390

Following

526

Media

1

Statuses

94

Researcher and engineer @AIatMeta, FAIR | PhD from @GatsbyUCL and former postdoc @CambridgeMLG

London, UK

Joined August 2022

Our Source2Synth paper is out, great work everyone @AlisiaLupidi , @carlos_gemmell , @nicola_cancedda , @JaneDwivedi , @jaseweston , @j_foerst and @robertarail !.

🚨New paper: Source2Synth🚨.- Generates synthetic examples grounded in real data .- Curation step makes data high quality based on answerability.- Improves performance on two challenging domains: Multi-hop QA and using tools: SQL for tabular QA .🧵(1/4)

1

8

25

RT @MinqiJiang: Recently, there has been a lot of talk of LLM agents automating ML research itself. If Llama 5 can create Llama 6, then sur….

0

196

0

RT @polkirichenko: Excited to release AbstentionBench -- our paper and benchmark on evaluating LLMs’ *abstention*: the skill of knowing whe….

0

81

0

RT @j_foerst: Hello World: My team at FAIR / @metaai (AI Research Agent) is looking to hire contractors across software engineering and ML.….

docs.google.com

We are looking for contractors. If you have a track record of ML-Ops and / or SWE excellence and are looking to work with us on a contracting basis, please fill in below.

0

23

0

RT @robertarail: If you work on computer use agents, consider submitting your paper or demo to our #ICML2025 workshop!.

0

3

0

RT @jaseweston: 🚨 New paper & dataset! 🚨.NaturalReasoning: Reasoning in the Wild with 2.8M Challenging Questions.- Synthesizes 2.8M challen….

0

90

0

RT @robertarail: Super excited to share 🧠MLGym 🦾 – the first Gym environment for AI Research Agents 🤖🔬. We introduce MLGym and MLGym-Bench,….

0

120

0

RT @FortunatoMeire: We need your help! If @Khipu_AI has been important to your AI career development and you would like your story to be fe….

0

8

0

RT @_avichawla: To understand KV caching, we must know how LLMs output tokens. - Transformer produces hidden states for all tokens. - Hidd….

0

7

0

RT @yeewhye: Postdoctoral fellowships and research engineer positions available for an Oxford+Singapore project on uncertainty quantificati….

docs.google.com

Postdoctoral Fellow and Research Engineer Positions on Uncertainty Quantification in LLMs in Oxford and Singapore By scaling up data, compute and model size, large language models (LLMs) have gained...

0

17

0

RT @KempeLab: Submit to our New Frontiers in Associative Memories workshop @iclr_conf. New architectures & algorithms, memory-augmented LL….

openreview.net

Welcome to the OpenReview homepage for ICLR 2025 Workshop NFAM

0

14

0

RT @j_foerst: I am recruiting for a _fully funded_ (overseas or UK) Phd student to start in October 2025. All details in the post below, de….

0

6

0

RT @j_foerst: Dear reviewers, please engage. Dear ACs, please remind the reviewers to engage. Thank you everyone!.

0

6

0

RT @keenanisalive: We often think of an "equilibrium" as something standing still, like a scale in perfect balance. But many equilibria ar….

0

2K

0

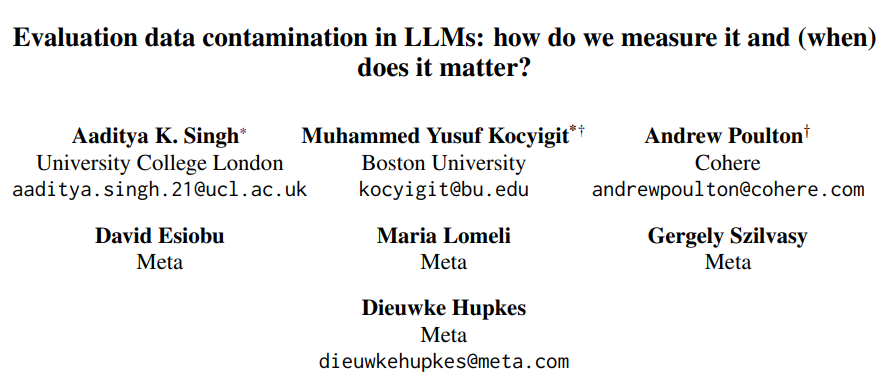

Check out our new paper about how to determine evaluation data contamination in LLMs training corpora. Great collab work with amazing people 🥳.

New deep-dive into evaluation data contamination 😍🤩. Curious how much contamination there really is in common LLM training corpora, how much that actually impacts benchmark scores and what is the best metric to evaluate that? Read our new preprint! .

1

2

14

RT @j_foerst: Doing a PhD in ML and tired of playing catch-up w arxiv and X? Catch yourself wondering what's next after LLMs run out of hum….

0

32

0

RT @naaclmeeting: 📢 NAACL needs Reviewers & Area Chairs! 📝. If you haven't received an invite for ARR Oct 2024 & want to contribute, sign….

0

26

0