Lambda

@LambdaAPI

Followers: 19K · Following: 2K · Media: 425 · Statuses: 2K

The Superintelligence Cloud

San Francisco, CA

Joined July 2012

Lambda has raised over $1.5B in equity to build superintelligence cloud infrastructure. TWG Global, USIT, and existing investors led the Series E round to position Lambda to execute on its mission to give everyone the power of superintelligence. One person, One GPU. Press

#NeurIPS2025: Our biggest year yet. Six accepted research papers, highest workshop attendance, and an incredible booth presence. Thank you to everyone who stopped by and shared your thoughts with us on what’s next in superintelligence. We can’t wait to see what you build in 2026.

Building superintelligence-scale AI factories takes more than just GPUs. Join Maxx Garrison and Johnson Eung in a webinar on Thursday, December 11th, at 10am PST, to learn how Lambda and @Supermicro solve the operational problems of modular, GPU-dense AI factories and validate

Welcome aboard, @TheZachMueller! We are beyond thrilled :)

Secret's out. I’m happy to announce that I’ve joined @LambdaAPI as Head of Developer Relations! I’ve been using Lambda for a number of years, and especially after how warm and welcoming they were in helping out with my course this year, it was a role that “Just Made Sense.”

Today, Heather Planishek joins Lambda as Chief Financial Officer. Most recently, she served as Chief Operating and Financial Officer at Tines, the intelligent workflow platform, and has been @LambdaAPI’s Audit Chair since July 2025. Heather brings deep company insight to our

businesswire.com

Lambda, the Superintelligence Cloud, today announced the appointment of Heather Planishek as Chief Financial Officer. Planishek brings deep experience scalin...

AI can draw a dog and a cat side by side, no problem. But ask it for “one golden retriever half-hidden behind another,” and you’ll get a four-legged blob. Why? Because most layout-to-image models are tested on scenes where objects don’t touch. Real photos don’t work that way. In

Day 3 at #NeurIPS: back to our research roots. NeurIPS is one of the few conferences still focused on real academic work, and that’s been Lambda’s home for the past twelve years. We spent the day meeting with founders who turn state-of-the-art research into products and

LLM alignment typically relies on large and expensive reward models. What if a simple metric could replace them? In a new #NeurIPS2025 paper, Lambda’s Amir Zadeh and @chuanli11 introduce BLEUBERI, which uses BLEU scores as the reward for instruction following:
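
BLEU scores a response by its clipped n-gram overlap with one or more reference answers, multiplied by a brevity penalty for outputs shorter than the references. The Python sketch below shows how such a score could serve as a scalar reward. It is an illustration under stated assumptions (whitespace tokenization, add-one smoothing on every n-gram order, a hypothetical `bleu_reward` helper), not the BLEUBERI paper's implementation.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, references, max_n=4):
    """Sentence-level BLEU in [0, 1] with add-one smoothing on every n-gram precision.

    `candidate` is a token list; `references` is a non-empty list of token lists.
    """
    if not candidate:
        return 0.0
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(candidate, n)
        # Clip each candidate n-gram by its largest count in any single reference.
        max_ref_counts = Counter()
        for ref in references:
            for gram, count in ngram_counts(ref, n).items():
                max_ref_counts[gram] = max(max_ref_counts[gram], count)
        clipped = sum(min(count, max_ref_counts[gram]) for gram, count in cand_counts.items())
        total = sum(cand_counts.values())
        # Add-one smoothing (an assumption) so one missing n-gram order does not zero the score.
        log_precision_sum += math.log((clipped + 1) / (total + 1))
    # Brevity penalty against the reference length closest to the candidate length.
    c = len(candidate)
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    # Geometric mean of the smoothed precisions, scaled by the brevity penalty.
    return brevity_penalty * math.exp(log_precision_sum / max_n)


def bleu_reward(response, references):
    """Hypothetical scalar reward: BLEU of a whitespace-tokenized response vs. references."""
    return sentence_bleu(response.split(), [ref.split() for ref in references])


refs = ["the cat sat on the mat"]
print(bleu_reward("the cat sat on the mat", refs))  # 1.0
print(bleu_reward("the cat is on the mat", refs) > bleu_reward("a dog ran in the park", refs))  # True
```

In a reinforcement-learning loop, this value would sit where a learned reward model's score would otherwise go. Established BLEU implementations such as sacreBLEU differ in tokenization and smoothing, so scores from this sketch will not match them exactly.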

AI can recognize objects, but it still struggles with simple spatial questions like “Is the water bottle on the left or right of the person?” or “Can the robot reach that?” One of our NeurIPS 2025 papers, co-authored by Lambda researcher @jianwen_xie, introduces SpatialReasoner

Day 2 at #NeurIPS2025 was all about builders talking shop. AI research teams stopped by the Lambda booth to trade notes on multimodal inference for superintelligence, building AI factories, and what reliable NVIDIA GB300 performance looks like when workloads hit production. Real

At the atomic scale, running millions of simulations on large-scale datasets is expensive. AI helps, but today’s models still spend most of their time performing heavy computations to ensure their predictions remain accurate regardless of how a molecule is rotated, using the

#NeurIPS2025 opened with a full slate of talks and demos asking the hard questions: multimodal reasoning, training at scale, and what it takes to build systems that behave more like software than static models. "We're from the AI community, building for the community. That's why

Achieve up to 10× inference speed and efficiency on Mixture of Experts models like DeepSeek-R1 with @nvidia Blackwell NVL72 systems on Lambda’s cloud: purpose-built for AI teams that need fast, efficient, and seamlessly orchestrated infrastructure at scale, and tightly integrated

blogs.nvidia.com

Kimi K2 Thinking, DeepSeek-R1, Mistral Large 3 and others run 10x faster on NVIDIA GB200 NVL72.

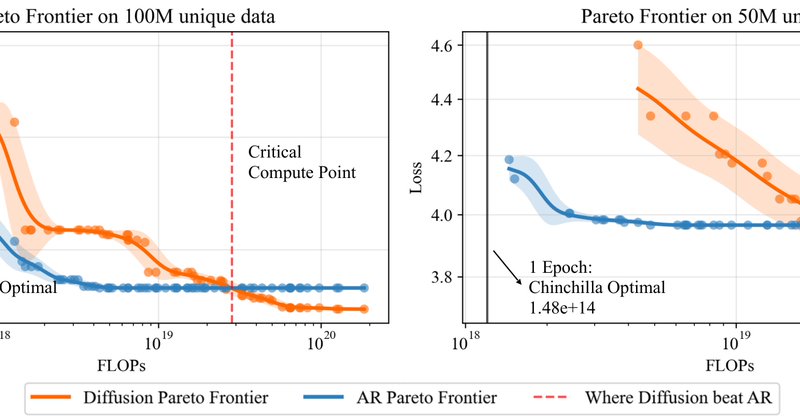

If your bottleneck is data rather than compute, you may want to rethink using standard autoregressive LLMs. In the latest NeurIPS paper co-authored by our very own Amir Zadeh, “Diffusion Beats Autoregressive in Data-Constrained Settings,” we show that masked diffusion models: - Train for

blog.ml.cmu.edu

Check out our new blog post on "Diffusion beats Autoregressive in Data-Constrained settings". The era of infinite internet data is ending. This research paper asks: What is the right generative...

Everyone knows multimodal models can generate text or images… but few talk about what it takes to bridge the two in a way that’s efficient, aligned, and scalable. One of our accepted papers, Bifrost-1 (co-authored by @chuanli11 and Amir Zadeh), tackles that problem head-on by

arxiv.org

There is growing interest in integrating high-fidelity visual synthesis capabilities into large language models (LLMs) without compromising their strong reasoning capabilities. Existing methods...

How can language models benefit from explicit reasoning steps rather than relying solely on implicit activations? Join us for a deep dive into Latent Thought Models (LTMs): https://t.co/A5vhVnC6qm

@jianwen_xie walks through how LTMs infer and refine compact latent thought

Ready to build the future of AI? Join our Multimodal Superintelligence Workshop on building next-generation multimodal models that observe, think, and act across multiple modalities -> https://t.co/0rOcJtyUIO Speakers: Amir Zadeh, @chuanli11, @minisounds, Jessica Nicholson,
