Lennart Luettgau

@LLuettgau

Followers

408

Following

1K

Media

38

Statuses

283

Research Scientist, AI Security, Societal Impacts @AISecurityInst. Cognitive neuroscientist by training.

Joined April 2019

Conversational AI is fast becoming a key information source for humans worldwide, including during election cycles. But what are the effects on users' epistemic health? 🧠 🚨Today we released new @AISecurityInst evidence that brings cautious optimism for LLMs vs internet search

6

27

110

This was a heroic team effort and a fun collaboration between political, behavioural and cognitive scientists and showcases the amazing research capabilities and resources @AISecurityInst has built in the last 2 years! @hannahrosekirk @KobiHackenburg @summerfieldlab

0

0

1

5/ Our findings indicate AI can serve as a valuable information resource for daily use, potentially without the widespread misinformation risks many experts have warned about. Read the full paper here:

1

0

0

4/ Our data shows AI does not increase belief in misinformation beyond regular internet searches – this held true even when we prompted models to be more persuasive or sycophantic. Similar patterns emerged for radical viewpoints, general political beliefs, and institutional trust

1

0

0

3/ Key finding #2: AI usage doesn't appear to amplify misinformation beliefs. Through randomised controlled experiments involving 2,800+ participants, we tracked belief changes for true vs. false political statements after AI interactions compared to traditional web searches.

1

0

0

2/ Key finding #1: AI has become a significant source for political insights. Our survey of nearly 2,500 UK respondents reveals that roughly 13% of eligible voters consulted AI for election-related information during the final week before the 2024 UK general election.

1

0

0

New preprint! Growing numbers of people turn to AI chatbots for information, sparking debates about their potential to mislead voters and shape public discourse. But what's the real impact of AI on political beliefs? Our latest research dives into this critical question 👇

1

2

10

🔎 People are increasingly using chatbots to seek out new information, raising concerns about how they could misinform voters or distort public opinion. But how is AI actually influencing real-world political beliefs? Our new study explores this question 👇

2

6

21

Apply here (application closes in one week): https://t.co/bVDKoLzvK9

job-boards.eu.greenhouse.io

0

0

0

My team at @AISecurityInst is hiring a Research Assistant to work on exciting Human Influence research projects! -6 month residency -Ideal for recent MSc or early PhD students in ML, AI, Psych, Cognitive/computer/data science (link in thread)

1

0

2

My team at @AISecurityInst is hiring a research assistant to work on Human Influence research! 🧠👾💻 -6 month residency w/ good salary -Ideal for recent MSc or early PhD students in ML, AI, Psych, Cognitive/computer/data sciences (job link below)

2

4

12

please apply for this: https://t.co/HxNRYFn7Se it's a strategy and delivery manager role (non-technical) in our "AI and Human Influence" team at AISI. Would suit someone who cares about AI policy, and wants to work in a fast-paced environment in the shadow of Big Ben

0

2

5

Today (w/ @UniofOxford @Stanford @MIT @LSEnews) we’re sharing the results of the largest AI persuasion experiments to date: 76k participants, 19 LLMs, 707 political issues. We examine “levers” of AI persuasion: model scale, post-training, prompting, personalization, & more 🧵

14

130

437

We're hiring a Senior Researcher for the Science of Evaluation team! We are an internal red-team, stress-testing the methods and evidence behind AISI’s evaluations. If you're sharp, methodologically rigorous, and want to shape research and policy, this role might be for you! 🧵

1

4

10

In a new paper, we examine recent claims that AI systems have been observed ‘scheming’, or making strategic attempts to mislead humans. We argue that to test these claims properly, more rigorous methods are needed.

4

25

85

Excited to share some recent work on using hierarchical Bayesian modeling to improve LLM evaluations! HiBayES is a flexible and robust statistical modeling framework that explicitly accounts for the hierarchical nature of benchmarks

1

0

6

Advanced AI systems require complex evaluations to measure abilities, but conventional analysis techniques often fall short. Introducing HiBayES: a flexible, robust statistical modelling framework that accounts for the nuances & hierarchical structure of advanced evaluations.

2

11

53

🚨 We are hiring in Berlin 💫 Pls RT 🙏 We @Zoe_Chi_Ngo @e_buchberger @LLuettgau are looking for a motivated research assistant to contribute to our ongoing study on inference and generalization in 8-14 year olds. Feel free to reach out to me directly.

1

2

3

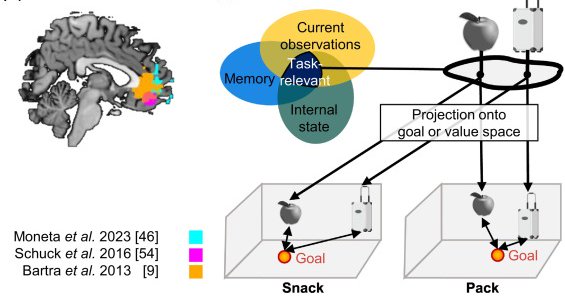

Excited to share our review, w. @ShanyGrossman (shared 1st!) and @nico_schuck where we discuss representational spaces in OFC/vmPFC and in deep RL models, featuring values, states and much more! https://t.co/4qfFoZlm6x. Fresh from the (virtual) print now in @TrendsNeuro, 1/4

cell.com

The orbitofrontal cortex (OFC) and ventromedial-prefrontal cortex (vmPFC) play a key role in decision-making and encode task states in addition to expected value. We review evidence suggesting a...

1

23

81