Salman Khan

@KhanSalmanH

Followers: 1K

Following: 2K

Media: 106

Statuses: 351

Faculty at MBZUAI. Past: Inception, ANU, Data61, NICTA, UWA.

Canberra, Australia

Joined February 2011

RT @Dr_HammadKhan: 🚨Do you have expertise in #RemoteSensing and #MachineLearning with a passion for #AgTech? Do you want to lead the develo…

RT @alex_lacoste_: [#ICCV2025] Our paper "GEOBench-VLM: Benchmarking Vision-Language Models for Geospatial Tasks" is accepted at ICCV 2025…

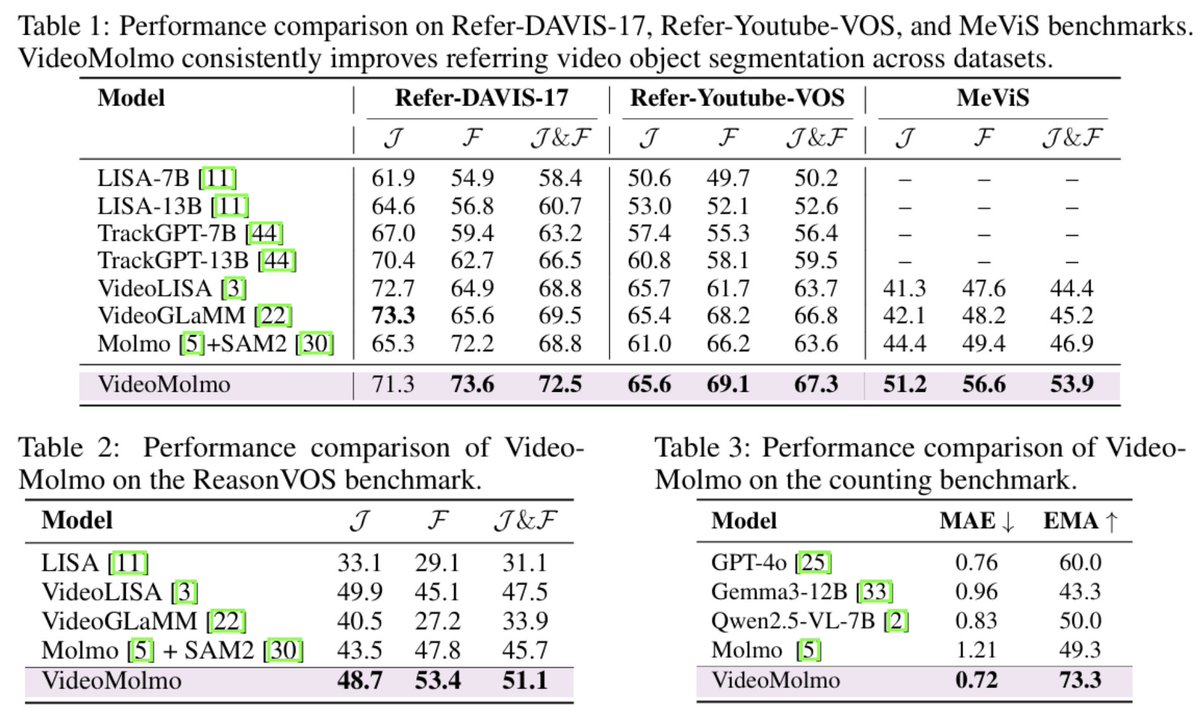

🔗 Paper: 🔬 Inference Code: 📌 Project Page: 💻 Training code coming soon! Joint work with: Ghazi Shazan Ahmad, Ahmed Heakl, Hanan Ghani, Abdelrahman Shaker, Zhiqiang Shen, Ranjay Krishna, Fahad Khan.

github.com: Official code of the paper "VideoMolmo: Spatio-Temporal Grounding meets Pointing" (mbzuai-oryx/VideoMolmo)

RT @SameeraRamasin1: In model parallel training, compressing the signal flow has been established as not very useful as they are too inform…

RT @PluralisHQ: We've reached a major milestone in fully decentralized training: for the first time, we've demonstrated that a large langua…

🚀 Introducing ThinkGeo: a benchmark for tool-augmented LLMs on real-world remote sensing tasks! 🌍🛰️ 436 tasks across key domains, 14 tools, and step-by-step evaluation of SOTA models. Open-source! 🔗 #GeospatialAI