琥珀青葉@LyCORIS

@KBlueleaf

Followers: 3K · Following: 21K · Media: 570 · Statuses: 3K

Undergraduate in Taiwan. Leader of LyCORIS

Taiwan

Joined May 2021

The paper of the LyCORIS project has been accepted by ICLR 2024!!!!!!!! "Navigating Text-To-Image Customization: From LyCORIS Fine-Tuning to Model Evaluation". My first ever paper, as an undergraduate student. arXiv here:

arxiv.org

Text-to-image generative models have garnered immense attention for their ability to produce high-fidelity images from text prompts. Among these, Stable Diffusion distinguishes itself as a leading...


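RT @kuromi_starking: Lately I've been seeing a lot of comments like this… "Japan has turned to the right, so I'm going to 'run' (emigrate) again." They all open with "I've been in Japan for more than ten years" and so on…. All I can say is, if you've really been in Japan for over ten years, do you all have the memory of a goldfish? How were foreigners treated back then? How are they treated now? Back then, if your major didn't match the company that hired you, you wouldn't get a work visa. ….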


I think the problem is that the UI/UX of the Gemini app is really, really bad; even AI Studio is far better. Gemini CLI is good, but if it had a GUI version with the same UX, it would be awesome as well. The model is solid, works well, and has reasonable pricing. But Google never made a.

Google DeepMind is winning, they just don't hype it.
> Participated in IMO.
> IMO officials verified the solution.
> Awarded Gold medal.
> Quietly dropped the post on a Monday morning.
At this point, they're not even bothered about competing. Also this version of Gemini is coming


RT @gaunernst: I can confirm that mma.sync.aligned.m16n8k32.row.col.kind::mxf8f6f4.block_scale.f32.e4m3.e4m3.f32.ue8m0 is faster than mma.s….


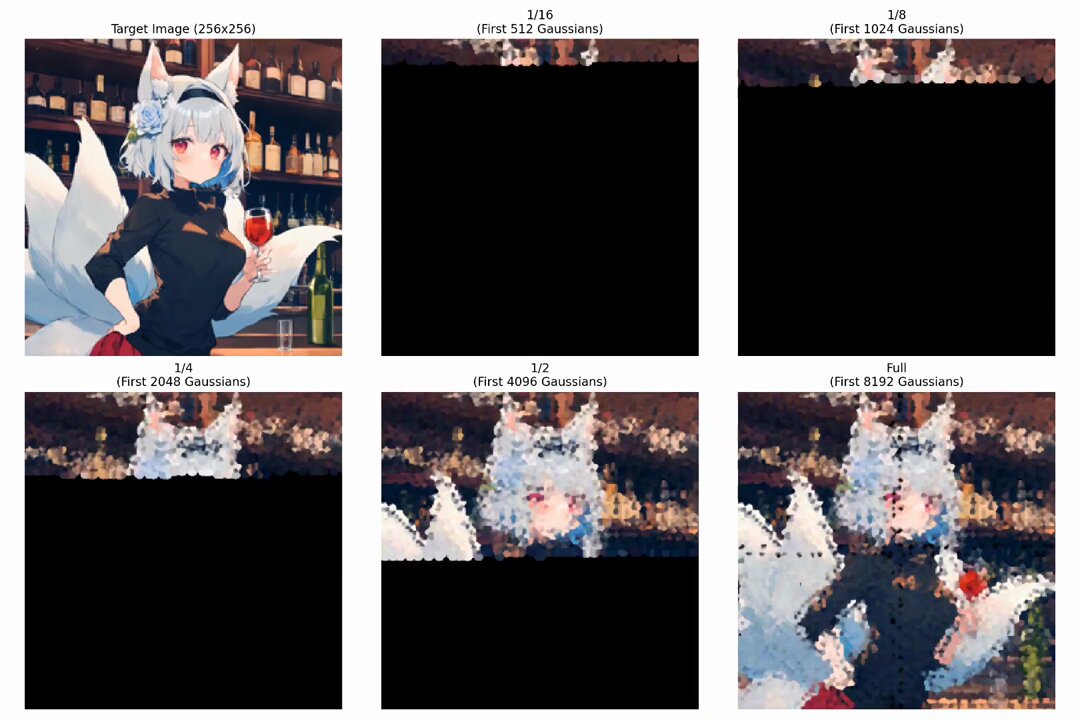



The kernel and the whole implementation are here. Remember to give it a star!!!

github.com

Image Gaussian Splatting. Contribute to KohakuBlueleaf/IGS development by creating an account on GitHub.

I wrote a Triton kernel for 2DGS, so I can fit 16384 Gaussians on a 256x256 image with basically no VRAM consumption (since the largest intermediate state is the output image). Also, here is the PoC, which trains a 256-token feature on one image, where each token is a 1024-dim vector. I
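Why the output image can be the largest intermediate is easier to see with a small NumPy sketch. This is not the IGS Triton kernel. It assumes simple additive (weighted-sum) blending of 2D Gaussians, which may differ from what IGS actually does, and the names `render_naive` and `render_streaming` are hypothetical. The naive renderer materializes an (N, H, W) weight tensor, so its memory grows with the number of Gaussians. The streaming renderer adds one Gaussian at a time into the output, so its full-size buffers are only the output image plus per-pixel temporaries for the current Gaussian, independent of N. A fused GPU kernel that sums each pixel's contributions in registers can also avoid those temporaries.

```python
import numpy as np

# Assumption: additive blending (out = sum_i w_i(p) * color_i), not necessarily IGS's scheme.


def pixel_grid(h, w):
    """(H, W, 2) array of pixel coordinates in (x, y) order."""
    ys, xs = np.mgrid[0:h, 0:w]
    return np.stack([xs, ys], axis=-1).astype(np.float64)


def render_naive(means, inv_covs, colors, h, w):
    """Reference renderer: builds an (N, H, W) weight tensor, so memory scales with N."""
    pix = pixel_grid(h, w)
    d = pix[None] - means[:, None, None, :]  # (N, H, W, 2) offsets to each Gaussian mean
    # Mahalanobis term d^T Sigma^{-1} d for every Gaussian/pixel pair
    m = np.einsum("nhwi,nij,nhwj->nhw", d, inv_covs, d)
    weights = np.exp(-0.5 * m)  # (N, H, W)
    return np.einsum("nhw,nc->hwc", weights, colors)


def render_streaming(means, inv_covs, colors, h, w):
    """Accumulates one Gaussian at a time; full-size buffers do not depend on N."""
    pix = pixel_grid(h, w)
    out = np.zeros((h, w, colors.shape[1]))
    for mu, inv_cov, c in zip(means, inv_covs, colors):
        d = pix - mu  # (H, W, 2) offsets for the current Gaussian only
        m = np.einsum("hwi,ij,hwj->hw", d, inv_cov, d)
        out += np.exp(-0.5 * m)[..., None] * c  # add this Gaussian's contribution
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, h, w = 64, 32, 32
    means = rng.uniform(0, 32, size=(n, 2))
    scales = rng.uniform(1.0, 4.0, size=(n, 2))
    # Axis-aligned covariances for simplicity; any SPD inverse covariance works.
    inv_covs = np.stack([np.diag(1.0 / s**2) for s in scales])
    colors = rng.uniform(0, 1, size=(n, 3))
    a = render_streaming(means, inv_covs, colors, h, w)
    b = render_naive(means, inv_covs, colors, h, w)
    print("max abs diff:", np.abs(a - b).max())
```

Both functions compute the same image; only the peak memory differs, which is the property the tweet describes.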
