Jiacheng Xu

@JiachengNLP

Followers

1K

Following

2K

Media

10

Statuses

118

Researcher at Salesforce Research. Prev PhD @UTAustin, advised by @gregd_nlp; ex Google and Microsoft intern. Account was recently hacked (June 2025).

Palo Alto

Joined December 2017

Amazing work! Love the emphasis on interpretable, scoped AI for science. VLM-as-a-judge feels like a promising direction — curious how it compares to human eval in these tasks.

Great to work on this benchmark with astronomers in our NSF-Simons CosmicAI institute! What I like about it: (1) focus on data processing & visualization, a "bite-sized" AI4Sci task (not automating all of research). (2) eval with VLM-as-a-judge (possible with strong, modern VLMs).

2

0

4

RT @thefernandocz: Anthropic just dropped a $3T nuke on every AI company. They've officially joined forces with Apple. Their plan? Steal…

0

429

0

RT @_jasonwei: There are traditionally two types of research: problem-driven research and method-driven research. As we’ve seen with large…

0

92

0

RT @xai: Meet the Grok 3 family, now on our API! Grok 3 Mini outperforms reasoning models at 5x lower cost, redefining cost-efficient inte…

0

848

0

RT @OpenAI: Introducing Sora, our text-to-video model. Sora can create videos of up to 60 seconds featuring highly detailed scenes, comple…

0

31K

0

RT @PhilippeLaban: New Preprint📣 "Beyond the Chat💬: Executable▶️ and Verifiable✅ Text-Editing with LLMs". Getting help from LLMs when editi…

0

16

0

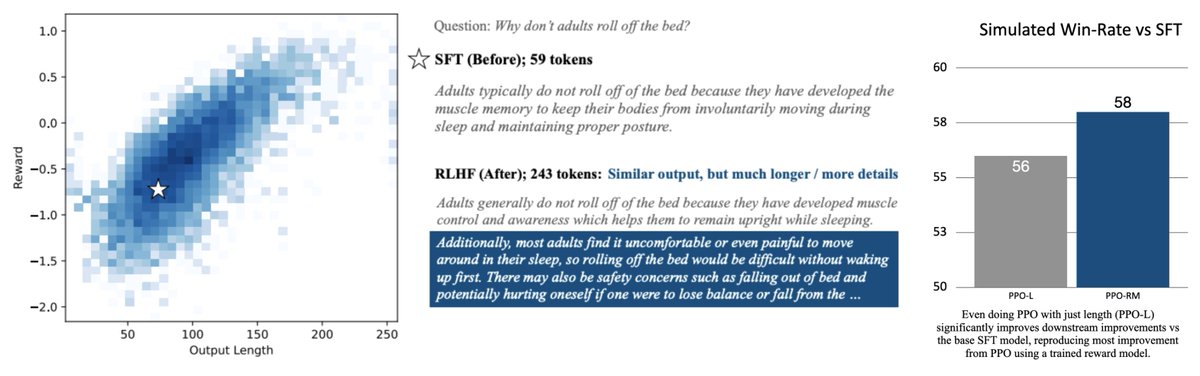

Time to build more length-calibrated/conscious models, datasets, and eval metrics? Any other factors playing a role like length here? A long way to go indeed; great work by @prasann_singhal!

Why does RLHF make outputs longer? w/ @tanyaagoyal @JiachengNLP @gregd_nlp
On 3 “helpfulness” settings:
- Reward models correlate strongly with length
- RLHF makes outputs longer
- *only* optimizing for length reproduces most RLHF gains
🧵 below:

0

0

9

RT @gregd_nlp: 📣 Today we launched an overhauled NLP course to 600 students in the online MS programs at UT Austin. 98 YouTube videos 🎥 +…

0

64

0

Amazing collaborators from UT Austin @prasann_singhal @tanyaagoyal @gregd_nlp and Salesforce AI Research @CaimingXiong @silviocinguetta @yingbozhou_ai @alexfabbri4 @PhilippeLaban Semih Yavuz, @iam_wkr.

0

0

5

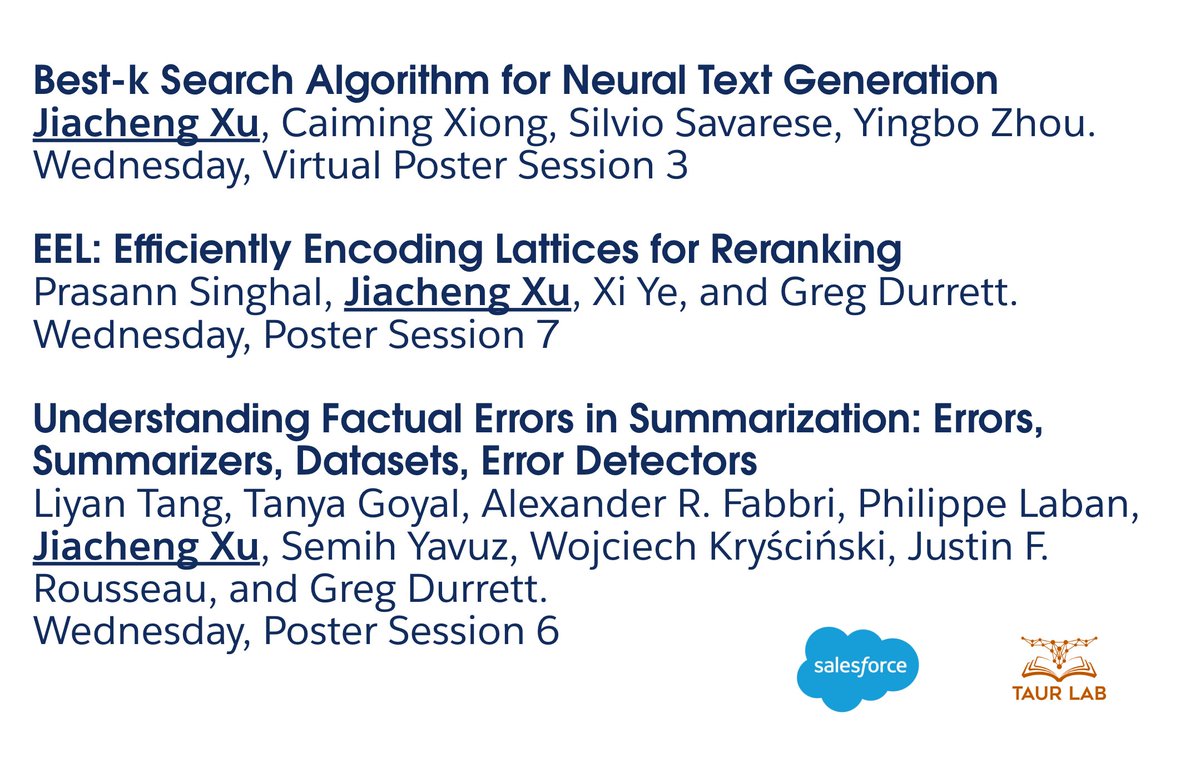

Thrilled to share that three of my papers on text generation and summarization have been accepted at #ACL2023NLP! 🎉 Unable to attend conference in person due to visa issues, but I'll be available on Twitter and Gathertown for discussions.

2

3

61

Do you want a text decoding algorithm that discovers diverse options and can be customized as you wish? Excited to share our recent ACL paper on text generation, and hope to see you at the Virtual Poster Session 3, tomorrow morning.

aclanthology.org

Jiacheng Xu, Caiming Xiong, Silvio Savarese, Yingbo Zhou. Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). 2023.

1

9

42

RT @jessyjli: Heading to #ACL2023NLP, excited to meet folks! We will be presenting work on discourse/QUD, summarization, and probing interg…

0

14

0

RT @jesse_vig: How can we teach models to simplify text using the revision history of Wikipedia articles? Check out our paper "SWiPE: A Da…

0

11

0

RT @tanyaagoyal: I will join Cornell CS @cs_cornell as an assistant professor in Fall 2024 after spending a year at @princeton_nlp working…

0

25

0

RT @prasann_singhal: New #ACL2023NLP paper! Reranking generation sets with transformer-based metrics can be slow. What if we could rerank…

0

18

0

RT @yasumasa_onoe: Knowledge in LMs can go out of date. Our #ACL2023NLP paper investigates teaching LMs about new entities via definitions,…

0

28

0

Drago was a great mentor, friend and thought leader in the community. I still remember the time I visited Yale, we met during conferences, and talked about research in summarization. A very kind and friendly person I'll always miss. R.I.P.

The #AI community, the #computerscience community, the @YaleSEAS community, and humanity have suddenly lost a remarkable person, @dragomir_radev: kind and brilliant, devoted to his family and friends. Gone too soon. A sad day @Yale @YINSedge @YaleCompsci #NLP2023

0

1

18