Ji-Ha

@Ji_Ha_Kim

Joined January 2024

@stupdi_didot I forgot to add that r+eps, sqrt(r^2+eps^2), and max(r,eps) correspond to the ell1, ell2, and ell-infinity norms of the 2D vector (r,eps), respectively. Perhaps this could help with analysis or with coming up with new ideas.
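A quick numerical sketch of that correspondence, assuming r = ||v||_2 >= 0 and eps > 0. The helper name `denominators` is illustrative and not from the thread.

```python
import math

def denominators(r, eps):
    """Return the three denominators as norms of the 2D vector (r, eps)."""
    l1 = abs(r) + abs(eps)          # r + eps          (ell1 norm)
    l2 = math.hypot(r, eps)         # sqrt(r^2+eps^2)  (ell2 norm)
    linf = max(abs(r), abs(eps))    # max(r, eps)      (ell-infinity norm)
    return l1, l2, linf

l1, l2, linf = denominators(3.0, 4.0)
# The usual norm ordering holds: linf <= l2 <= l1.
print(l1, l2, linf)
```

Since linf <= l2 <= l1 for any vector, the max(r,eps) denominator is the smallest of the three and r+eps the largest.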

@stupdi_didot This is a very interesting alternative: v/max(r,eps). The Jacobian is still bounded, and the scale-free property is preserved for r>eps. The only concern is that it is non-differentiable at r=eps, but you can use subgradients.
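A minimal sketch of v/max(r,eps), assuming r = ||v||_2 and plain Python lists for vectors. The function name `normalize_max` is hypothetical. It shows the two regimes: above eps the output is a unit vector regardless of the input's scale, and below eps the map is simply v/eps.

```python
import math

def normalize_max(v, eps):
    """Divide v by max(||v||_2, eps)."""
    r = math.sqrt(sum(x * x for x in v))
    d = max(r, eps)  # kink at r == eps: not differentiable there
    return [x / d for x in v]

eps = 1e-3
big = normalize_max([3.0, 4.0], eps)           # r = 5 > eps
big_scaled = normalize_max([30.0, 40.0], eps)  # same direction, 10x larger
small = normalize_max([3e-4, 4e-4], eps)       # r = 5e-4 < eps, so v / eps

print(big, big_scaled, small)
```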

@Ji_Ha_Kim don’t forget about max(v, eps). I’m still not sure why we’re never doing this.

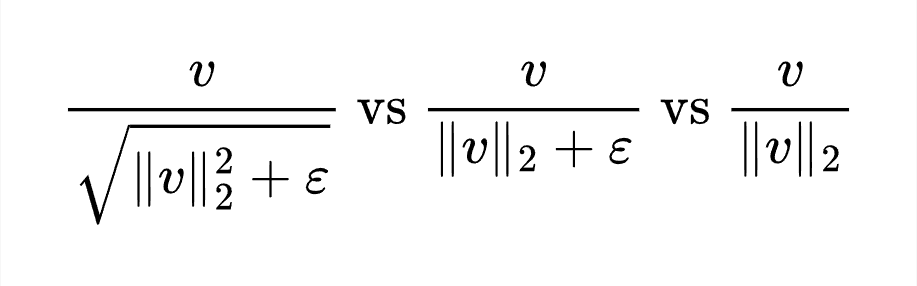

@stupdi_didot In short: Use unbiased normalization if there is no backprop (e.g. optimizers), to preserve the scale-free property. Use biased normalization with epsilon inside the sqrt if differentiating (e.g. layer/batch normalization), for numerical stability and a simple implementation. Avoid epsilon outside the sqrt.

@stupdi_didot Therefore, with the common ε_in^2=ε_out parameterization, the in(v|ε^2) normalization will be more numerically stable than out(v|ε) in the gradient.

I'll clarify a point on in vs out. As noted by @stupdi_didot: ||in(v|ε^2)||_2/√2 <= ||out(v|ε)||_2 <= ||in(v|ε^2)||_2. So with the typical ε_in^2=ε_out, we see that out(v|ε) shrinks more aggressively close to 0 than in(v|ε^2) (as seen in the vector field).
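The sandwich bound can be checked numerically. This sketch assumes the definitions in(v|a) = v/sqrt(||v||_2^2 + a) and out(v|ε) = v/(||v||_2 + ε), inferred from the notation in the thread, and works directly with r = ||v||_2. The function names `norm_in` and `norm_out` are illustrative.

```python
import math

def norm_in(r, eps_sq):
    """||in(v | eps_sq)||_2 for r = ||v||_2: epsilon inside the sqrt."""
    return r / math.sqrt(r * r + eps_sq)

def norm_out(r, eps):
    """||out(v | eps)||_2 for r = ||v||_2: epsilon outside the sqrt."""
    return r / (r + eps)

eps = 1e-3
for r in (1e-6, 1e-4, 1e-3, 1e-2, 1.0):
    upper = norm_in(r, eps ** 2)
    lower = upper / math.sqrt(2.0)
    mid = norm_out(r, eps)
    # Small tolerance because the lower bound is tight at r == eps.
    assert lower - 1e-12 <= mid <= upper + 1e-12
    print(f"r={r:g}  in/sqrt2={lower:.6f}  out={mid:.6f}  in={upper:.6f}")
```

The lower bound is attained at r = ε, where out gives 1/2 and in gives 1/√2.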

@Ji_Ha_Kim @bremen79 Is in(v|eps^2) not allowed? If it is, then the following holds: sqrt(2) sqrt(1+eps^2/||v||_2^2) >= 1+eps/||v||_2 >= sqrt(1+eps^2/||v||_2^2). I'm not familiar with how this ties into ML, but adding eps in the denominator is decent for managing some numerical overflows.
