Hayley Ross

@HayleyRossLing

Followers: 57 · Following: 19 · Media: 5 · Statuses: 10

PhD Student at @Harvard, working on computational semantics and LLM interpretability

Joined October 2010

The tool-calling decision-making dataset I developed during my internship at NVIDIA is now out! Catch me presenting it at NAACL with @ameyasm1154 or see the 🧵 for details.

Check out a new dataset, When2Call, for training and evaluating LLMs on decision making about "when (not) to call" functions! 📄 Paper: 🤗 HF Dataset Hub: 💾 GitHub: #NAACL2025

You can check out our paper on arXiv. We'll also have a poster at NENLP on April 11th, where you can come and ask questions 😊

arxiv.org

Recent work (Ross et al., 2025, 2024) has argued that the ability of humans and LLMs respectively to generalize to novel adjective-noun combinations shows that they each have access to a...

This suggests that humans and LLMs really do solve this task using composition, if we're willing to accept behavioral evidence as evidence of compositionality—see Kate McCurdy's excellent compositionality survey

aclanthology.org

Kate McCurdy, Paul Soulos, Paul Smolensky, Roland Fernandez, Jianfeng Gao. Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing. 2024.

New paper with @najoungkim and @TeaAnd_OrCoffee! We previously found that humans and LLMs generalize to novel adjective-noun inferences (e.g. "Is a homemade currency still a currency?"). Turns out analogy isn't enough to generalize, so it's likely evidence of composition! 🧵

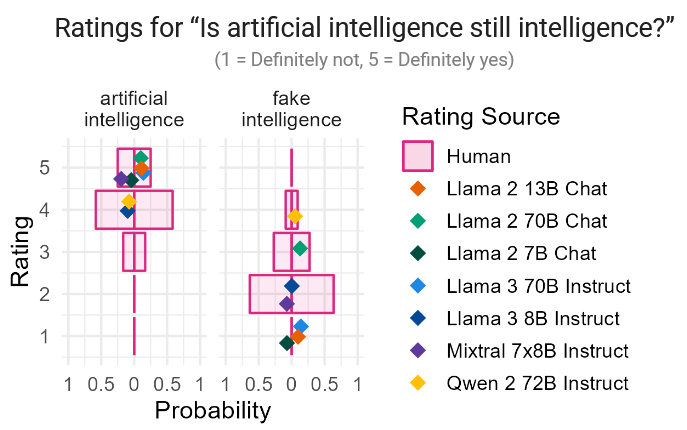

New paper with @najoungkim and @TeaAnd_OrCoffee testing if LLMs can draw adjective-noun inferences like humans! Turns out they often can, and even generalize to unseen combinations. But they're more optimistic about "artificial intelligence" than humans.

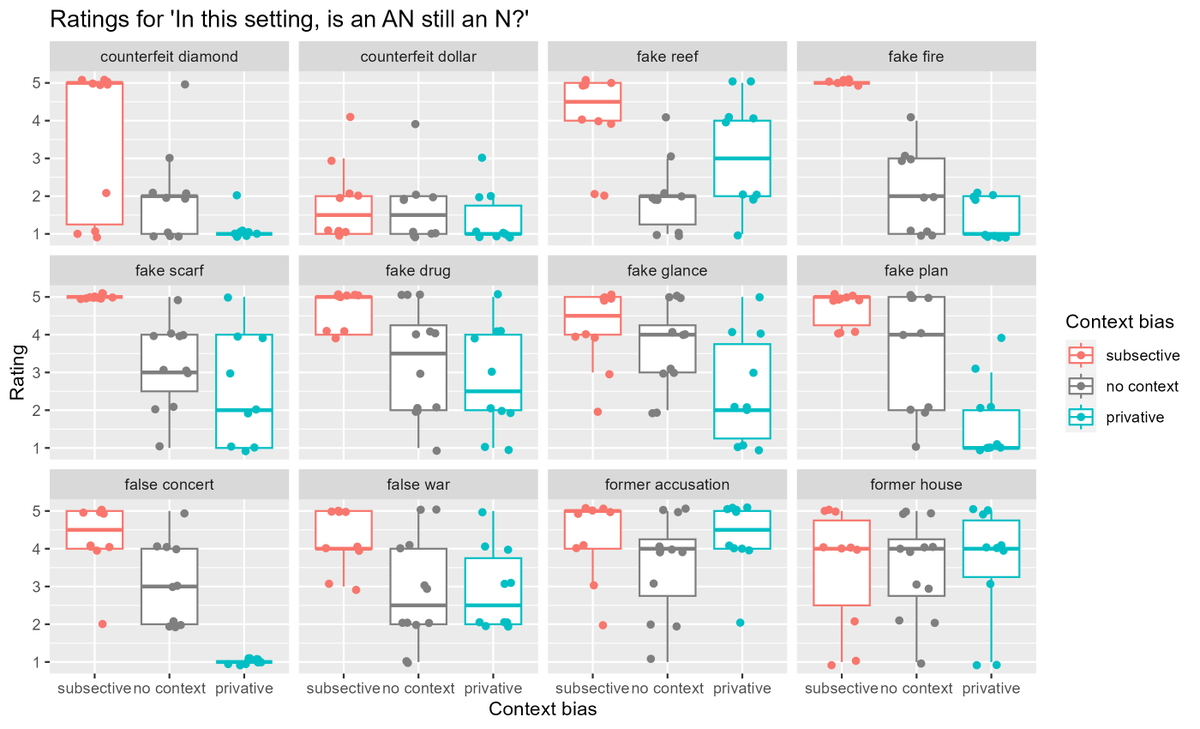

New preprint with @najoungkim & @TeaAnd_OrCoffee on fake reefs and other cases of (novel) adjective-noun composition: whether a fake N is an N or not depends on noun + context, but people handle novel AN pairs just fine 🙂 Stay tuned for results on LLMs!
