Harry Mayne

@HarryMayne5

Followers: 208

Following: 1K

Media: 34

Statuses: 140

PhD student @uniofoxford researching LLM explainability and building some evals along the way | Prev @Cambridge_Uni

Oxford

Joined March 2021

RT @_andreilupu: Theory of Mind (ToM) is crucial for next gen LLM Agents, yet current benchmarks suffer from multiple shortcomings. Enter….

RT @The_IGC: 📍#DataForPolicy.Today’s roundtable brought together global policymakers + researchers to tackle barriers to data use in gov.….

RT @a_jy_l: New Preprint! Did you know that steering vectors from one LM can be transferred and re-used in another LM? We argue this is bec….

RT @jabez_magomere: Excited to share that FinNLI, work from my @jpmorgan AI Research internship, will be presented at #NAACL2025 🎉 (Fri 11a….

RT @jabez_magomere: I really enjoyed working on this paper with such an amazing team — in the true spirit of Ubuntu, making sure AI models….

This project was inspired by @rao2z's ICML 2024 talk about reasoning in LLMs and the Mystery Blocksworld eval. And to those at frontier labs: go and saturate this 🤝

Work led by @mkhooja, Karolina Korgul and Simi Hellsten, with lots of help from Lingyi Yang, Vlad Neacs, @RyanOthKearns, @andrew_m_bean and @adam_mahdi_! Work done with @oiioxford, @UniofOxford, @UofGlasgow, @UKLingOlympiad and more.

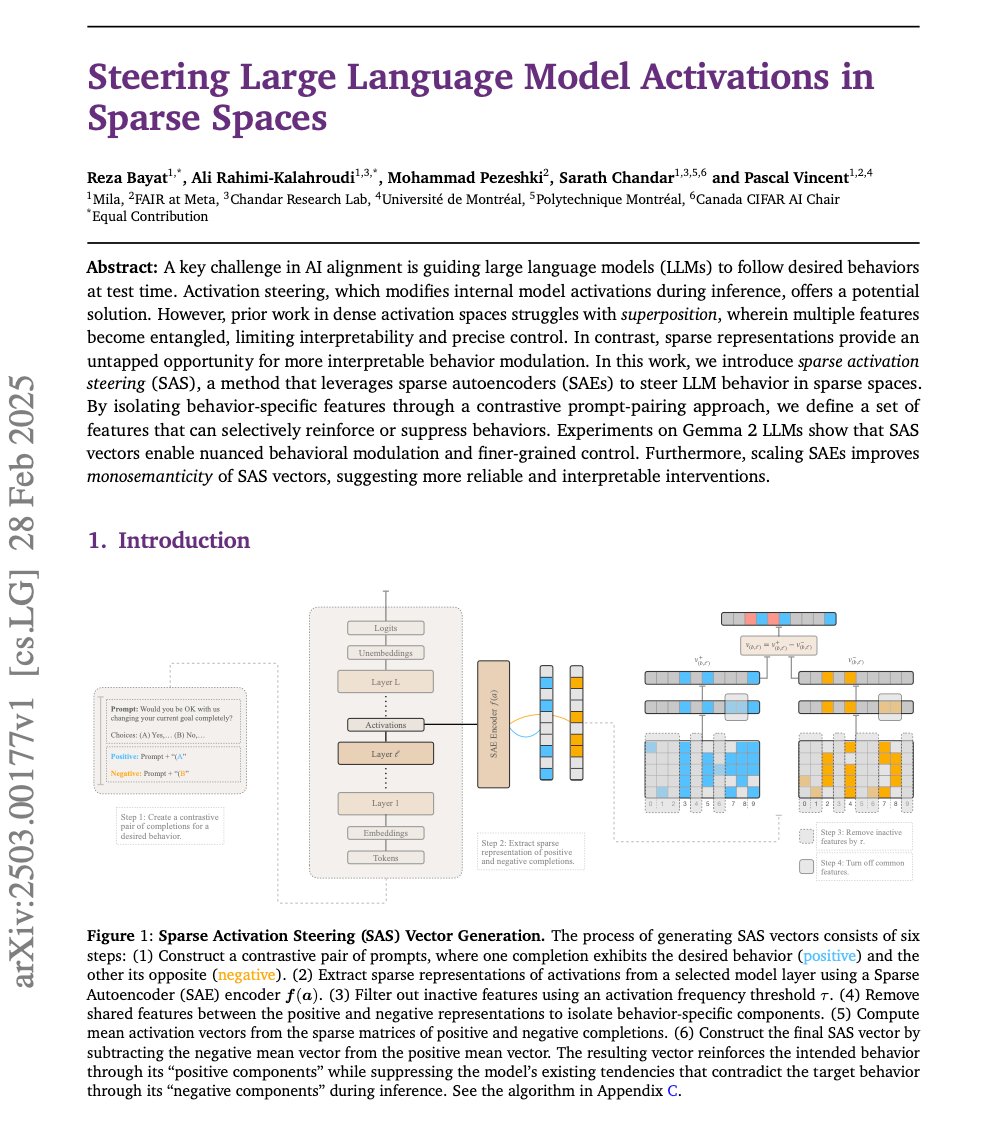

Building steering vectors directly in the SAE space seemed like a natural follow-up to our NeurIPS Interpretable AI paper last year. Pleased to see someone explore this further!

New Paper Alert! 📄 "It’s better to be sparse than to be dense" ✨ We explore how to steer LLMs (like Gemma-2 2B & 9B) by modifying their activations in sparse spaces, enabling more precise, interpretable control & improved monosemanticity with scaling. Let’s break it down! 🧵