Hannes Mehrer

@HannesMehrer

Followers: 623 · Following: 2K · Media: 10 · Statuses: 514

Comp Neuro postdoc at @martin_schrimpf's lab at @EPFL_en

Geneva, Switzerland

Joined April 2012

RT @yingtian80536: 🧠 NEW PREPRINT. Many-Two-One: Diverse Representations Across Visual Pathways Emerge from A Single Objective. https://t.co….

biorxiv.org

How the human brain supports diverse behaviours has been debated for decades. The canonical view divides visual processing into distinct "what" and "where/how" streams – however, their origin and...

RT @TimKietzmann: Introducing All-TNNs: Topographic deep neural networks that exhibit ventral-stream-like feature tuning and a better match….

Join us for talks on what role biophysical constraints can and should play in creating better models of brain and behavior. Speakers include: @PouyaBashivan, @TimKietzmann, @talia_konkle, @meenakshik93, Andrew Miri, and @Pieters_Tweet.

Announcement: Workshop at #CCN2025. 🧠 Modeling the Physical Brain: Spatial Organization & Biophysical Constraints. 🗓️ Monday, Aug 11 | 🕦 11:30–18:00 CET | 📍 Room A2.07. 🔗 Register: #NeuroAI @CogCompNeuro.

RT @BernsteinNeuro: 💼 Several new exciting #CompNeuro positions have been announced in the last few days! Find 14 open positions with upco….

RT @JeanRemiKing: 🔴 Our `Brain and AI` team at @MetaAI has a new Research Engineer position in Paris to work on infrastructure and scaling….

metacareers.com

RT @bkhmsi: Excited to be at #NAACL2025 in Albuquerque! I’ll be presenting our paper “The LLM Language Network” as an Oral tomorrow at 2:00….

4. Which data the models were trained on has basically no effect, with one exception: when models are trained on images without faces, face regions are not well predicted. code: paper:

github.com

Official code for the ICLR 2025 paper "One Hundred Neural Networks and Brains Watching Videos: Lessons from Alignment" - SergeantChris/hundred_models_brains

Also from Amsterdam (Christina Sartzetaki @sargechris, Gemma Roig, Cees G.M. Snoek, Iris Groen @iris_groen): using RSA, 100 models are evaluated on the BOLD Moments Dataset (data available on OpenNeuro).

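For readers unfamiliar with RSA, here is a minimal sketch of how one model can be scored against brain data. It assumes 1 − Pearson correlation as the dissimilarity measure and a Spearman correlation between the two RDMs; these are common defaults, not necessarily the choices made in the paper, and the function names are hypothetical.

```python
import numpy as np

def rdm(responses: np.ndarray) -> np.ndarray:
    """Representational dissimilarity matrix (assumed metric: 1 - Pearson r)
    between the response patterns of every pair of stimuli (rows = stimuli)."""
    return 1.0 - np.corrcoef(responses)

def upper_triangle(m: np.ndarray) -> np.ndarray:
    # An RDM is symmetric with a zero diagonal, so only the
    # off-diagonal upper triangle carries information.
    i, j = np.triu_indices(m.shape[0], k=1)
    return m[i, j]

def rank(x: np.ndarray) -> np.ndarray:
    # Plain ranking without tie averaging; adequate for continuous values.
    r = np.empty(len(x))
    r[np.argsort(x)] = np.arange(len(x))
    return r

def rsa_score(model_feats: np.ndarray, brain_resps: np.ndarray) -> float:
    """Spearman correlation between the model RDM and the brain RDM.
    Both inputs must list the same stimuli in the same row order."""
    a = rank(upper_triangle(rdm(model_feats)))
    b = rank(upper_triangle(rdm(brain_resps)))
    return float(np.corrcoef(a, b)[0, 1])

# Usage with synthetic data: 20 stimuli x 50 voxels (illustrative only).
rng = np.random.default_rng(0)
brain = rng.normal(size=(20, 50))
model = 3 * brain + 1  # same geometry up to scale and offset
print(rsa_score(model, brain))
```

Because only the RDMs are compared, the model and the brain data may have different numbers of features (units vs. voxels); what matters is that rows refer to the same stimuli.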

For great visualizations, check out their initial thread: code: paper:

github.com

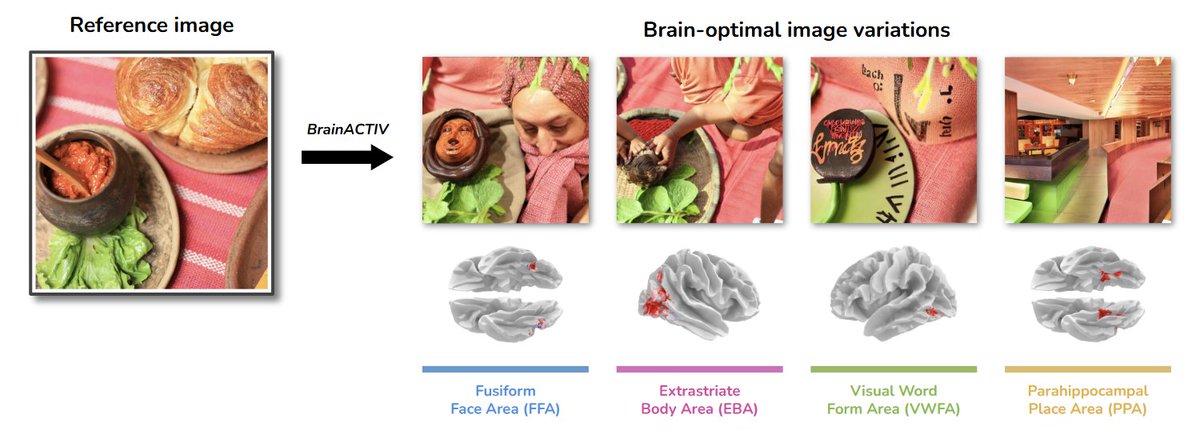

[ICLR 2025] BrainACTIV: Identifying visuo-semantic properties driving cortical selectivity using diffusion-based image manipulation - diegogcerdas/BrainACTIV

✨ Our work on BrainACTIV has been accepted at ICLR 2025! ✨ Brain Activation Control Through Image Variation (BrainACTIV) is an fMRI-data-driven method for manipulating a reference image to enhance/decrease activity in the visual cortex 🧠 using pretrained diffusion models 🤖. (1/5)

uses CLIP latents fit to fMRI data (#NSD) to optimize features of a given image so as to up- or down-regulate activity in targeted ROIs. Not yet on the poster, but I believe they are already working on it: model evaluation with neural data is coming soon.

Similar to the @PouyaBashivan 2019 paper on neural population control, a team from Amsterdam (Diego Cerdas @diego_gcerdas, Christina Sartzetaki @sargechris, Magnus Petersen @Omorfiamorphism, Pascal Mettes @PascalMettes, Iris Groen @iris_groen)

science.org

A deep artificial neural network can model primate vision.

Work from: Amir Ozhan Dehghani @great_caster, Xinyu Qian, Asa Farahani @Asa_Farahani, Pouya Bashivan @PouyaBashivan. code: paper:

openreview.net

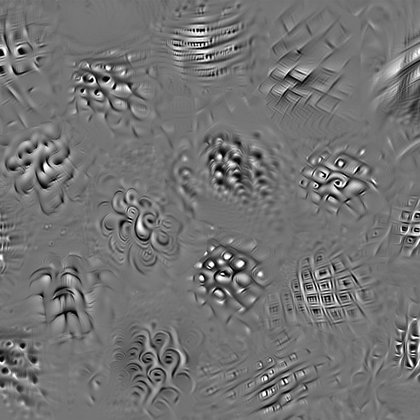

In the primate neocortex, neurons with similar function are often found to be spatially close. Kohonen's self-organizing map (SOM) has been one of the most influential approaches for simulating...

Initial TopoNets thread:

Very proud to share our @iclr_conf paper: TopoNets! High-performing vision and language models with brain-like topography! Expertly led by grad student @mayukh091 and @MainakDeb19! A brief thread.

The authors (Mayukh Deb @mayukh091 and Mainak Deb @MainakDeb19 from Ratan Murty's @apurvaratan group) show how this yields smoothly varying maps in language models, and also in models of other modalities, e.g., vision. code: paper:

arxiv.org

Neurons in the brain are organized such that nearby cells tend to share similar functions. AI models lack this organization, and past efforts to introduce topography have often led to trade-offs...

Thanks again to the whole team for making this possible: @neil_rathi, @bkhmsi, @NeuroTaha, @nmblauch, @martin_schrimpf.
