Safer, Built by Thorn

@GetSaferio

Followers: 605 · Following: 61 · Media: 112 · Statuses: 211

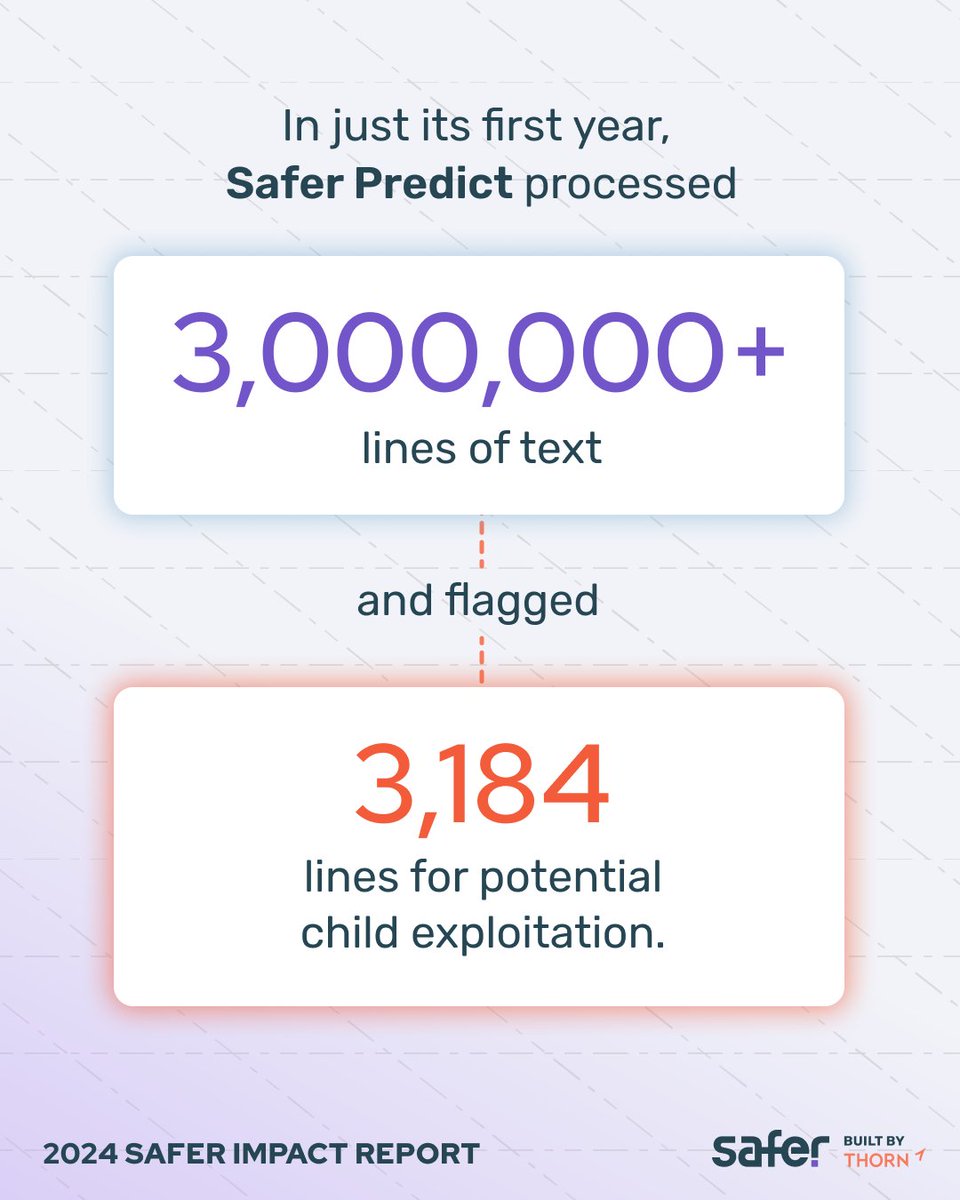

With Safer, @Thorn is equipping content-hosting platforms with industry-leading tools for proactive detection of child sexual abuse material #csam.

California

Joined May 2020

AI is creating new risks for digital platforms, including a rise in explicit deepfake images of minors. In her interview with @pbsnewshour, @thorn's Melissa Stroebel addressed this growing risk and what tech platforms must do now to respond.

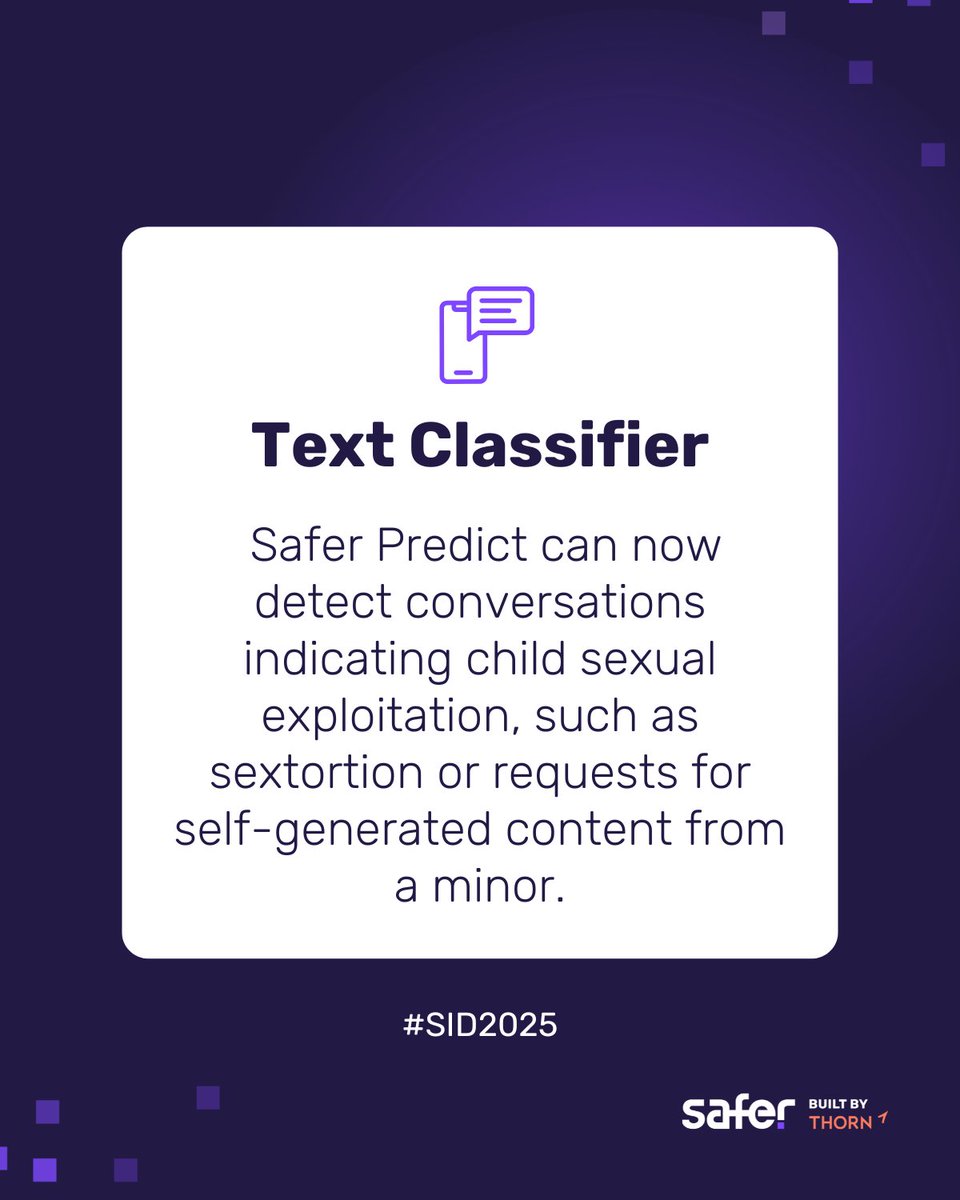

Boundaries blur quickly online. Trust builds fast. What starts casual can quickly turn coercive. For Trust & Safety teams, this moment matters. It’s time to rethink how platforms define risk and design safety. Find out what you can do:

safer.io

Explore new data on online child sexual exploitation and the evolving risks youth face.

🚨Thorn’s latest research shows how today’s youth are being offered money, social currency, material goods, and even in-game rewards in exchange for sexual interactions online. Learn more about the research:

safer.io

Explore new data on online child sexual exploitation and the evolving risks youth face.

Our partnership with @GIPHY is making a real impact in the fight against child sexual abuse material (CSAM). The results: ✅ 400% increase in CSAM detected and deleted since 2021. Dive into the GIPHY case study:

Patricia @Cartes went from dreaming of a career as a translator to shaping #onlinesafety policies at some of the biggest tech companies in the world. On Safe Space with John Starr, she opens up about T&S work and why she’s hopeful about the future:

From #socialwork to social networks, Jerrel Peterson shares his unexpected journey into Trust & Safety—and why soft skills (and skepticism) matter just as much as technical ones. 🎧 Listen to the full episode:

Since implementing Safer's hash matching service and CSAM classifier in 2021, @GIPHY has:
- Detected and deleted 400% more CSAM files.
- Reduced confirmed reports of CSAM through its reporting tools to just one user report.
Case Study:

safer.io

GIPHY proactively detects CSAM with Safer to deliver on its promise of being a source for content that makes conversations more positive.

Big news! Our updated site is live, and we’re making it easier than ever to learn how Safer’s #trust & #safety solutions can help protect your platform. The fight for a #saferinternet starts with the right tools. Watch the demo today:

Trust is the foundation of every online platform. This #SaferInternetDay, we ask that you share our mission to keep digital spaces secure and trusted. Together, we can make a safer internet for everyone. #SID2025

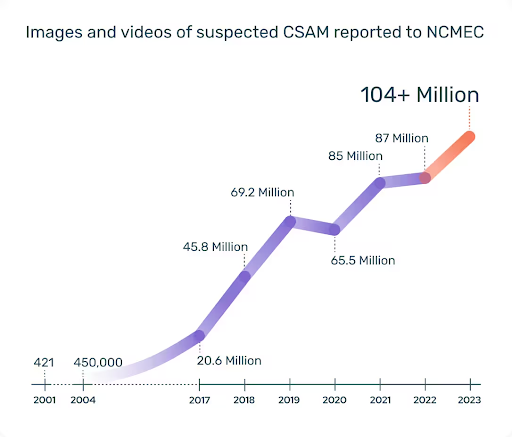

#AI-generated child sexual abuse material (AIG-CSAM) is a rapidly growing threat, with real impact on victims, platform safety, and the child safety ecosystem. Learn more about keeping your platform secure from emerging threats:

get.safer.io

We’re committed to providing resources and solutions to help content-hosting platforms thwart the spread of child sexual abuse material and child sexual exploitation at scale.

We’re so proud and grateful to be selected as one of @EverestGroup's “Content Moderation Technology Trailblazers.” A heartfelt thank you to our team and partners who believe in our mission and work tirelessly to make this vision a reality. We share this honor with you.
