Evidently AI

@EvidentlyAI

Followers

3K

Following

1K

Media

521

Statuses

2K

Open source ML and LLM evaluation 📊 , testing 🚦and monitoring 📈 GitHub: https://t.co/37H9bfnYj6 Discord: https://t.co/ElZ9RlroUa

Joined February 2020

3️⃣ 2️⃣ 1️⃣ Our free course on LLM evaluations for AI product teams starts today! 🎥 7 days of byte-sized videos into your inbox ⭐️ Certificate upon completion 👩💻 No coding skills required 👩🎓500+ students have signed up You can still join the course👇 https://t.co/Go2bNYJXCR

1

1

6

📌 In case you missed it ⚖️ How to build LLM judges that mirror human judgment? Our tutorial shows how to design, test, and calibrate an evaluator for evaluating the quality of LLM-generated code reviews. You can adapt the example for your use case: https://t.co/G1vVvBrXeg

0

0

0

💬 Writing good LLM prompts is tedious. Join us for a webinar on automated prompt optimization: 🐣 Intro to prompt optimization ✅ How to improve prompts with Evidently Open-source 💻 Live demo Thanks @DataTalksClub for the invite! Register here 👇 https://t.co/ajSePLx59i

luma.com

Improving LLM prompts using data-driven feedback optimization - Mikhail Sveshnikov Outline: Overview of prompt optimization challenges and common approaches…

0

1

3

📌 In case you missed it 7 questions about LLM judges! We answered some of the most common questions we get about how LLM judges work and how to use them effectively 👇 https://t.co/LnCc8TyCDZ

evidentlyai.com

LLM-as-a-judge is a popular approach to testing and evaluating AI systems. We answered some of the most common questions about how LLM judges work and how to use them effectively.

0

0

0

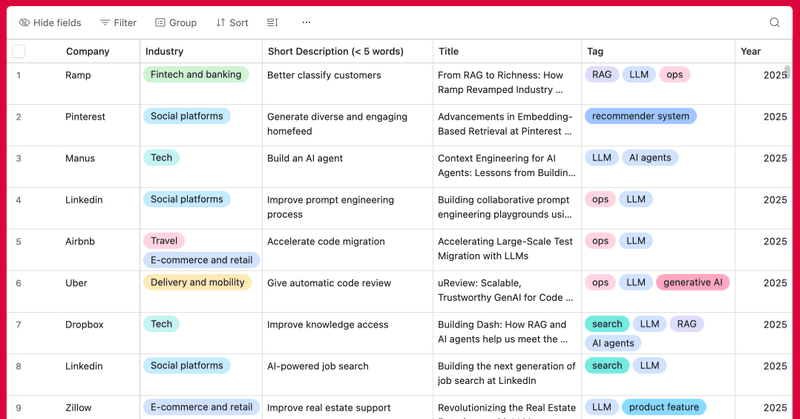

A Friday ML use case 📕 📚 From the database of 650 ML & LLM systems: https://t.co/jJoUj6MfFZ How Booking built an AI agent that assists partners by automatically suggesting a relevant response to each guest inquiry. https://t.co/isWy5be7iu

booking.ai

Authors: Ozan Sonmez, Bjorn Burscher, Klaus Schaefers, Basak Eskili

0

0

1

10 AI risks every team should be testing for! ⚠️ Top risks when building AI products ❌ How to mitigate them ✍️ Common AI risk assessment frameworks ✅ How to set up a continuous AI testing workflow Check whether you test for all the critical risks: https://t.co/geKkIALIqq

0

2

3

🕶 Detecting and reducing scheming in AI models AI models can “scheme” – act helpful while secretly pursuing other goals. Research from OpenAI and Apollo suggests “deliberate alignment” as a new approach to reducing covert actions. Read the paper 👇 https://t.co/qQleAMICJ5

openai.com

Together with Apollo Research, we developed evaluations for hidden misalignment (“scheming”) and found behaviors consistent with scheming in controlled tests across frontier models. We share examples...

0

0

0

📌 In case you missed it 7 agentic AI examples and use cases We explore seven agentic AI examples and use cases in the real world – from transaction analysis to e-commerce recommendations to code review 👇 https://t.co/3J48HOhKYd

evidentlyai.com

In this blog, we will explore seven agentic AI examples and use cases in the real world — from transaction analysis to e-commerce recommendations to code review.

0

0

0

A Friday ML use case 📕 📚 From the database of 650 ML & LLM systems: https://t.co/jJoUj6MfFZ How LinkedIn engineered its Hiring Assistant: the architecture, design choices, and lessons learned from building an agentic product. https://t.co/hnA8O4SNrM

linkedin.com

1

1

1

🚀 LLM tracing and dataset management are now live in Evidently open-source! The new release unlocks previously closed features: 🔡 Data storage backend ✅ Raw dataset management and viewer 🐾 LLM tracing storage and viewer Try it now 👇 https://t.co/xMnU1xHb7O

1

2

3

☠️ How LLMs could be insider threats Anthropic stress-tested 16 LLMs and identified potentially risky agentic behaviors. Turns out, LLMs can blackmail and leak sensitive info as a means to avoid replacement or achieve their goals. Read the paper 👇 https://t.co/JeLFbbmqSP

anthropic.com

New research on simulated blackmail, industrial espionage, and other misaligned behaviors in LLMs

0

0

0

📌 In case you missed it Gen AI use cases in 2025: learnings from 650 examples. We highlighted some new patterns of how top companies apply Gen AI based on a database of real-world AI and ML use cases we’ve been curating 👇 https://t.co/WfL237WONA

evidentlyai.com

Since 2023, we've been curating a database of real-world AI and ML use cases. Here is what we've learned from 650+ examples from top companies.

0

0

0

A Friday ML use case 📕 📚 From the database of 650 ML & LLM systems: https://t.co/jJoUj6MfFZ How Thomson Reuters uses RAG to enhance customer service: support execs can retrieve relevant info from a curated database through a conversational interface. https://t.co/vnmboQoZzz

medium.com

High quality customer support is critical to business success. In this article, we’ll explain how we employed an AI-powered solution…

0

0

0

⚖️ How to align LLM judge with human labels: new tutorial! We break down the process of designing, testing, and calibrating LLM evaluators. We also show how to create an LLM judge for evaluating the quality of LLM-generated code reviews👇 https://t.co/G1vVvBrXeg

1

3

4

🦄 Why language models hallucinate OpenAI’s research suggests that standard training and evaluation reward guessing over acknowledging uncertainty. Read the paper: https://t.co/9znwcKbcL6

openai.com

OpenAI’s new research explains why language models hallucinate. The findings show how improved evaluations can enhance AI reliability, honesty, and safety.

0

0

0

📌 In case you missed it 25 AI benchmarks: examples of AI models evaluation A brief explainer of what AI benchmarks are and why we need them, with 25 examples of common AI benchmarks for reasoning, conversation abilities, coding, RAG, and tool use 👇 https://t.co/xPonNn2CN4

evidentlyai.com

In this blog, we’ll explore AI benchmarks and why we need them. We’ll also provide 25 examples of widely used AI benchmarks for reasoning and language understanding, conversation abilities, coding,...

0

1

2

A Friday ML use case 📕 📚 From the database of 650 ML & LLM systems: https://t.co/jJoUj6MfFZ How Grab combines vector similarity search with LLMs to enhance the relevance and accuracy of search results for complex queries. https://t.co/FBPXG5cLNw

engineering.grab.com

Vector similarity search has revolutionised data retrieval, particularly in the context of Retrieval-Augmented Generation in conjunction with advanced Large Language Models (LLMs). However, it...

0

0

0

❓ 7 questions about LLM judges! We answered some of the most common questions we get about how LLM judges work and how to use them effectively 👇 https://t.co/LnCc8TyCDZ

0

0

0

📌 In case you missed it 8 AI hallucinations examples 🦄 We put together eight examples of real-world AI hallucinations – from a transcription tool fabricating texts to citing made-up company policies. Explore the examples 👇 https://t.co/Mz8QQhoy7C

evidentlyai.com

AI hallucinations come in different forms: from giving factually incorrect responses to inventing nonexistent product features or even people. We compiled eight real-life AI hallucination examples.

1

2

3

📊 What's your go-to MLOps stack? The State of MLOps Survey is live – and we’re excited to see the first results, where Evidently is the most popular tool for ML monitoring 🔥 Kudos to @AxSaucedo for this insightful research! You can still vote here 👇 https://t.co/Rsv1dMgDnd

0

1

1

A Friday ML use case 📕 📚 From the database of 650 ML & LLM systems: https://t.co/jJoUj6MfFZ How Instacart helps users find new products by incorporating LLMs into the search stack to generate discovery-oriented content. https://t.co/kFtDrOtHzu

tech.instacart.com

Authors: Taesik Na, Yuanzheng Zhu, Vinesh Gudla, Jeff Wu, Tejaswi Tenneti Key contributors: Akshay Nair, Benwen Sun, Chakshu Ahuja, Jesse…

0

0

0