Ehsan Imani

@EhsanImanii

Followers

1K

Following

4K

Media

3

Statuses

204

RecSys/Post-Training @Spotify | RLAI grad

Toronto, Ontario

Joined November 2018

Introducing Grok 4.1, a frontier model that sets a new standard for conversational intelligence, emotional understanding, and real-world helpfulness. Grok 4.1 is available for free on https://t.co/AnXpIEOPEb, https://t.co/53pltyq3a4 and our mobile apps. https://t.co/Cdmv5CqSrb

x.ai

Grok 4.1 is now available to all users on grok.com, 𝕏, and the iOS and Android apps. It is rolling out immediately in Auto mode and can be selected explicitly as “Grok 4.1” in the model picker.

2K

2K

13K

Very excited that our AlphaProof paper is finally out! It's the final thing I worked on at DeepMind, very satisfying to be able to share the full details now - very fun project and awesome team! https://t.co/OuWDemzAt4

19

101

1K

I'm looking for a student researcher to join me at @GoogleDeepMind Montreal to work on getting the best generative AI into the hands of the best creative people. Your work will push models past their current frontiers and directly benefit creative engagements. Are you

22

82

637

Come work with me on OLMo! https://t.co/YiWhZtrcvz

job-boards.greenhouse.io

Seattle, WA

We're starting to hire for our 2026 Olmo interns! Looking for excellent students to do research to help build our best models (primarily enrolled in Ph.D. with experience or interest in any area of the language modeling pipeline).

1

4

98

After 7 years at FAIR, I've been affected by the recent AI layoffs. If you are interested in robotics learning, let's chat :)

61

54

1K

Recommendation system with AI will be a complete game changer. This is nothing like traditional algorithms that recommend based on heuristics. @grok will read every post, understand you even better than yourself, and recommend contents you like. If your content is genuinely

132

62

912

Wish to build scaling laws for RL but not sure how to scale? Or what scales? Or would RL even scale predictably? We introduce: The Art of Scaling Reinforcement Learning Compute for LLMs

9

103

553

🤔Can we train RL on LLMs with extremely stale data? 🚀Our latest study says YES! Stale data can be as informative as on-policy data, unlocking more scalable, efficient asynchronous RL for LLMs. We introduce M2PO, an off-policy RL algorithm that keeps training stable and

4

41

228

To the degree that people are seeing improvements in their feed, it is not due to the actions of specific individuals changing heuristics, but rather increasing use of Grok and other AI tools. We will post the updated algorithm, including model weights, later this week. You

The improvement in the X feed over the past year is incredible. The X team has finally got to the bottom of it and wiped out spam & engagement bait, which is now extremely rare. Can’t even recall the last time a low-quality post showed up. Huge credit to the X team... these changes are truly

48K

10K

87K

More on LLMs, RL, and the bitter lesson, on the Derby Mill podcast.

Are LLMs Bitter Lesson pilled? @RichardSSutton says "no" @m_sendhil @suzannegildert @shulgan

https://t.co/HKN9BNYg24

7

18

238

📣 Webscale-RL: Automated Data Pipeline for Scaling RL Data to Pretraining Levels 📣 RL for LLMs faces a critical data bottleneck: existing RL datasets are <10B tokens while pretraining uses >1T tokens. Our Webscale-RL pipeline solves this by automatically converting pretraining

1

7

36

(1/n) Neural networks trained with Adam record a map of their learning journey—the optimizer's second moments (exp_avg_sq). These approximate the Hessian (curvature) at training's end. We used this insight to uncover why model merging works & built stronger merging methods 🧵

3

18

59

At @ChandarLab, we are happy to announce the third edition of our assistance program to provide feedback for members of communities underrepresented in AI who want to apply to high-profile graduate programs. Want feedback? Details: https://t.co/0K6AA4gV0U. Deadline: Nov 01! cc:

2

28

86

when you give up on this nebulous idea and illusion of prestige, you will finally find peace and freedom. submit to TMLR and JMLR.

10

30

549

We just released a model that’s both fast and smart, beating all models of the same size by a huge margin. It’s available for free. Give it a try!

Introducing Grok 4 Fast, a multimodal reasoning model with a 2M context window that sets a new standard for cost-efficient intelligence. Available for free on https://t.co/AnXpIEOhOD, https://t.co/53pltypvkw, iOS and Android apps, and OpenRouter. https://t.co/3YZ1yVwueV

35

11

366

For anyone worried their LLM might be making stuff up, we made a budget‐friendly truth serum (semantic entropy + Bayesian). See for yourself: https://t.co/gq8oFP5Eqr Paper:

0

7

4

We're hiring Research Scientists in Tech Research @RiotGames! If you're passionate about shaping the future of games with AI and have research experience in RL, IL, or generative methods, we'd love to hear from you. DM me if interested.

1

3

20

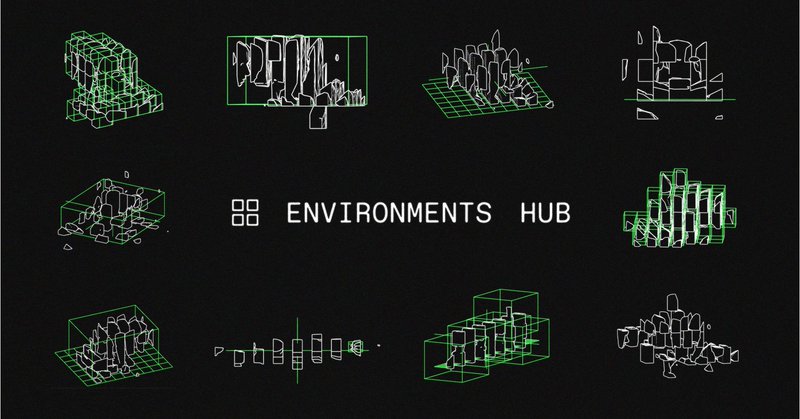

@AndyKhris2 we're building a community. we'd love for you to join. https://t.co/8NwezP5Mif

primeintellect.ai

RL environments are the playgrounds where agents learn. Until now, they’ve been fragmented, closed, and hard to share. We are launching the Environments Hub to change that: an open, community-powered...

0

4

19