Edward Johns

@Ed__Johns

Followers: 3K
Following: 897
Media: 193
Statuses: 400

Associate Professor and Director of the Robot Learning Lab at Imperial College London.

London, UK

Joined January 2019

RT @DJiafei: 1/ 🚀 Announcing #GenPriors, the CoRL 2025 workshop on Generalizable Priors for Robot Manipulation! 📍 Seoul, Korea 📅 Sat 27 S…

I am very happy and proud today that my student Norman Di Palo (@normandipalo) has just passed his PhD viva! Norman has had a very creative few years in my lab, and I've learned a lot from his insights and curiosity. Congratulations Norman, it has been a pleasure working with

Vitalis and I had a cracking chat about "Instant Policy" with @micoolcho and @chris_j_paxton. Thanks for the invite! If you want to know how to really get in-context learning working in robotics, @vitalisvos19 and I take you through it in the video below 👇

Ep#13 with @vitalisvos19 & @Ed__Johns on Instant Policy: In-Context Imitation Learning via Graph Diffusion. Co-hosted by @chris_j_paxton & @micoolcho

RT @RoboPapers: Full episode coming soon! Geeking out with @vitalisvos19 & @Ed__Johns on Instant Policy: In-Context Imitation Learning via…

A few years ago, legged humanoids walking around the ICRA exhibition were the new thing. This time, it's the year of the hands! Tons and tons of humanoid hands! #ICRA2025

At #ICRA2025, I'm about to present "R+X: Retrieval and Execution from Everyday Human Videos". By using a VLM for retrieval and in-context IL for execution, robots can now learn from just videos of humans! Led by @geopgs and @normandipalo. See:

RT @normandipalo: we open sourced the code to transform human videos into robot trajectories, so you can train robots with your hands 👐🏻 w…

Tomorrow morning at #ICRA2025, I will be presenting our findings on whether robots can learn dual-arm tasks from just a single demonstration. (Spoiler: they can!) Come along! This was led by my excellent PhD student Yilong Wang. Paper & videos here:

Thank you very much to @Andrey__Kolobov, @shahdhruv_, @AlexBewleyAI, and the rest of the organisers for delivering an excellent and packed-out workshop!

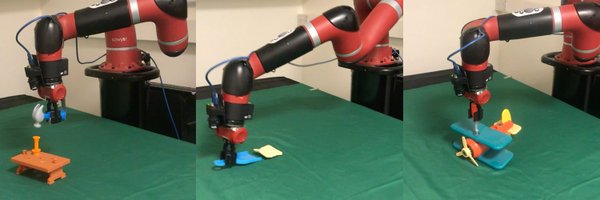

Vitalis (@vitalisvos19) and I were really honoured today to win the Best Paper Award at the ICLR 2025 Robot Learning Workshop for our paper "Instant Policy". The video below shows Instant Policy in action… A single demonstration is all you need! See:

This was led by my excellent student Vitalis Vosylius (@vitalisvos19), in the final project of his PhD. To read the paper and see more videos, please visit (7/7)

Today, we are presenting "Instant Policy" in our #ICLR oral! Below is a single uncut video: after just one demonstration of a task, the robot has learned that task instantly. So, we've achieved in-context learning in robotics! See: (1/7) 🧵
