Dillon Laird

@DillonLaird

Followers: 557 · Following: 2K · Media: 47 · Statuses: 205

Working on vision models @LandingAI 🤖 @StanfordEng @uwcse 👨🎓 neovim enthusiast 💻 I help neural networks find local minima 🧠

San Francisco, CA

Joined April 2009

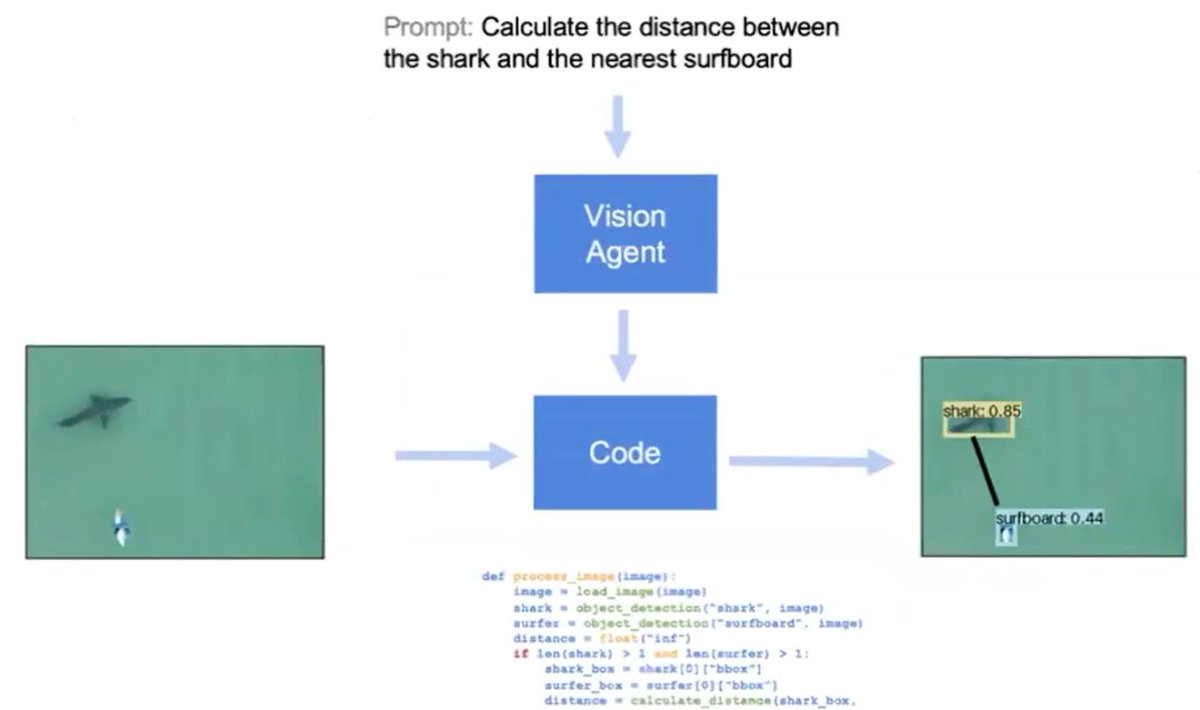

In this new blog post, we introduce a new version of VisionAgent, a modular, agentic AI framework that breaks visual reasoning problems into subtasks, chooses the right vision tools, and applies visual design patterns to solve them. Check it out here:

dillonl.ai

Dillon Laird's Personal Website


I’ve made a new personal website and provided a short summary of the ~2-hour lecture with my

dillonl.ai

Dillon Laird's Personal Website


Thanks for hosting, @tereza_tizkova!

🎙️ Speakers: We got really great feedback on the lightning talks. I want to thank all the speakers who took the time on Saturday to present cool demos. These founders and AI companies are definitely worth following: 📢 Vasek Mlejnsky (@mlejva) - Founder and CEO of @e2b_dev


RT @AndrewYNg: A decision on SB-1047 is due soon. Governor @GavinNewsom has said he's concerned about its "chilling effect, particularly in…


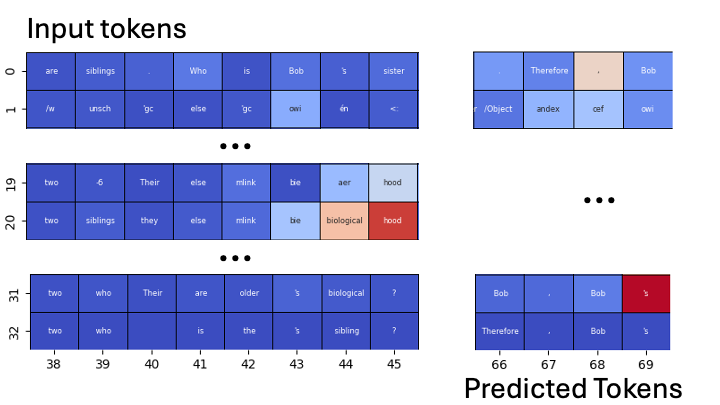

🧵 The internal output layer is closest to the token “hood”, which probably doesn’t mean much here because the internal output layers are likely not on the same manifold as the final output layers. You can find the code here:

github.com

A simple repo for viewing attention maps of llama 3.1 - dillonalaird/llama-attn-maps
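One common way to ask which token an internal layer is "closest" to is the so-called logit lens: normalize the intermediate hidden state the way the model normalizes its final one, multiply by the output (unembedding) matrix, and take the top-scoring token. The repo may compute this differently, so treat the following as a minimal pure-Python sketch under stated assumptions: the vocabulary, matrix values, and the names `rms_norm` and `logit_lens` are hypothetical, and the RMSNorm here uses a gain of 1 instead of Llama's learned weights.

```python
import math


def rms_norm(h, eps=1e-5):
    """RMSNorm with an assumed gain of 1 (Llama's real final norm has learned weights)."""
    scale = 1.0 / math.sqrt(sum(x * x for x in h) / len(h) + eps)
    return [x * scale for x in h]


def logit_lens(hidden, unembed, vocab, apply_norm=True):
    """Project an intermediate hidden state onto the vocabulary.

    hidden:  list of d floats (one layer's output at one position)
    unembed: list of len(vocab) rows, each a list of d floats (output matrix)
    Returns the highest-scoring token and its logit.
    """
    h = rms_norm(hidden) if apply_norm else hidden
    # One logit per vocabulary entry: dot product of its row with the state.
    logits = [sum(w * x for w, x in zip(row, h)) for row in unembed]
    best = max(range(len(vocab)), key=lambda i: logits[i])
    return vocab[best], logits[best]


# Toy example: 3-token vocabulary, 4-dim hidden states, made-up numbers.
vocab = ["hood", "car", "the"]
unembed = [
    [1.0, 0.0, 0.5, 0.0],
    [0.0, 1.0, 0.0, 0.5],
    [0.2, 0.2, 0.2, 0.2],
]
layer_output = [0.9, 0.1, 0.4, -0.2]

token, score = logit_lens(layer_output, unembed, vocab)
print(token)  # prints: hood
```

On a real model, `hidden` would be one layer's output at a given position and `unembed` the model's output projection. The caveat in the thread still applies: if intermediate states do not lie on the same manifold as the final layer's outputs, the top token from this projection may carry little meaning.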


RT @AIatMeta: Introducing Meta Segment Anything Model 2 (SAM 2) — the first unified model for real-time, promptable object segmentation in…


RT @PascalMettes: Vision-language models benefit from hyperbolic embeddings for standard tasks, but did you know that hyperbolic vision-lan…


RT @mervenoyann: Forget any document retrievers, use ColPali 💥💥. Document retrieval is done through OCR + layout detection, but it's overki…
