David G. Rand @dgrand.bsky.social

@DG_Rand

Followers: 15K · Following: 16K · Media: 654 · Statuses: 11K

Prof @cornell - I'm more active on BlueSky at @dgrand.bsky.social

Ithaca, NY

Joined June 2012

🚨Out in Science!🚨 Conspiracy beliefs famously resist correction, ya? WRONG: We show brief convos w GPT4 reduce conspiracy beliefs by ~20%! -Lasts over 2mo -Works on entrenched beliefs -Tailored AI response rebuts specific evidence offered by believers https://t.co/3Rg79Cx5id 1/

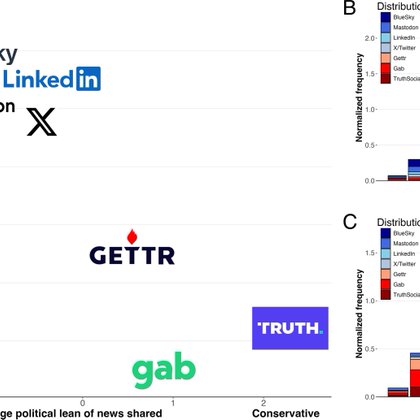

🚨Out in PNAS🚨 Examining news on 7 platforms: 1)Right-leaning platforms=lower quality news 2)Echo-platforms: Right-leaning news gets more engagement on right-leaning platforms, vice-versa for left-leaning 3)Low-quality news gets more engagement EVERYWHERE https://t.co/kRzHK4YP9Q

NEW: @oiioxford researchers analysed 10 million posts across 7 social media platforms. They found: 🗳️ Lower-quality news is shared more on right-leaning platforms 📈 Posts aligning with a platform’s politics get more engagement ⚡ Across all platforms, low-quality posts

New video! Watch Dr Mohsen Mosleh, @oiioxford sharing his insights on his new co-authored research with Jennifer Allen @NYUStern and @David_Rand_ @Cornell, analysing news-sharing trends across seven major social media platforms. Download the full study:

pnas.org

In recent years, social media has become increasingly fragmented, as platforms evolve and new alternatives emerge. Yet most research studies a sing...

Huge credit to lead author Mohsen Mosleh and always-valuable collaborator Jennifer Allen. Full paper in PNAS: https://t.co/kRzHK4YP9Q And explore more of our group’s misinformation research here:

docs.google.com

Papers related to misinformation from David Rand and Gordon Pennycook’s research team Key papers The Psychology of Fake News TiCS 2021 [X thread] [15 minute video summary] Durably reducing conspiracy...

Conclusion: Some social-media dynamics are consistent across ecosystems (eg high-quality news getting less engagement), but others differ substantially (eg partisan engagement). ⚡️Studying behavior across multiple platforms is crucial for understanding news consumption online⚡️

Result 3b: Surprisingly, the pattern isn’t driven by low-quality outlets overperforming, but by the underperformance of top high-quality outlets (NYT, WSJ, WaPo, Reuters, USA Today). Additional analysis shows paywalls don’t explain the difference.

Result 3: Low-quality news gets more engagement on every platform, including left-leaning BlueSky and algorithm-free Mastodon. Models include user fixed effects, accounting for all possible user-level variation. Within a given user, their lower quality links get more engagement.

Result 2: Instead of a universal right-wing engagement advantage, we see *echo-platforms* where right-leaning domains earn more engagement on right-leaning platforms, left-leaning domains earn more on left-leaning ones.

Result 1b: Neutral news is shared more often than partisan news, and high-quality domains appear more frequently than low-quality ones. These plots are normalized within each platform; the dominance of high-quality news is even stronger without normalization across platforms.

Result 1a: Across platforms, average political lean strongly predicts average quality (r = –0.93). More right-leaning domains tend to be lower quality. Mirrors, at the platform level, past findings about conservative users sharing lower-quality news.

Research usually looks at only 1 platform - here we look *across* 7 platforms (X, BlueSky, TruthSocial, Gab, GETTR, Mastodon, LinkedIn) and collect *every* post from Jan 2024 with a link to a news domain. Then we estimate domain quality (expert ratings) + political lean (GPT-4)

Back to X just to post new PNAS paper for @elonmusk: we find @CommunityNotes flags 2.3x more Republicans than Democrats for misleading posts! The issue is Reps sharing misinformation, not fact-checker bias... https://t.co/qnVNWCUHPt

Many thanks to lead author @captaineco_fr who does amazing work on Community Notes and other topics; and the always-wonderful coauthor Mohsen Mosleh For more of my group's work on misinformation, check out this doc:

docs.google.com

Papers related to misinformation from David Rand and Gordon Pennycook’s research team Key papers The Psychology of Fake News TiCS 2021 [X thread] [15 minute video summary] Durably reducing conspiracy...

CONCLUSION: *Clear partisan diff in misinfo sharing not due to political bias on the part of fact-checkers or academics *Undercuts logic offered by Musk+Zuckerberg for eliminating fact-checkers *Platforms should expect more Rep-sanctioning even when using Community Notes!

We also address two poss confounds: *Is it just that more Reps are using X than Dems, throwing off base rates? No, it's the opposite *Is it just that more Dems use CN? No, b/c rated-helpful notes + bridging algorithm require people from both sides rating the notes as helpful

Across all English notes 1/23-6/24: 1.5x more notes proposed on tweets written by Reps than Dems. The partisan diff is MUCH bigger when restricting to "helpful" (ie ~unbiased) notes: 70% on Reps, 30% on Dems. The "vox populi" has concluded that Reps share more misinfo than Dems!

Many studies find Reps do share more misinfo. But those studies could be biased in evaluating what is misinfo. So we analyze Community Notes, where users flag posts & X decides what is misleading using a “bridging algorithm” requiring agreement from users who typically disagree

Accusations of bias against Reps (eg Trump+others suspensions) drove @elonmusk to buy Twitter & gut fact-checking in favor of @CommunityNotes. @finkd did same at @Meta. But greater sanctioning of Reps could just be the result of Reps sharing more misinfo

🚨Out in Nature!🚨 Many (eg Trump JimJordan @elonmusk) have accused social media of anti-conservative bias Is this accurate? We test empirically - and it's more complicated than you might think: conservatives ARE suspended more, but also share more misinfo https://t.co/xVcTUDdCEx
