Cleanlab

@CleanlabAI

Followers: 2K

Following: 643

Media: 206

Statuses: 699

Cleanlab makes AI agents reliable. Detect issues, fix root causes, and apply guardrails for safe, accurate performance.

San Francisco

Joined October 2021

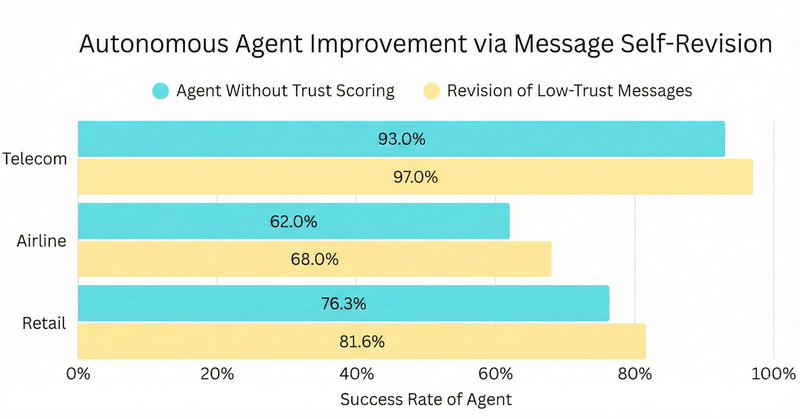

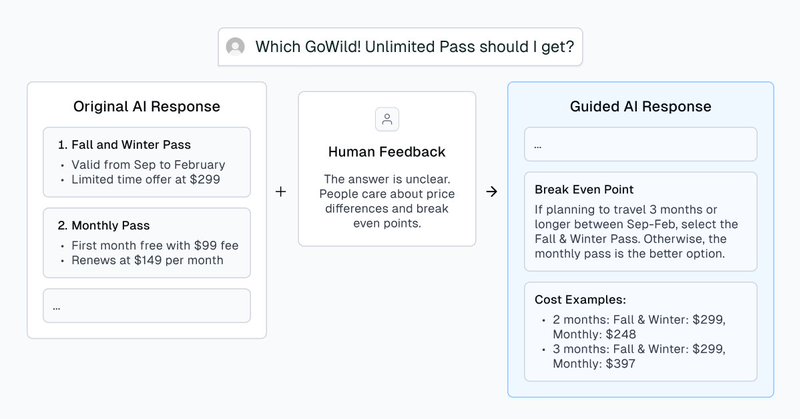

🚀 New from Cleanlab: Expert Guidance. AI agents running multi-step workflows can fail in tiny, trust-breaking ways. Expert Guidance lets teams fix these behaviors with simple human feedback, instantly. ✈️ In one airline workflow: 76% → 90% after only 13 guidance entries.

For anyone who cares about structured output benchmarks as much as I do, here's an early Christmas present 🎁 ! Pretty well thought out from the folks @CleanlabAI. Seems like I'll def be using it to compare LLMs using BAML and DSPy! https://t.co/clQ0BuaX9l

github.com

A Structured Output Benchmark whose 'ground-truth' is actually right - cleanlab/structured-output-benchmark

Where Did $37B in Enterprise AI Spending Go? $19B → Applications (51%) $18B → Infrastructure (49%) Our report includes a snapshot of the Enterprise AI ecosystem, mapped across departmental, vertical AI, and infrastructure. Although coding captures more than half of

Which LLM is better for Structured Outputs / Data Extraction: Gemini-3-Pro or GPT-5? We ran popular benchmarks, but found their "ground truth" is full of errors. To enable reliable benchmarking, we've open-sourced 4 new Structured Outputs benchmarks with *verified* ground-truth

This pipeline can be used to automatically make any agent more reliable. Extensive benchmarks here:

cleanlab.ai

Evaluating autonomous failure prevention for AI agents on the leading customer service AI benchmark.

We discovered how to cut the failure rate of any AI agent on Tau²-Bench, the #1 benchmark for customer service AI. Agents often fail in multi-turn, tool-use tasks due to a single bad LLM output (reasoning slip, hallucinated fact, misunderstanding, wrong tool call, etc). We

The reality: We’re moving from hype to hardening, building the reliability layer AI needs. 🔍 Read the full Cleanlab report → https://t.co/pQRAlTujqj 📰 @Computerworld feature →

computerworld.com

Curtis Northcutt, whose startup focuses on agentic orchestration and finding ways to reduce hallucinations, says companies are already scrambling to get ahead of the fast-evolving tech.

The “Year of the Agent” just got pushed back. Out of 1,837 enterprise leaders, most are struggling with stack churn + reliability. ⚙️ 70% rebuild every 90 days 😬 Less than 35% are happy with their infrastructure 🤖 Most “agents” still aren’t really acting yet

🚧 Even the best AI models still hallucinate. OpenAI’s recent paper on Why Language Models Hallucinate shows why this problem persists, especially in domain-specific settings. For teams implementing guardrails, we put together a short walkthrough:

AI pilots prove intelligence, but AI in production demands reliability. The best teams separate their stack early: 🧠 Core = how AI thinks 🛡️ Reliability = how it stays safe That’s how prototypes become products. 👉 https://t.co/JtOO6rpKhV

AI agents won’t replace humans. Their real power comes when humans guide them. We just added Expert Answers to our platform: 👩🏫 SMEs fix AI mistakes right away 🔁 Fixes are reused across future queries 📈 Accuracy improves, “IDK” drops 10x Full blog: https://t.co/iLq78qcUhg

Launching an AI agent without human oversight is basically launching a rocket without mission control 🚀 Cool for a few minutes… until something breaks. 🕹️ It’s not the rocket that makes the mission succeed. It’s the control center. https://t.co/ZZKaXQzl5v

📍 Live at @AIconference 2025 in San Francisco! Tomorrow, @cgnorthcutt is sharing practical strategies for building trustworthy customer-facing AI systems, and our team is around all day to connect. 👋 Stop by and geek out with us!

Most AI pilots in financial services never make it to production. The reason is simple: they can’t be trusted. Today, Cleanlab + @CorridorAI are fixing that by combining governance with real-time remediation so AI is finally safe to deploy at scale. 🔗 https://t.co/PxxZOuW3LG

AI safety is not a feature. It is infrastructure. AI agents are probabilistic, which means unpredictability is guaranteed. The 4 risk surfaces every team building AI agents must address:
- Responses
- Retrievals
- Actions
- Queries

👉 https://t.co/76czylEas2

🚨 Next week at @AIconference in San Francisco: @cgnorthcutt will share practical strategies with guarantees for building customer-facing AI support agents you can actually trust. 🗓️ Sep 18 | 12:00–12:25 PM 👉 Don’t miss it. https://t.co/8T5HWrNzEn

Today's AI Agent architectures (ReAct, Plan-then-Act, etc) produce too many incorrect responses. Our new benchmark confirms this, evaluating 5 popular Agent architectures in multi-hop Question-Answering. We then added real-time trust scoring to each one, which reduced

💡 Trust Scoring = More Reliable AI Agents. AI engineer Gordon Lim's latest study shows that trust scoring reduces incorrect AI responses by up to 56% across popular agents like Act, ReAct, and PlanAct. 🔍 Explore the full study: https://t.co/LgaRsF5eER
