Changdae Oh

@Changdae_Oh

Followers

330

Following

487

Media

21

Statuses

99

CS PhD student @ UW-Madison; Trustworthy AI

Madison, Wisconsin, USA

Joined December 2021

Does anyone want to dig deeper into the robustness of Multimodal LLMs (MLLMs) beyond empirical observations? Happy to serve exactly this through our new #ICML2025 paper "Understanding Multimodal LLMs Under Distribution Shifts: An Information-Theoretic Approach"!

1

30

113

Heading to SD for #NeurIPS2025 soon! Excited that many students will be there presenting: @HyeonggyuC, @shawnim00, @LeitianT, @seongheon_96, @Changdae_Oh, @Samuel861025, @JiatongLi0418, @windy_lwd, @xuanmingzhangai. Let’s enjoy the AI conference while it lasts. You can find me at

5

15

121

0

0

5

+Eager to coffee chat on MLLM/agent bias, robustness, uncertainty quantification, or anything AI-ish😍

1

0

3

Planning for #NeurIPS2025 poster hunt? Catch ours! 🤠 Before digging into RL, there’s still plenty of unexplored ground in SFT. 🤩 Swing by and grab our insights on making multimodal LLMs robust to query distribution shifts! 📍 Wed Dec 3, 11am - 2pm, Exhibit Hall C,D,E #3902

1

4

16

Ilya Sutskever "Three lines of math can prove all of supervised learning" (4:33) "I have not seen an exposition of unsupervised learning that I found satisfying" (7:50) Optimization objective has little relation to the actual objective you care about! https://t.co/IzRQbSNxKY

19

192

2K

The term “AGI” is currently a vague, moving goalpost. To ground the discussion, we propose a comprehensive, testable definition of AGI. Using it, we can quantify progress: GPT-4 (2023) was 27% of the way to AGI. GPT-5 (2025) is 58%. Here’s how we define and measure it: 🧵

208

415

2K

We hear increasing discussion about aligning LLMs with “diverse human values.” But what’s the actual price of pluralism? 🧮 In our #NeurIPS2025 paper (with @shawnim00), we move this debate from the philosophical to the measurable, presenting the first theoretical scaling law

8

33

287

Your LVLM says: “There’s a cat on the table.” But… there’s no cat in the image. Not even a whisker. This is object hallucination — one of the most persistent reliability failures in multi-modal language models. Our new #NeurIPS2025 paper introduces GLSim, a simple but

3

47

234

🔭 Towards Extending Open dLLMs to 131k Tokens. dLLMs behave differently from autoregressive models: they lack attention sinks, making long-context extension tricky. A few simple tweaks go a long way! ✍️ blog https://t.co/Epf2y2Lnsk 💻 code https://t.co/c04Cj5iT1y

5

50

200

Super excited to present our new work on hybrid architecture models—getting the best of Transformers and SSMs like Mamba—at #COLM2025! Come chat with @nick11roberts at poster session 2 on Tuesday. Thread below! (1)

2

28

69

Collecting large human preference data is expensive—the biggest bottleneck in reward modeling. In our #NeurIPS2025 paper, we introduce latent-space synthesis for preference data, which is 18× faster and uses a network that’s 16,000× smaller (0.5M vs 8B parameters) than

5

59

325

I will be giving a talk at the UPenn CIS Seminar next Tuesday, October 7. More info below: https://t.co/RdnTrxfrjk Thanks @weijie444 for hosting!

3

15

124

Excited to share our #NeurIPS2025 paper: Visual Instruction Bottleneck Tuning (Vittle). Multimodal LLMs do great in-distribution, but often break in the wild. Scaling data or models helps, but it’s costly. 💡 Our work is inspired by the Information Bottleneck (IB) principle,

2

37

242

Multi-Agent Debate (MAD) has been hyped as a collaborative reasoning paradigm — but let me drop the bomb: majority voting, without any debate, often performs on par with MAD. This is what we formally prove in our #NeurIPS2025 Spotlight paper: “Debate or Vote: Which Yields

11

72

455

Everyday human conversation can be filled with intent that goes unspoken, feelings implied but never named. How can AI ever really understand that? ✨ We’re excited to share our new work MetaMind — just accepted to #NeurIPS2025 as a Spotlight paper! A thread 👇 1️⃣ Human

11

62

345

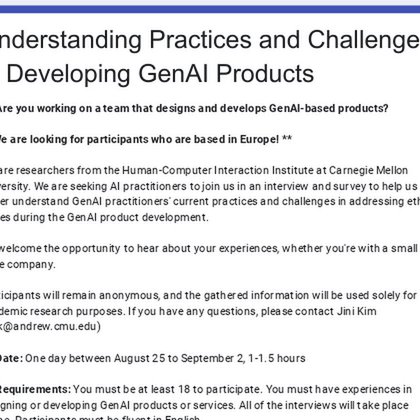

Hello! We are researchers from Carnegie Mellon University. We are looking for AI professionals based in Europe with industry experience in designing Generative AI products and services to share their insights in an interview study. 👉 Sign up here:

docs.google.com

👋 Are you working on a team that designs and develops GenAI-based products? **We are looking for participants who are based in Europe!** We are researchers from the Human-Computer Interaction...

0

8

16

My students called the new CDIS building “state-of-the-art”. I thought they were exaggerating. Today I moved in and saw it for myself. Wow. Photos cannot capture the beauty of the design.

17

14

311

It’s official: I got my tenure! Immensely grateful to my colleagues, students, friends, and family who have supported me on this journey. On, Wisconsin!

178

56

2K