Archiki Prasad

@ArchikiPrasad

2K Followers | 2K Following | 48 Media | 466 Statuses

PhD student @uncnlp in #NLProc, #ML, advised by @mohitban47 | @Apple Scholar in AI/ML | Intern @GoogleDeepMind; Prev (intern): @AIatMeta (FAIR), @allenai_org

Chapel Hill, NC

Joined December 2016

🥳🥳 Honored and grateful to be awarded the 2025 @Apple Scholars in AI/ML PhD Fellowship! ✨ Huge shoutout to my advisor @mohitban47 for his guidance, and many thanks to my lab mates at @uncnlp, past collaborators, internship advisors, and mentors for their support 😊🙏

RT @jmin__cho: Dear prospective PhD students: Check out Jaehong’s lab at NTU Singapore — one of the best places for cutting-edge research i…

RT @EliasEskin: 🇦🇹 I’m on my way to #ACL2025 to help present two papers (🧵s below). ➡️ MAT-Steer (07/30 at 11am), our method for steering L…

RT @EliasEskin: 🚨 Excited to announce GrAInS, our new LLM/VLM steering method that uses gradient-based attribution to build more targeted i…

📢 Excited to share our new paper, where we introduce ✨GrAInS✨, an inference-time steering approach for LLMs and VLMs via token attribution. Some highlights: ➡️ GrAInS leverages contrastive, gradient-based attribution to identify the most influential textual or visual tokens.

🚀 We introduce GrAInS, a gradient-based attribution method for inference-time steering (of both LLMs & VLMs). ✅ Works for both LLMs (+13.2% on TruthfulQA) & VLMs (+8.1% win rate on SPA-VL). ✅ Preserves core abilities (<1% drop on MMLU/MMMU). LLMs & VLMs often fail because

RT @duynguyen772: 🚀 We introduce GrAInS, a gradient-based attribution method for inference-time steering (of both LLMs & VLMs). ✅ Works fo…

RT @jmin__cho: 🥳 Gap year update: I'll be joining @allen_ai/@UW for 1 year (Sep2025-Jul2026 -> @JHUCompSci) & looking forward to working wi…

RT @meetdavidwan: 🎉 Our paper, GenerationPrograms, which proposes a modular framework for attributable text generation, has been accepted t…

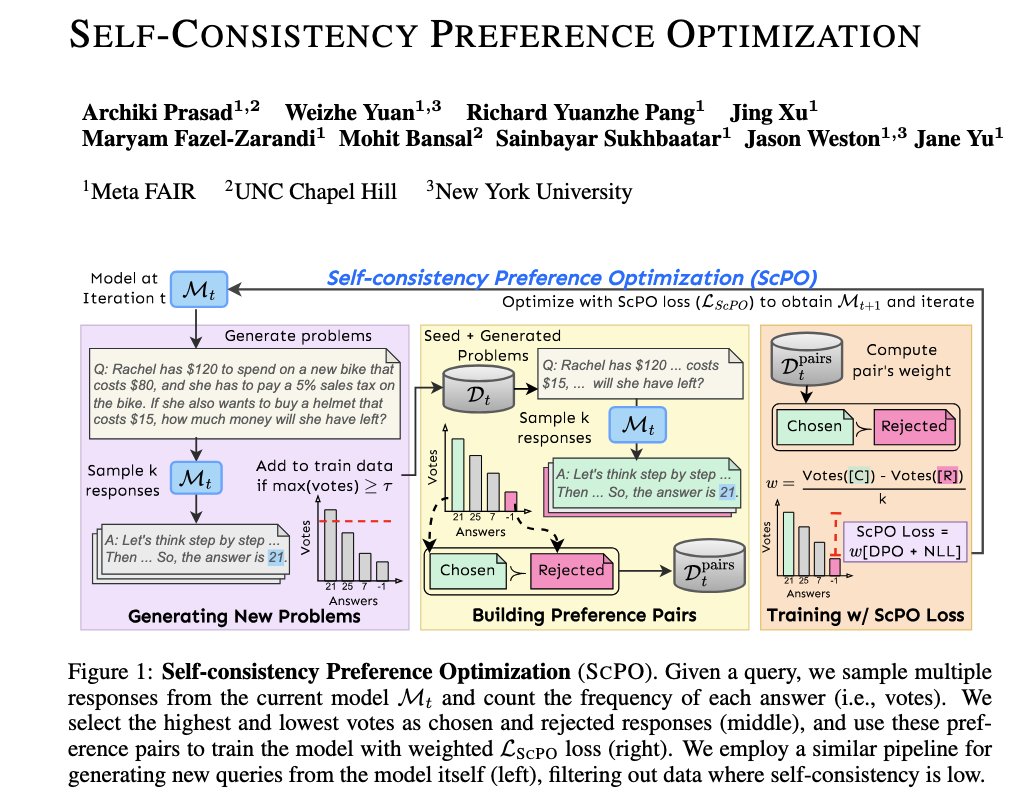

I’ll be at #ICML2025 this week to present ScPO: 📌 Wednesday, July 16th, 11:00 AM-1:30 PM 📍 East Exhibition Hall A-B, E-2404. Stop by or reach out to chat about improving reasoning in LLMs, self-training, or just tips about being on the job market next cycle! 😃

🚨 Self-Consistency Preference Optimization (ScPO) 🚨 - New self-training method without human labels: learn to make the model more consistent! - Works well for reasoning tasks where RMs fail to evaluate correctness. - Close to performance of supervised methods *without* labels,

🎉 Glad to see our work on handling conflicting & noisy evidence and ambiguous queries in RAG systems (via a new benchmark & multi-agent debate method) has been accepted to #COLM2025 @COLM_conf!! 🇨🇦 Congrats to Han on leading this effort. More details in the thread below and.

🚨 Real-world retrieval is messy: queries can be ambiguous, or documents may conflict/have incorrect/irrelevant info. How can we jointly address all these problems? We introduce: ➡️ RAMDocs, a challenging dataset with ambiguity, misinformation, and noise. ➡️ MADAM-RAG, a

RT @HanWang98: 🥳 Excited to share our work -- Retrieval-Augmented Generation with Conflicting Evidence -- on addressing conflict in RAG due…

RT @ZiyangW00: 🚨 Introducing Video-RTS: Resource-Efficient RL for Video Reasoning with Adaptive Video TTS! While RL-based video reasoning…

RT @EliasEskin: 🎉 Very excited to see TaCQ — our work on task-conditioned mixed-precision quantization that draws on interpretability metho…

🥳 Our work UTGen & UTDebug on teaching LLMs to generate effective unit tests & improve code debugging/generation has been accepted to @COLM_conf #COLM2025! Stay tuned for more exciting results -- e.g., using 32B-scale UTGen models to improve debugging with frontier models like.

🚨 Excited to share: "Learning to Generate Unit Tests for Automated Debugging" 🚨 which introduces ✨UTGen and UTDebug✨ for teaching LLMs to generate unit tests (UTs) and to debug code using the generated tests. UTGen+UTDebug improve LLM-based code debugging by addressing 3 key

RT @hyunji_amy_lee: 🥳 Excited to share that I’ll be joining @unccs as a postdoc this fall. Looking forward to working with @mohitban47 & amazing…

RT @mohitban47: 🎉 Yay, welcome @hyunji_amy_lee -- super excited to have you join us as a postdoc! 🤗 Welcome to our MURGe-Lab + @unc_ai_gro…

RT @meetdavidwan: Excited to share GenerationPrograms! 🚀 How do we get LLMs to cite their sources? GenerationPrograms is attributable by d…

RT @jaew00_lee: 🎉 Excited to share that I’ll be starting my CS PhD journey at @UNC @unccs this fall! 🎓 I’ll be working with the renowned @mo…

RT @A_v_i__S: Big news! 🎉 I’m joining UNC-Chapel Hill as an Assistant Professor in Computer Science starting next year! Before that, I’ll…

RT @hirscheran: 🚨 Introducing LAQuer, accepted to #ACL2025 (main conf)! LAQuer provides more granular attribution for LLM generations: use…

RT @rohanpaul_ai: RAG systems struggle to simultaneously handle ambiguous queries, conflicting information, and noise. This paper introduc…
