Amr Farahat

@AmrFouad_

MD/M.Sc/PhD candidate @ESI_Frankfurt and IMPRS for neural circuits @MpiBrain. Medicine, Neuroscience & AI

Frankfurt, Germany

Joined March 2009

🧵 time! 1/15 Why are CNNs so good at predicting neural responses in the primate visual system? Is it their design (architecture) or learning (training)? And does this change along the visual hierarchy?

🚨Preprint Alert: New work with @martin_a_vinck. We elucidate the architectural bias that enables CNNs to predict early visual cortex responses in macaques and humans even without optimization of convolutional kernels. 🧠🤖

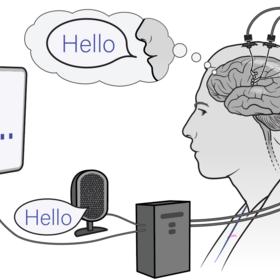

We're excited to announce the Brain-to-Text '25 competition, with a new intracortical speech neuroscience dataset and $9,000 in prizes generously provided by @BlackrockNeuro_! Can you do better than us at decoding speech-related neural activity into text? https://t.co/MKz7WuHjTh

kaggle.com

Decode intracortical neural activity during attempted speech into words

🧵1/ ✨New preprint ✨ LLMs are getting better at answering medical questions. However, they still struggle to spot and fix errors in their own reasoning. That’s a big problem in medicine, where stakes are high and mistakes at any step could be critical. To address this issue,

Firing rates in visual cortex show representational drift, while temporal spike sequences remain stable. https://t.co/Cqpx0Ea9hr Great work by Boris Sotomayor, with Francesco Battaglia.

15/15 It is also important to use various ways to assess model strengths and weaknesses, not just one like prediction accuracy.

14/15 Our results also emphasize the importance of rigorous controls when using black-box models like DNNs in neural modeling. Such controls can show what makes a good neural model and help us generate hypotheses about brain computations.

13/15 Our results suggest that the architecture bias of CNNs is key to predicting neural responses in the early visual cortex, which aligns with results in computer vision, showing that random convolutions suffice for several visual tasks.
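
To make the idea of an untrained convolutional model concrete, here is a minimal sketch of an encoding pipeline: fixed random convolution kernels followed by ReLU, with only a linear ridge readout fitted to responses. Everything here is an assumption for illustration (the function names `random_relu_features` and `ridge_fit`, the filter count, the kernel size, and the synthetic images and responses); it is not the architecture or fitting procedure used in the preprint.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def random_relu_features(images, n_filters=8, kernel_size=5, seed=0):
    """Apply fixed random conv kernels + ReLU; kernels are never trained."""
    rng = np.random.default_rng(seed)
    kernels = rng.normal(size=(n_filters, kernel_size, kernel_size))
    # patches has shape (n_images, H', W', kernel_size, kernel_size)
    patches = sliding_window_view(images, (kernel_size, kernel_size), axis=(1, 2))
    maps = np.einsum("nhwij,fij->nfhw", patches, kernels)
    maps = np.maximum(maps, 0.0)  # ReLU nonlinearity
    return maps.reshape(len(images), -1)


def ridge_fit(X, y, alpha=1.0):
    """Closed-form ridge regression: only this linear readout is fitted."""
    gram = X.T @ X + alpha * np.eye(X.shape[1])
    return np.linalg.solve(gram, X.T @ y)


# Synthetic stand-ins for stimuli and recorded responses (not real data).
rng = np.random.default_rng(1)
images = rng.normal(size=(10, 12, 12))
responses = rng.normal(size=10)

features = random_relu_features(images)
readout = ridge_fit(features, responses, alpha=10.0)
predicted = features @ readout
```

In this sketch the only learned parameters are the readout weights, so any predictive power has to come from the fixed convolution-plus-ReLU structure rather than from optimized kernels.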

12/15 We found that random ReLU networks performed the best among random networks and only slightly worse than the fully trained counterpart.

11/15 Then we tested the ability of random networks to support texture discrimination, a task known to involve early visual cortex. We created Texture-MNIST, a dataset that allows training on two tasks: object (digit) recognition and texture discrimination.

10/15 We found that trained ReLU networks are the most V1-like in terms of orientation selectivity (OS). Moreover, random ReLU networks were the most V1-like among random networks and even on par with other fully trained networks.

9/15 We quantified the orientation selectivity (OS) of artificial neurons using circular variance and calculated how their distribution deviates from the distribution of an independent dataset of experimentally recorded V1 neurons.
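
For readers unfamiliar with the metric, circular variance is commonly defined as CV = 1 - |Σ_k r_k exp(2iθ_k)| / Σ_k r_k, where r_k is the response at orientation θ_k. The sketch below uses that standard definition; the function name `circular_variance`, the eight sampled orientations, and the toy tuning curves are assumptions for illustration, and the thread does not say how the comparison between distributions was computed.

```python
import numpy as np


def circular_variance(responses, orientations_deg):
    """Circular variance of an orientation tuning curve.

    0 means the unit responds to a single orientation; 1 means no
    orientation preference. Assumes non-negative responses.
    """
    r = np.asarray(responses, dtype=float)
    theta = np.deg2rad(np.asarray(orientations_deg, dtype=float))
    total = r.sum()
    if total <= 0:
        return float("nan")  # undefined for a silent unit
    # Angles are doubled because orientation is periodic over 180 degrees.
    resultant = np.abs(np.sum(r * np.exp(2j * theta)))
    return float(1.0 - resultant / total)


orientations = np.arange(0, 180, 22.5)  # 8 evenly spaced orientations
flat_tuning = np.ones_like(orientations)  # responds equally to everything
sharp_tuning = np.zeros_like(orientations)
sharp_tuning[2] = 1.0  # responds only at 45 degrees

cv_flat = circular_variance(flat_tuning, orientations)
cv_sharp = circular_variance(sharp_tuning, orientations)
```

Here `cv_flat` comes out at 1 (up to floating-point error) and `cv_sharp` at 0, the two ends of the selectivity scale.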

8/15 ReLU was introduced to DNNs inspired by the sparsity and input-output function of biological neurons. To test its biological relevance, we looked for characteristics of early visual processing: orientation selectivity and the capacity to support texture discrimination.

7/15 Importantly, these findings hold true both for firing rates in monkeys and human fMRI data, suggesting their generalizability.

6/15 Even when we shuffled the trained weights of the convolutional filters, V1 models were way less affected than IT
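
One way such a shuffling control could look is sketched below: the values of a trained kernel tensor are permuted, which preserves the weight distribution (mean, variance, histogram) while destroying the learned spatial structure. The helper name `shuffle_conv_weights`, the tensor shape, and the choice to permute across the whole layer are assumptions; the thread does not specify the exact shuffling scheme.

```python
import numpy as np


def shuffle_conv_weights(weights, rng):
    """Return a copy of `weights` with its values randomly permuted.

    The set of values is unchanged, so the weight distribution is
    preserved; only their arrangement within the layer is scrambled.
    """
    flat = weights.reshape(-1)
    return rng.permutation(flat).reshape(weights.shape)


rng = np.random.default_rng(0)
# Stand-in for one trained layer: (out_channels, in_channels, height, width).
trained_kernels = rng.normal(size=(16, 3, 5, 5))
shuffled_kernels = shuffle_conv_weights(trained_kernels, rng)
```

If a model's predictions barely change after this kind of shuffle, the specific learned kernel configuration is contributing little beyond the weight statistics and the architecture.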

5/15 This means that predicting responses in higher visual areas (e.g., IT, VO) strongly depends on precise weight configurations acquired through training in contrast to V1, highlighting the functional specialization of those areas.

4/15 We quantified the complexity of the models' transformations and found that ReLU models and max pooling models had considerably higher complexity. Complexity explained substantial variance in V1 encoding performance in comparison to IT (63%) and VO (55%) (not shown here)

3/15 Surprisingly, we found that even training simple CNN models directly on V1 data did not improve encoding performance substantially, unlike for IT. However, that was only true for CNNs using ReLU activation functions and/or max pooling.

2/15 We found that training CNNs for object recognition doesn’t improve V1 encoding as much as it does for higher visual areas (like IT in monkeys or VO in humans)! Is V1 encoding more about architecture than learning?
