Amartya Sanyal

@AmartyaSanyal

Followers: 2K · Following: 3K · Media: 123 · Statuses: 2K

Assistant Professor @DIKU_Institut @UCPH_Research, Ex Postdoc @MPI_IS @ETH_ AI Center @CompSciOxford, @StHughsCollege, @turinginst, @cseatiitk

Copenhagen, Denmark

Joined June 2014

Ad for a postdoc position with me at the University of Copenhagen, with a relatively tight deadline. Note: the Assistant Professor position is essentially a senior postdoc position. We will discuss which position is more suitable for you after shortlisting.

RT @mlsec: 🚨 Got a great idea for an AI + Security competition? @satml_conf is now accepting proposals for its Competition Track! Showcase…

RT @mlsec: We’re happy to announce the Call for Competitions for @satml_conf! The competition track has been a highlight of SaTML, featuri…

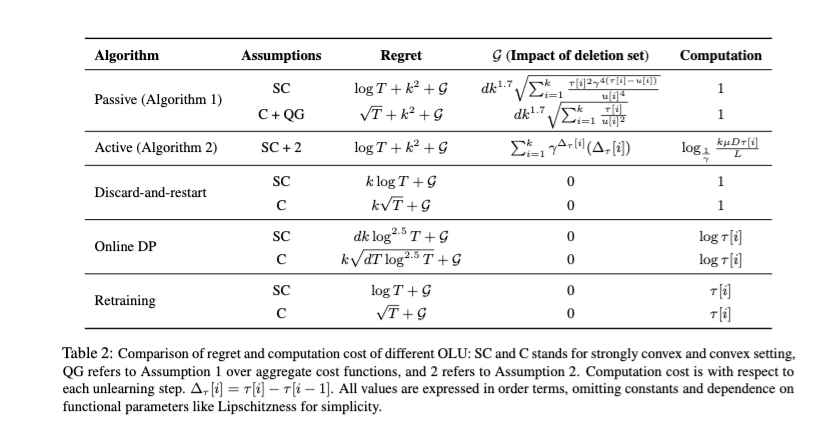

🚨 New Paper: Online Learning and Unlearning 🚨 We look at learning and unlearning in the online setting, where both learning and unlearning requests arrive continuously over time. Led by @yaxi_hu and joint work with @bschoelkopf.

arxiv.org

We formalize the problem of online learning-unlearning, where a model is updated sequentially in an online setting while accommodating unlearning requests between updates. After a data point is...

What if learning and unlearning happen simultaneously, with unlearning requests between updates? Check out our work on online learning and unlearning: algorithms with certified unlearning, low computational overhead, and almost no additional regret.

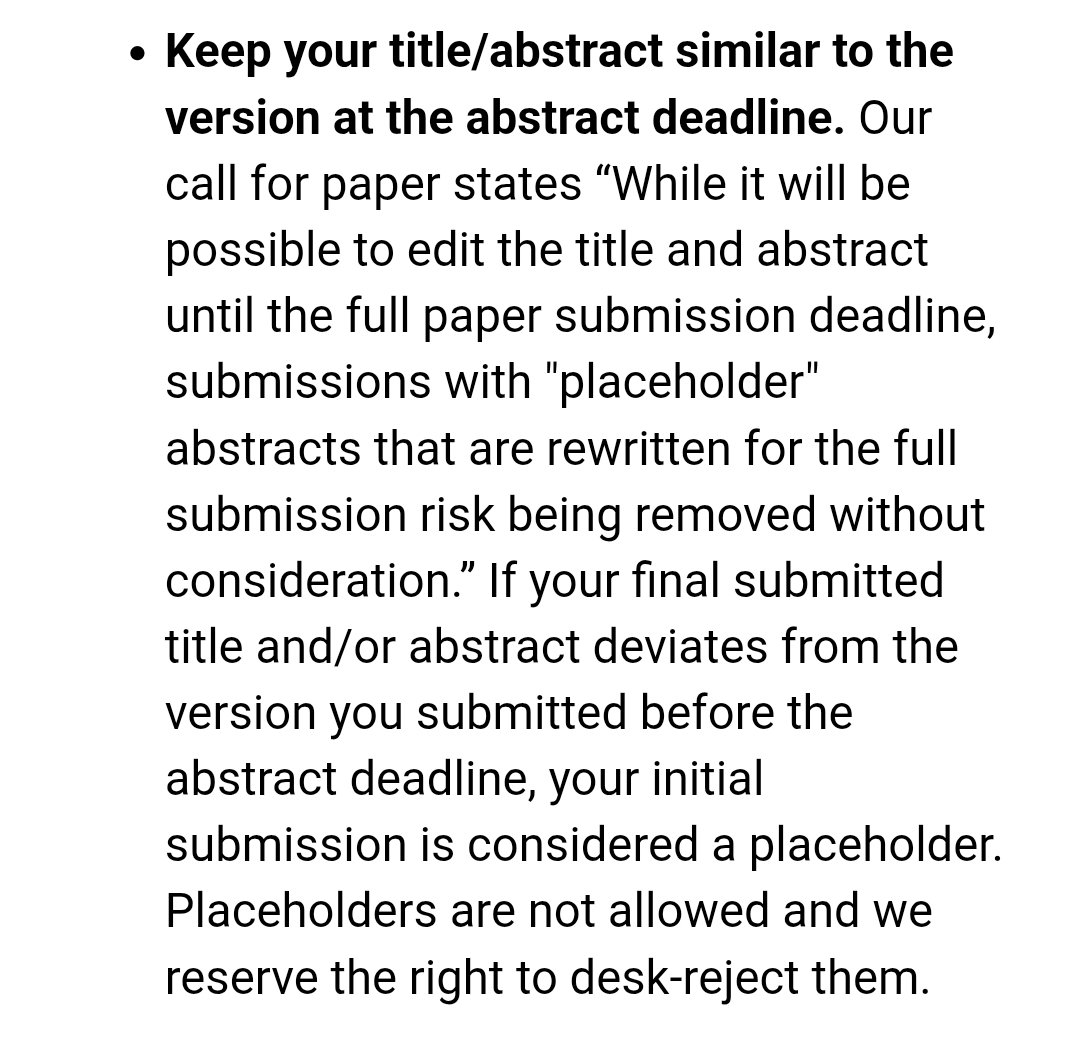

Yes @NeurIPSConf, this is confusing. The information available at the abstract deadline was that the abstract is editable but can't be a placeholder. My understanding was that we can't materially change it, but edits are possible. Is that not true anymore? Then why is it still editable?

What is the point of an editable abstract if it cannot "deviate"? Also, what is the point of three different submission dates (abstract, full paper, and supplementary)? It just adds unnecessary complications IMO. @NeurIPSConf

RT @yaxi_hu: What if learning and unlearning happen simultaneously, with unlearning requests between updates? Check out our work on onlin…

Tomorrow at #AISTATS2025, I'll present our poster on how label noise in the training data and distribution shift at test time don't play well together. It is an instance where accuracy-on-the-line breaks; we call this accuracy-on-the-wrong-line. @yaxi_hu @yixinwang_ @bschoelkopf, Yaodong, Yian

RT @kasperglarsen: I have an opening for a post doc position in machine learning theory, with a deadline of June 1st. Please share and appl…

international.au.dk

Vacancy at Computer Science, Dept. of, Aarhus University

RT @stanleyrwei: New unlearning work at #ICLR2025! We give guarantees for unlearning a simple class of language models (topic models), and…

arxiv.org

Machine unlearning algorithms are increasingly important as legal concerns arise around the provenance of training data, but verifying the success of unlearning is often difficult. Provable...

I will be in Singapore for #ICLR2025. Happy to chat about all things ML & privacy, robustness, unlearning, etc. We will present 3 papers:
1. DP alignment in LLMs
2. Unlearning in topic models
3. Detecting backdoors

arxiv.org

Current backdoor defense methods are evaluated against a single attack at a time. This is unrealistic, as powerful machine learning systems are trained on large datasets scraped from the internet,...

#SaTML2025 is nearly here, and we do not have late registration fees. Join us in Copenhagen at this young conference to talk about the security, privacy, safety, fairness, and robustness (among other things) of machine learning algorithms. Registration:

eventsignup.ku.dk

No plans for April 9–11 yet? Why not spend an amazing week in beautiful Copenhagen 🇩🇰 exploring cutting-edge research on trustworthy machine learning. Join us at SaTML 2025, the premier conference on AI security, AI privacy, and AI fairness! 👉

Very shortly at @RealAAAI, @alexandrutifrea and I will be giving a tutorial on the impact of the quality and availability of labels and data on the privacy, fairness, and robustness of ML algorithms. See here: @MLSectionUCPH @DIKU_Institut @GoogleDeepMind

I was very lucky and happy to be awarded the Villum Young Investigator grant yesterday. Looking forward to the resulting research in unlearning, privacy, and online learning, supported by @VILLUMFONDEN. Hiring motivated PhD students and postdocs, especially postdocs.

villumfonden.dk

Nineteen promising researchers working within the technical and natural sciences have received funding of DKK 150 million for their research projects.

Really enjoyed hearing about this paper the first time and then reading it later!

📢📢 Happy to share that our paper on unlearning evaluations has been accepted to ICLR 2025 🇸🇬. 📜: Thanks to my great co-authors @SethInternet @thegautamkamath @jimmy_di98 @ayush_sekhari @yiweilu.

RT @FazlBarez: 🚨 New Paper Alert: Open Problems in Machine Unlearning for AI Safety 🚨 Can AI truly "forget"? While unlearning promises data…