Alexandre TL

@AlexandreTL2

Followers

743

Following

42K

Media

188

Statuses

516

Intern at @LinguaCustodia in Paris. (Pre|post)-training LLMs

Montpellier, France

Joined January 2020

muP works great for Mamba! Zero-shot transferred the learning rate from a 172k model to a 105 model. Now part of 👇🧵

2

8

69

RT @SeunghyunSEO7: btw, i wrote a post about "how to scale" based on what i've learned over the past few months. it covers muP, HP scaling…

0

79

0

Got the chance to test out the nGPT implementation of @NousResearch but unfortunately their baseline (and their nGPT) is far behind nanoGPT, let alone modded-nanoGPT (11/08/24 record so very old, before Muon and stuff)

5

4

55

that's it, hope that I will be able to post more about this stuff, this is really interesting! @bfspector @simran_s_arora @AaryanSinghal4

1

0

4

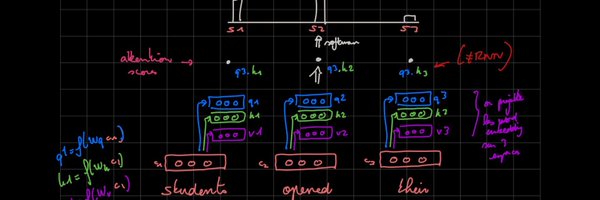

if we received a new chunk of sequence (Q5, K5, V5), we would have: O5 = Q5 @ S + causal(Q5 @ K5^T) @ V5.

1

0

3
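
The chunk update in the last post (O5 = Q5 @ S plus a causally masked term within the chunk) can be checked numerically. The sketch below is only an illustration under assumptions not stated in the thread: unnormalized linear attention with no feature map, S taken to be the running sum of K_i^T @ V_i over all earlier chunks, and hypothetical function names. It compares the chunk-by-chunk computation against the full causal computation.

```python
import numpy as np

def causal_linear_attention(Q, K, V):
    """Reference: causally masked (Q @ K^T) @ V over the whole sequence."""
    return np.tril(Q @ K.T) @ V

def chunked_linear_attention(Q, K, V, chunk_size):
    """Same result, computed chunk by chunk with a carried state S.

    Assumption: S accumulates K_i^T @ V_i over all previous chunks.
    """
    seq_len, d_k = Q.shape
    d_v = V.shape[1]
    S = np.zeros((d_k, d_v))
    outputs = []
    for start in range(0, seq_len, chunk_size):
        Qc = Q[start:start + chunk_size]
        Kc = K[start:start + chunk_size]
        Vc = V[start:start + chunk_size]
        inter = Qc @ S                      # contribution of earlier chunks
        intra = np.tril(Qc @ Kc.T) @ Vc     # causal part inside the chunk
        outputs.append(inter + intra)
        S = S + Kc.T @ Vc                   # fold this chunk into the state
    return np.concatenate(outputs, axis=0)

rng = np.random.default_rng(0)
Q = rng.standard_normal((20, 8))
K = rng.standard_normal((20, 8))
V = rng.standard_normal((20, 16))
match = np.allclose(
    causal_linear_attention(Q, K, V),
    chunked_linear_attention(Q, K, V, chunk_size=4),
)
print("chunked matches full causal:", match)
```

With a chunk size of 4 and 20 tokens, the fifth iteration of the loop corresponds to the (Q5, K5, V5) case: the state S summarizes chunks 1 to 4, and only the small within-chunk product needs a causal mask.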