Sandro Pezzelle

@sandropezzelle

Followers: 813 · Following: 2K · Media: 39 · Statuses: 384

Assistant Professor at the University of Amsterdam. #NLProc #AI #CogSci #interpretability

Amsterdam, The Netherlands

Joined December 2012

Can we use "#LLMs instead of Human Judges?" To answer this question, we (a team brought together by @ELLISforEurope) conducted a large-scale empirical study across 20 #NLP evaluation tasks! 🚀 Check out JUDGE-BENCH👍👎🤌, now on arXiv: https://t.co/qmOSZQHrfN

#NLProc #genAI #AI

1/5 📣 Excited to share “LLMs instead of Human Judges? A Large Scale Empirical Study across 20 NLP Evaluation Tasks”! https://t.co/BIqZCmToz1 🚀 We introduce JUDGE-BENCH, a benchmark to investigate to what extent LLM-generated judgements align with human evaluations. #NLProc

I'll be at @COLM_conf until Thursday, presenting this paper; check it out at the Wednesday 4:30-6:30 poster session! Would also love to chat with folks about (mechanistic) interpretability and how it can be useful for cog sci / linguistics.

Circuits are a hot topic in interpretability, but how do you find a circuit and guarantee it reflects how your model works? We (@sandropezzelle, @boknilev, and I) introduce a new circuit-finding method, EAP-IG, and show it finds more faithful circuits https://t.co/P1ZlOH2O9n 1/8

Time for panel discussion! At #RepL4NLP at #ACL2024, @_dieuwke_ @PontiEdoardo @megamor2 and @hassaan84s exchange ideas on the importance of representation analysis, data, and generalization in the era of #LLMs! Kudos to our own @seraphinagt for moderating! 🚀 @sigrep_acl #NLProc

It was great! Thanks to the #CMCL organizers for having me 🙏

🎉 Let's start #CMCL2024 @aclmeeting with our first invited speaker, Dr. @sandropezzelle: How do LLMs deal with implicit and underspecified language? 🕵 Let's find out! Come check it out in room Lotus 4 #ACL2024 💫

Time for the first #RepL4NLP at #ACL2024 poster session of the day! 🔥 Come to poster boards 66 to 100 to learn more about the accepted papers! @sigrep_acl @seraphinagt @henryzhao4321

Great invited talk by @PontiEdoardo at the #RepL4NLP workshop at #ACL2024 on eliminating fixed tokenizers and compressing #LLMs' memory for efficiency! 🚀 Thanks, Edo, for being with us! @seraphinagt @sigrep_acl @mariusmosbach @atanasovapepa @henryzhao4321 @peterbhase

Happening now! 🚀🇹🇭

I am attending #ACL2024 in Bangkok with 2 papers on multimodal #NLProc 🇹🇭 🧵 1) "Naming, Describing, and Quantifying Visual Objects in Humans and LLMs" with @sandropezzelle and J. Sprott (poster 12/8 at 14:00 - with a fun game for attendees)

A few months ago, @sandropezzelle and I were awarded a grant by the University of Amsterdam. We proposed a method to bridge #AI representations to human-readable explanations. Our method can be applied to #NLP, #ComputerVision, and #aiinhealthcare.

Consider applying for these positions! Great opportunity at @UvA_Amsterdam

Postdocs in multimodal / modular NLP, CV and NLP at @UvA_Amsterdam @AmsterdamNLP. Deadline: May 31 https://t.co/1IyUtnurZU I am at @iclr_conf, connect if interested

Check out Gecko 🦎: @GoogleDeepMind's latest work looking at how to evaluate text-to-image technology with: 📊 a new benchmark 🕵️ 100K+ human ratings of state-of-the-art T2I models 🤖 a better human-correlated auto-eval metric https://t.co/CyB4YwgYjh

Humans often use non-literal language to communicate, like hyperbole for added emphasis or sarcasm to offer polite criticism with humor. AI systems should be able to decipher the real intent behind these words and respond appropriately! 📜 https://t.co/qoVSjgAOkl

#ACL2024 main🎉

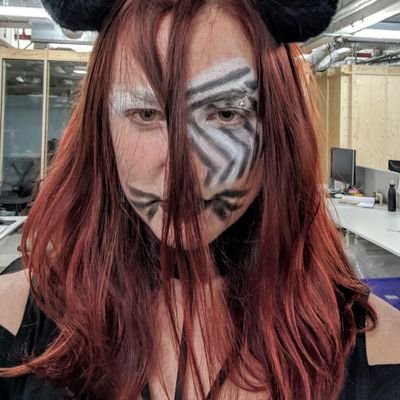

Reposting just because these pics are beautiful ❤️ congrats, Dr. @ecekt2!!

Many thanks to the members of the evaluation committee @sinazarriess @bertugatt @KatiaShutova @wzuidema and Khalil Sima’an. A couple of snapshots of part of the group in the best Dutch style #Rembrandt

🔥Proud supervisor moment 🔥 Congratulations, doctor @ecekt2, for this great achievement! 🤩 You rock! (but also folk, blues, jazz, and more 😅) 🎸🎸 Ad maiora!!

Congratulations to Dr. Ece Takmaz @ecekt2 who successfully defended her PhD thesis “Visual and Linguistic Processes in Deep Neural Networks: A Cognitive Perspective”, proudly co-supervised with @sandropezzelle 🎊🎓 @UvA_Amsterdam @illc_amsterdam

Some very cool recent work from @ChengxuZhuang on visually grounded language learning. Surprisingly, standard text/image repr learning gives great image reprs but doesn't change behavior much on language tasks---how can we use image data for better learning of *language*?

Two papers! Can visual grounding help LMs learn more efficiently? 1. We show that algs like CLIP don't learn language better (https://t.co/JazJksBGf2) 2. We then propose a new one, LexiContrastive Grounding, which does! (https://t.co/gIDBKk4Dpk) Code: https://t.co/Tqx0w9uXkj 🧵

Congratulations to Stefania Milan @annliffey and Don Weenink @FMG_UvA, @tobias_blanke @UvA_Science @UvA_Humanities, Max Nieuwdorp @amsterdamumc, and Linda Amaral Zettler of NIOZ @Plastisphere @IBED_UvA – all recipients of @ERC_Research Advanced Grants 🎉 https://t.co/TUDNrleV0I

uva.nl

The European Research Council (ERC) has awarded prestigious Advanced Grants to UvA academics Prof. Tobias Blanke, Prof. Stefania Milan and Dr Don Weenink. The grants, which amount to 2.5 million...

👶 BabyLM Challenge is back! Can you improve pretraining with a small data budget? BabyLMs for better LLMs & for understanding how humans learn from 100M words New: How vision affects learning Bring your own data Paper track https://t.co/uU12YWwLTe 🧵

BabyLM is back for round two! This time we have: 1. Multimodal track 2. Bring your own data and 3. Paper track! See the CfP for all the juicy details!
