Richard Diehl Martinez

@richarddm1

Followers: 152 · Following: 55 · Media: 5 · Statuses: 59

CS PhD at University of Cambridge. Previously Applied Scientist @Amazon, MS/BS @Stanford.

Joined December 2009

RT @piercefreeman: Text diffusion models might be the most unintuitive architecture around. Like: let's start randomly filling in words in…

RT @GeoffreyAngus: Struggling with context management? Wish you could just stick it all in your model? We’ve integrated Cartridges, a new…

in case ur writing an nlp thesis rn - here's a pretty chunky bib I made that might be useful.

github.com

My Cambridge PhD Thesis (2025). Contribute to rdiehlmartinez/phd-thesis development by creating an account on GitHub.

Training language models is an art, not a science. But I don't think it should be. That's why I've been working on ~Pico~:

www.youtube.com

Introducing PicoLM: A Modular Framework for Hypothesis-Driven Small Language Model Research. For more information visit: https://www.picolm.io Supported by a gr...

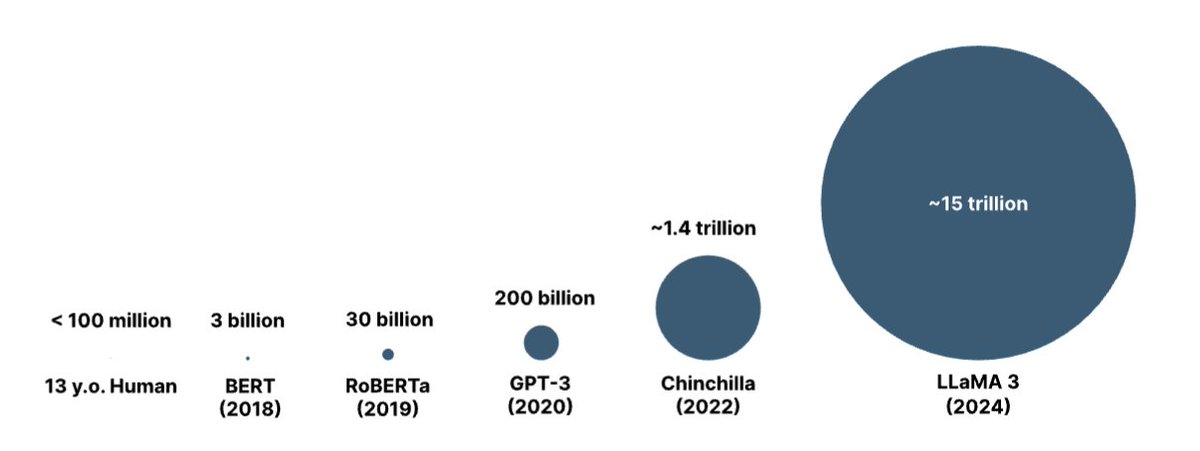

Small language models are worse than large models because they have fewer parameters. Duh! Well, not so fast. In a recent #EMNLP2024 paper, we find that small models have WAY less stable learning trajectories, which leads them to underperform. 📉

arxiv.org

Increasing the number of parameters in language models is a common strategy to enhance their performance. However, smaller language models remain valuable due to their lower operational costs....

Language models tend to perform poorly on rare words, which is a big problem for niche domains! Our Syntactic Smoothing paper (#EMNLP2024) provides a solution 👀

arxiv.org

Language models strongly rely on frequency information because they maximize the likelihood of tokens during pre-training. As a consequence, language models tend to not generalize well to tokens...

RT @pietro_lesci: 🙋‍♂️ My lab mates are at @emnlpmeeting this week: drop by their posters! If you want to know more about our recent work [1]…
