Pluralis Research

@PluralisHQ

Followers

8K

Following

648

Media

12

Statuses

100

We've reached a major milestone in fully decentralized training: for the first time, we've demonstrated that a large language model can be split and trained across consumer devices connected over the internet - with no loss in speed or performance.

94

266

934

RT @_AlexanderLong: Thats a wrap for ICML2025. Incredible to watch the space go from "What are you talking about" to "That's impossible" to….

0

4

0

We also made a @3blue1brown style video explaining the paper at a high level.

Pluralis has a main tack paper at ICML this week and the team is in Vancouver running several events. The best will be the Open Source Mixer we're running with @gensynai and @akashnet_ Thursday night. For anyone interested in decentralised training it should be a great evening.

6

11

76

Pluralis has a main tack paper at ICML this week and the team is in Vancouver running several events. The best will be the Open Source Mixer we're running with @gensynai and @akashnet_ Thursday night. For anyone interested in decentralised training it should be a great evening.

11

14

119

RT @gensynai: Connect with fellow open-source developers, engage with leading minds in decentralized AI (DeAI) and machine learning, and en….

lu.ma

Join Akash Network, Gensyn, and Pluralis Research for an Open Bar & Open Source Mixer—a premier networking event for machine learning professionals—on July…

0

7

0

RT @_AlexanderLong: Using beautiful Grafana dashboards for everything internally, so much nicer than Tensorboard. Wandb still good but does….

0

5

0

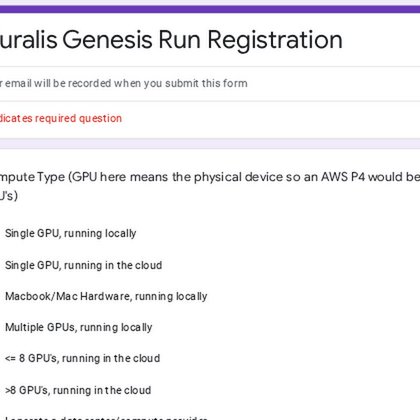

@SameeraRamasin1 Now this is feasible, we will begin a live run of the first community Protocol Model - Pluralis’s Genesis Run - in the coming days. Registration here: EOT.

docs.google.com

7

13

67

@SameeraRamasin1 We also expect this to also have a major impact on centralized training, reducing the importance of high speed networking, making spot-instance based training feasible, and increasing inference speeds.

1

2

31

@SameeraRamasin1 And it is the most significant milestone in our Protocol Learning Research Program.

blog.pluralis.ai

Two enormous, previously disparate fields converge and a path towards the largest models to ever be trained is opened.

1

0

26

@SameeraRamasin1 This unlocks:. 1. Truly community-created and owned base models. 2. A new path to scaling base models beyond anything we have seen to date. It is a result that we set out to achieve almost exactly one year ago today.

blog.pluralis.ai

Collaborative Training of foundation models is closer to actualization than broadly understood. The popular view that low bandwidth node-to-node connections render this infeasible is incorrect.

1

1

33

@SameeraRamasin1 What this means is, for the first time, individuals can pool globally distributed compute to train models far larger than they could alone. As the model is split across nodes, the only constraint is how much compute the protocol can gather.

1

1

21

This is a very high-level summary. All details are in the pre-print (led by @SameeraRamasin1)

1

1

33

This work proves a third path is viable between closed models and today’s open-weight releases, which remain centralized and unsustainable. Community-owned models are the only true open-source AI and open a new path to scale.

blog.pluralis.ai

Developing the true open-source AI

1

4

54