Tikhnov regularised trust-region methods (*cough* Levenberg-Marquardt) oddly use two different approximations to the objective function at each step.

One regularised, one not.

What if we just regularised both?

1/

1

6

35

Replies

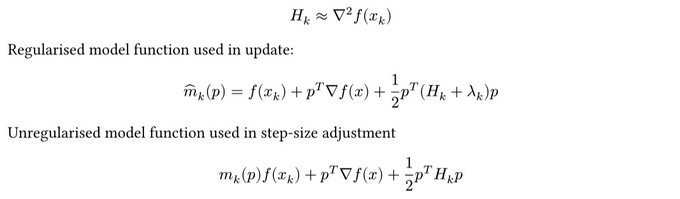

The first model function is minimised to produce the next iterate, this is the one with Tikhnov regularisation.

The second is used to predict how much the model was supposed to decrease, in order to adjust the step-size (trust-region radius.) This one is not regularised.

1

0

4

Tikhnov regularisation is often interpreted as interpolating between the Newton and gradient directions (plus some scaling.)

higher regularisation -> more gradient direction + smaller step-size

lower regularisation -> more Newton direction + larger steps

1

0

4

The typical heuristic to update the amount of regularisation each step is the Levenberg-Marquardt heuristic:

Take the ratio between the the actual decrease of a step and the decrease predicted by a local unregularised model.

1

0

3

If the ratio is close to 1, the model is good and we lower the regularisation next step. If it's closer to 0, the model is poor and we increase the regularisation.

The unregularised model is not the one used by the Tikhnov regularised model. This motives the "damped" ratio.

1

0

3

To see if it makes any difference to use the regularised model for step-size adjustment, I implemented a custom

`search` in Optimistix () to test it.

The GitHub gist is available here:

1

0

1

Because Optimistix is modular, by implementing this search we also implement a damped ratio

Levenberg-Marquardt and a new damped ratio BFGS trust-region method.

The latter would normally be very hard to implement without writing BFGS from scratch!

1

0

3

So how does it fair? Unsurprisingly, it behaves basically identically to the original trust-region update.

Here is the BFGS algorithm on some select minimisation test problems (averaged over a few different initialisations)

1

0

2

Using the damped ratio LM variant to optimise the parameters of a simple ODE () we see no difference between it and the original LM algorithm.

1

0

2

This means that both algorithms made the same choice of when to increase/decrease the size of the trust-region, which is reasonable.

1

0

2

This example was taken from an offhand comment in "Training Deep and Recurrent Networks with Hessian-Free Optimisation" by Martens and Sutskever.

1

0

4